Learn to Design a Voice User Interface

Learning Objectives

After completing this unit, you’ll be able to:

- Identify differences between voice user interface design and other user interfaces.

- Describe key characteristics of good conversational design.

Voice User Interfaces Compared to Other Interfaces

Compared with a graphical user interface (GUI), voice and conversations present new challenges because the end user can’t see the options available. A simple example of this is planning a trip. When you plan a trip online with a website, it’s fairly simple to enter data into a series of boxes to represent where you’re leaving from, where you’re going to, what you’re going to do, and the date.

If you tried to do this with a typical automated phone system, it might sound something like this: “If this is a domestic trip, press 1. If this is an international trip, press 2. If you are going hiking, press 3. If you are going surfing, press 4.” Is this a realistic option? Not especially, but it highlights some of the challenges of traditional automation.

Now let’s examine a conversational interface. If you were to call and set up a trip with a person, the conversation might go something like this:

- Travel planner: “What are we doing for your next trip?”

- You: “Well, I want to go hiking in Hawaii.”

- Travel planner: “Sounds exciting! When do you want to go, and where are you leaving from?”

- You: “Let’s plan for a month away; I’m leaving from Seattle.”

Natural responses and the ability to handle inputs that aren’t quite what you expected are key factors that make conversational interfaces so powerful and intuitive. With Alexa, a conversation like this doesn’t happen by accident; it’s a part of the skill that you explicitly design and plan.

So how do you go about building a voice user interface? There are many important things to consider—here are some guidelines to get started.

Understanding the Voice Design Process

The first and most important step to designing a voice user interface is to clearly establish the skill’s purpose. Knowing what you can, and can’t, do with voice is critical to the skill’s success. Once you know what you want to do, creating scripts and flows can help you think through the different details and variations for the interactions.

An important part of this process is to write for how people talk, not how they read and write. Visualize the two sides of the conversation, and read them out loud to see how they flow.

You can check out the Alexa Design Guide for more information on this topic.

Consider What Users Can Say

Conversational UI consists of turns: a person says something, and Alexa responds. This is a new form of interaction for many people, so make sure that you’re aware of the ways in which users participate in the conversation so that you can design for them. A great voice experience allows for the many ways people can express meaning and intent.

Before we go further, let’s get a quick introduction to the important terms and concepts in how users interact with Alexa.

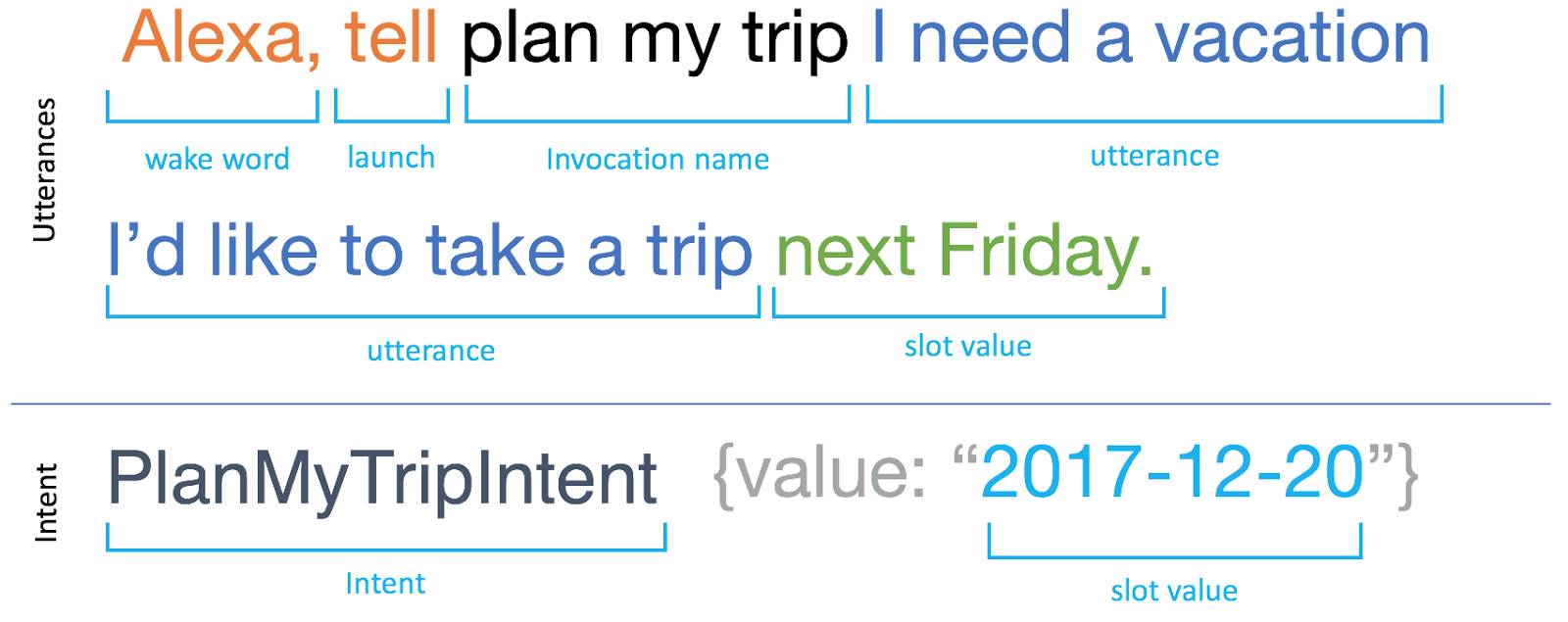

With the scripts you created during the design process, you can begin to break down your expected utterances into intents.

Let’s quickly recap the key parts of this example.

- Wake word: the key for Alexa to start listening.

- Launch: a connector word that links the wake word and the invocation name. Supported words include ask, tell, open, launch, run, begin, and more.

- Invocation name: a custom phrase that customers say to invoke your skill. It is generally two to three words long and closely related to the skill’s functionality.

- Utterance: the specific phrase that the user wants the skill to act on.

- One-shot utterance: the top example is a one-shot utterance, where all information is given at once and fully satisfies what is needed to activate an intent.

- Slot value: a variable part of an utterance. In our travel-planning scenario, the starting location, destination, activity, and date are all slot values.

- Intent: an action that the skill can handle. A single intent can have many different utterances, with or without slots, to account for what users may say.
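To make the terms above concrete, here is a minimal Python sketch that splits one spoken request into its wake word, launch word, invocation name, and utterance. It is an illustration only: Alexa performs this step itself, and the `split_request` helper, the `SpokenRequest` type, and the "travel planner" invocation name are all hypothetical names invented for this example.

```python
from typing import NamedTuple, Optional

# A few of the supported launch words listed above.
LAUNCH_WORDS = ("ask", "tell", "open", "launch", "run", "begin")


class SpokenRequest(NamedTuple):
    wake_word: str
    launch_word: str
    invocation_name: str
    utterance: str


def split_request(text: str, invocation_name: str,
                  wake_word: str = "alexa") -> Optional[SpokenRequest]:
    """Split a one-shot request into its named parts (toy illustration)."""
    words = text.lower().replace(",", "").split()
    if len(words) < 2 or words[0] != wake_word or words[1] not in LAUNCH_WORDS:
        return None

    # The invocation name must directly follow the launch word.
    name_words = invocation_name.lower().split()
    end = 2 + len(name_words)
    if words[2:end] != name_words:
        return None

    # Everything after the invocation name is the utterance.
    rest = words[end:]
    if rest and rest[0] == "to":  # drop a leading connector such as "to"
        rest = rest[1:]
    return SpokenRequest(words[0], words[1], " ".join(name_words), " ".join(rest))


request = split_request(
    "Alexa, ask travel planner to plan a trip to Hawaii next Friday",
    invocation_name="travel planner",
)
print(request)
```

Because this request names the destination and date in a single sentence, it is also an example of a one-shot utterance.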

In order to map what people say to the proper intents, it’s important to provide examples of different types of utterances that people can say. For example, include partial information, synonyms, and even extra information from users.

- I’d like to take a trip next Friday.

- I guess I want to go next Friday.

- I want to go hiking next Friday. (Note: In this example, the user provided an extra slot value for the activity they want to do, in addition to the date.)
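The sample utterances above can be thought of as patterns with slots in them. The sketch below models one intent with three samples and pulls slot values out of what the user says. It is a simplification under stated assumptions: Alexa's language understanding is far more flexible than pattern matching, and the intent name `PlanMyTripIntent`, the slot names `activity` and `travelDate`, and the helper functions are hypothetical.

```python
import re
from typing import Dict, Optional

# Hypothetical intent: one action, several sample utterances, optional slots.
PLAN_TRIP_INTENT = {
    "name": "PlanMyTripIntent",
    "samples": [
        "i'd like to take a trip {travelDate}",
        "i guess i want to go {travelDate}",
        "i want to go {activity} {travelDate}",
    ],
}


def sample_to_regex(sample: str) -> "re.Pattern":
    """Turn a sample such as 'go {activity}' into a regex with named groups."""
    # re.split with a capture group yields literal text at even positions
    # and slot names at odd positions.
    parts = re.split(r"\{(\w+)\}", sample)
    pieces = []
    for index, part in enumerate(parts):
        if index % 2 == 0:
            pieces.append(re.escape(part))
        else:
            pieces.append(f"(?P<{part}>.+?)")
    return re.compile("^" + "".join(pieces) + "$")


def match_slots(utterance: str) -> Optional[Dict[str, str]]:
    """Return the slot values if the utterance fits a sample, else None."""
    text = utterance.lower().replace("\u2019", "'").strip(" .!?")
    for sample in PLAN_TRIP_INTENT["samples"]:
        match = sample_to_regex(sample).match(text)
        if match:
            return match.groupdict()
    return None


print(match_slots("I'd like to take a trip next Friday."))
print(match_slots("I want to go hiking next Friday."))
```

The first call fills only the date slot, while the second also fills the activity slot, which mirrors the extra information the user volunteered in the last sample.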

This applies even to answers to questions that Alexa asks. For example, if Alexa confirms with, “You wanted to go to Hawaii from Seattle next Friday, right?”, the user can respond in any of the following ways, and the skill can handle each one differently.

- No, I want to leave in 2 months.

- No, I’m leaving from Portland.

- Uh, yeah, that’s right.
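As a rough sketch of what "handled differently" can mean, the Python below sorts the three replies above into a confirmation, a changed departure city, or a changed date. This is an assumption-laden toy: in a real skill, Alexa's language understanding does this routing through intents and slots rather than regular expressions, and the function name and the `action` labels are hypothetical.

```python
import re
from typing import Dict


def interpret_confirmation(reply: str) -> Dict[str, str]:
    """Classify a reply to 'You wanted to go ... right?' (toy illustration)."""
    text = reply.lower().replace("\u2019", "'").strip(" .!?")
    # Ignore a leading filler word such as "uh" or "um".
    text = re.sub(r"^(uh|um|well)[, ]+", "", text)

    if re.match(r"^(yes|yeah|yep|that's right|correct)\b", text):
        return {"action": "confirm"}

    origin = re.search(r"leaving from (?P<city>[a-z ]+)$", text)
    if origin:
        return {"action": "change_origin", "value": origin.group("city")}

    date = re.search(r"leave (?P<when>(in|on|next) .+)$", text)
    if date:
        return {"action": "change_date", "value": date.group("when")}

    # Anything else: ask again rather than guess.
    return {"action": "reprompt"}


for reply in ("No, I want to leave in 2 months.",
              "No, I'm leaving from Portland.",
              "Uh, yeah, that's right."):
    print(reply, "->", interpret_confirmation(reply))
```

The design point is that a "no" is rarely just a "no": users often supply the correction in the same breath, so the skill should be ready to accept it instead of asking a follow-up question.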

Pro tip: Your skill doesn’t need to list out all possible utterances in order to capture the right intent, but the more examples that you have listed, the better the skill performs.

Plan How Alexa Responds

There are a number of best practices to consider when designing Alexa’s responses to customers. For example, a response should be brief enough to say in one breath. Longer responses tend to be more difficult to follow and answer. Just think of a verbose family member at your last get-together, never really pausing to let you get a thought in.

It’s also important to craft the response to sound like a real person. Use contractions; it’s OK! Don’t use too much jargon, though; it makes Alexa sound robotic.

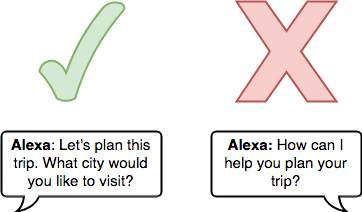

Don’t leave things too open-ended. If it’s unclear where to go next, users will not have a smooth experience using the skill. Prompt with guidance to coach the user on what to say next.

Consider adding variety and a little bit of humor to your responses. Speech Synthesis Markup Language (SSML) gives you several ways to do this: you can insert pauses, emphasize words, whisper, and more.
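As a small illustration of SSML, the sketch below assembles a response that uses a pause, an emphasized word, and a whisper. The tags shown (`speak`, `break`, `emphasis`, and `amazon:effect` with `name="whispered"`) are SSML tags that Alexa supports; the Python helper functions that build them are hypothetical conveniences, not part of any Alexa SDK.

```python
from xml.sax.saxutils import escape


def text(words: str) -> str:
    """Escape characters such as & and < so plain text is safe inside SSML."""
    return escape(words)


def pause(milliseconds: int) -> str:
    return f'<break time="{milliseconds}ms"/>'


def emphasize(words: str, level: str = "moderate") -> str:
    return f'<emphasis level="{level}">{escape(words)}</emphasis>'


def whisper(words: str) -> str:
    return f'<amazon:effect name="whispered">{escape(words)}</amazon:effect>'


def speak(*parts: str) -> str:
    """Wrap the already-built parts in the required <speak> root element."""
    return "<speak>" + " ".join(parts) + "</speak>"


ssml = speak(
    text("Sounds exciting!"),
    pause(300),
    text("When do you want to go,"),
    emphasize("and"),
    text("where are you leaving from?"),
)
print(ssml)
print(speak(whisper("I hear Hawaii is lovely this time of year.")))
```

Effects like these work best in small doses; a short pause before a question usually does more for clarity than heavy emphasis.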

Alexa should also prompt users when needed and provide conversation markers like first, then, and finally. And Alexa should have responses for the unexpected—for example, when Alexa doesn’t hear or understand the user.
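Both ideas can be sketched in a few lines of Python: one helper adds conversation markers to a list of steps, and another picks a prompt for when Alexa didn't hear or understand the user, offering more guidance on each failed attempt. The function names and the prompt wording are hypothetical, written only for this example.

```python
from typing import List


def with_markers(steps: List[str]) -> str:
    """Join steps into one response using first / then / finally."""
    if len(steps) <= 1:
        return "".join(steps)
    sentences = []
    for index, step in enumerate(steps):
        if index == 0:
            marker = "First"
        elif index == len(steps) - 1:
            marker = "Finally"
        else:
            marker = "Then"
        sentences.append(f"{marker}, {step}.")
    return " ".join(sentences)


# Each retry gives the user a little more coaching on what to say.
FALLBACK_PROMPTS = [
    "Sorry, I didn't catch that. Where would you like to go?",
    "I still didn't get that. You can say something like, "
    "I want to go hiking in Hawaii.",
    "I'm having trouble understanding. Try telling me just the destination.",
]


def fallback_prompt(failed_attempts: int) -> str:
    """Return a prompt for the given number of earlier failed attempts."""
    index = min(max(failed_attempts, 0), len(FALLBACK_PROMPTS) - 1)
    return FALLBACK_PROMPTS[index]


print(with_markers(["pick a destination", "choose a date",
                    "tell me where you're leaving from"]))
print(fallback_prompt(0))
print(fallback_prompt(1))
```

Escalating the guidance, instead of repeating the same error message, keeps the user from getting stuck in a loop.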

One fun activity to see if your voice design sounds natural is to role-play as Alexa. You need two other people to help with this. Have one person be your user, interacting with Alexa (you). Your job is to use your script and reply as Alexa. Have the other person act as a scribe to help note any responses that sound awkward and capture utterances from your user that you weren’t expecting.

This is only the tip of the iceberg when it comes to designing voice user interfaces, but it can put you on the right track toward building a skill that sounds natural. Next up, we talk more about the actual nuts and bolts that go into skill building.