Distribute Traffic with Elastic Load Balancing

Learning Objectives

After completing this unit, you’ll be able to:

- Describe the benefits of load balancing.

- Differentiate between Application Load Balancer, Network Load Balancer, Gateway Load Balancer, and Classic Load Balancer.

Imagine you’re a video blogger who uses Amazon Elastic Compute Cloud (Amazon EC2) instances in different regions to support your website. You recently posted a video of your dog doing something funny. Soon after, a local news channel shared the video and it went viral.

Now your website is getting a surge of requests from the local area while your instances in other regions have excess capacity. If only there were a way to use that spare capacity elsewhere to help with your local demand.

What Is Elastic Load Balancing?

Elastic Load Balancing automatically distributes incoming traffic across multiple targets in one or more Availability Zones, so that no single target is overwhelmed when traffic spikes. Examples of these targets include Amazon EC2 instances, containers, and IP addresses.

Elastic Load Balancing monitors the health of its targets. When it detects an unhealthy target, it stops sending traffic to that target and spreads the load across the remaining healthy targets. It also provides integrated certificate management and SSL/TLS decryption, so you can manage the load balancer's SSL settings centrally and offload CPU-intensive encryption work from your application.
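To make the "skip unhealthy targets" idea concrete, here is a minimal sketch in Python. It is a toy model, not how Elastic Load Balancing is implemented: the `Target` and `SimpleLoadBalancer` names are hypothetical, health is a flag you set by hand instead of the result of real health checks, and requests are spread with simple round-robin.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Target:
    """A hypothetical backend target, such as an EC2 instance or container."""
    name: str
    healthy: bool = True
    requests_served: int = 0


class SimpleLoadBalancer:
    """Toy load balancer: round-robin over targets currently marked healthy.

    Hypothetical illustration only; real ELB health checks and routing
    algorithms are managed by AWS.
    """

    def __init__(self, targets):
        self.targets = list(targets)
        self._counter = itertools.count()

    def healthy_targets(self):
        # Stand-in for periodic health checks: just read the flag.
        return [t for t in self.targets if t.healthy]

    def route(self):
        healthy = self.healthy_targets()
        if not healthy:
            raise RuntimeError("no healthy targets available")
        # Round-robin across whatever is healthy right now.
        target = healthy[next(self._counter) % len(healthy)]
        target.requests_served += 1
        return target.name


if __name__ == "__main__":
    lb = SimpleLoadBalancer([Target("a"), Target("b"), Target("c")])
    print([lb.route() for _ in range(3)])  # ['a', 'b', 'c']
    lb.targets[1].healthy = False          # "b" fails its health check
    print([lb.route() for _ in range(4)])  # only "a" and "c" appear
```

Once target "b" is marked unhealthy, it simply drops out of the rotation and the remaining targets absorb its share of requests.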

Elastic Load Balancing offers four types of load balancers.

- Application Load Balancer

- Network Load Balancer

- Gateway Load Balancer

- Classic Load Balancer

You can select the appropriate load balancer based on your needs.

Route HTTP and HTTPS Traffic with an Application Load Balancer

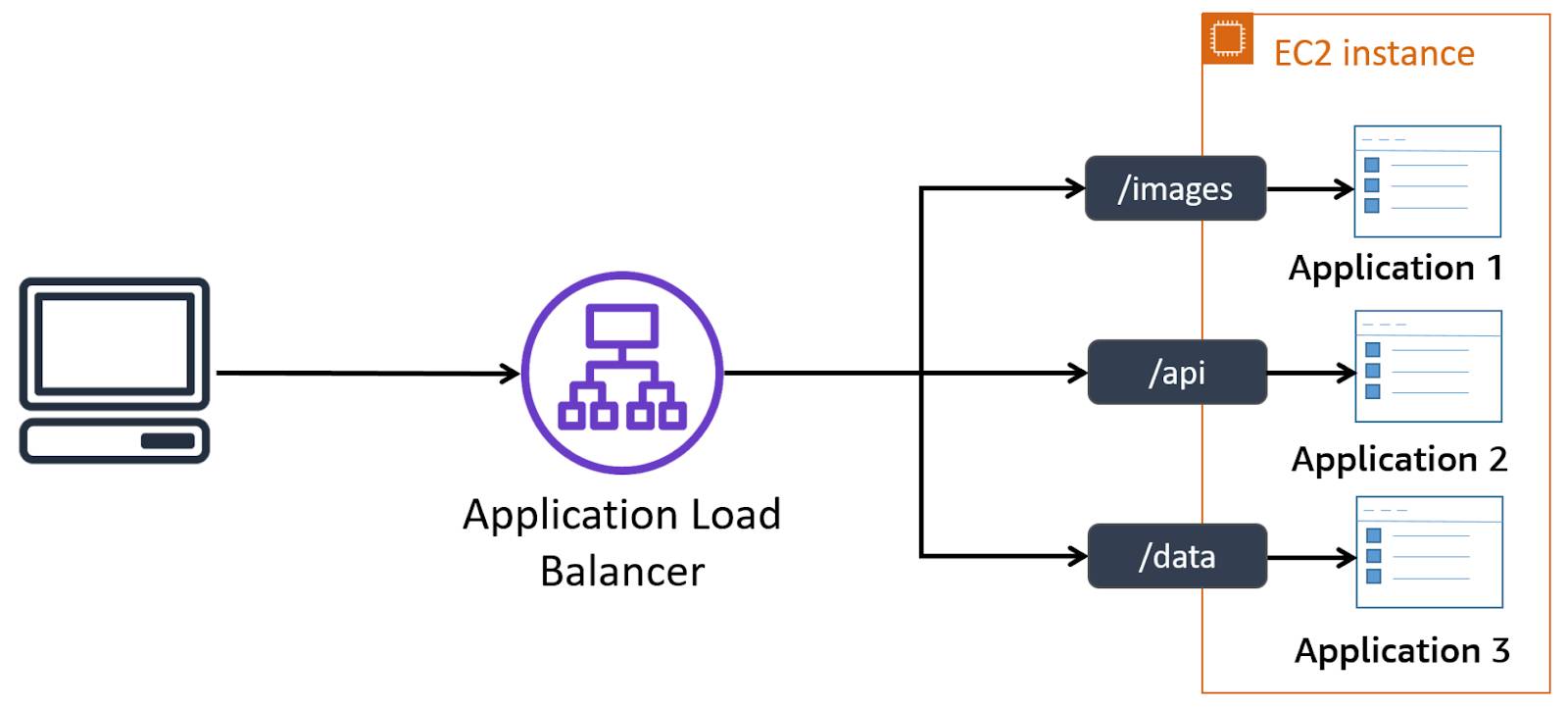

An Application Load Balancer can be used to make intelligent routing and load-balancing decisions for HTTP and HTTPS traffic. For example, suppose that you have broken up a monolithic legacy application into smaller microservices, hosted under the same domain name. You can use a single Application Load Balancer to route each request to the correct target service, for example based on the URL path or host name of the request.

The key difference between the Application Load Balancer and the other types of load balancers is that it operates at the application layer (layer 7). It inspects the contents of each HTTP or HTTPS request, such as the headers and URL path, and uses this information to decide which target should receive the request.
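Request-based routing can be pictured as a list of rules checked in priority order, where the first rule whose conditions match the request decides the target group and a default applies when nothing matches. The Python sketch below models that idea with host and path conditions. The `Rule` class, the `choose_target_group` function, and the service names are hypothetical, and the wildcard matching is a simplification rather than the exact Application Load Balancer matching behavior.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import Optional


@dataclass
class Rule:
    """Hypothetical listener rule; a lower priority number is checked first."""
    priority: int
    target_group: str
    host: Optional[str] = None          # e.g. "api.example.com"
    path_pattern: Optional[str] = None  # e.g. "/videos/*"

    def matches(self, host: str, path: str) -> bool:
        # Host names compare case-insensitively; paths case-sensitively.
        if self.host is not None and not fnmatchcase(host.lower(), self.host.lower()):
            return False
        if self.path_pattern is not None and not fnmatchcase(path, self.path_pattern):
            return False
        return True


def choose_target_group(rules, default_group, host, path):
    """Return the target group of the first matching rule, else the default."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(host, path):
            return rule.target_group
    return default_group


rules = [
    Rule(priority=10, target_group="video-service", path_pattern="/videos/*"),
    Rule(priority=20, target_group="comments-service", path_pattern="/comments/*"),
    Rule(priority=30, target_group="api-service", host="api.example.com"),
]

print(choose_target_group(rules, "web-frontend", "www.example.com", "/videos/dog.mp4"))
```

Because the load balancer reads the request itself, a single entry point under one domain can fan requests out to several microservices.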

Application Load Balancers support the WebSocket and HTTP/2 open standard protocols and provide additional visibility into the health of the target instances and containers. Websites and mobile apps running in containers or on EC2 instances benefit from the use of Application Load Balancers.

Scale by Millions with a Network Load Balancer

A Network Load Balancer can handle volatile workloads and can scale to millions of requests per second. Network Load Balancers preserve the source address of connections, making their use transparent to applications and allowing normal firewall rules to be used on targets.

Network Load Balancers route Transmission Control Protocol (TCP) and User Datagram Protocol (UDP) traffic to targets and keep each connection on the same target for the life of the connection. This makes them a great fit for Internet of Things (IoT), gaming, and messaging applications.
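One common way for a connection-level load balancer to keep a connection on the same target is to hash fields that stay constant for that connection. AWS documentation describes Network Load Balancers as using a flow hash over connection details such as the protocol, source and destination addresses, and ports. The Python sketch below hashes a 5-tuple to pick a target. It is a simplified, hypothetical illustration: the `Flow` and `pick_target` names and the addresses are made up, and the real algorithm is internal to AWS.

```python
import hashlib
from typing import NamedTuple, Sequence


class Flow(NamedTuple):
    """The 5-tuple that identifies one TCP or UDP connection."""
    protocol: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def pick_target(flow: Flow, targets: Sequence[str]) -> str:
    """Hash the flow's 5-tuple to choose a target (hypothetical sketch).

    Every packet of one connection carries the same 5-tuple, so every packet
    hashes to the same target for the life of the connection.
    """
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(targets)
    return targets[index]


targets = ["10.0.1.10", "10.0.2.10", "10.0.3.10"]
flow = Flow("TCP", "203.0.113.7", 50123, "198.51.100.1", 443)
first = pick_target(flow, targets)

# Later packets on the same connection land on the same target.
assert all(pick_target(flow, targets) == first for _ in range(100))
```

A plain modulo hash like this would remap many flows whenever the target list changes. A production load balancer also tracks established connections so that they stay put, which this sketch omits.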

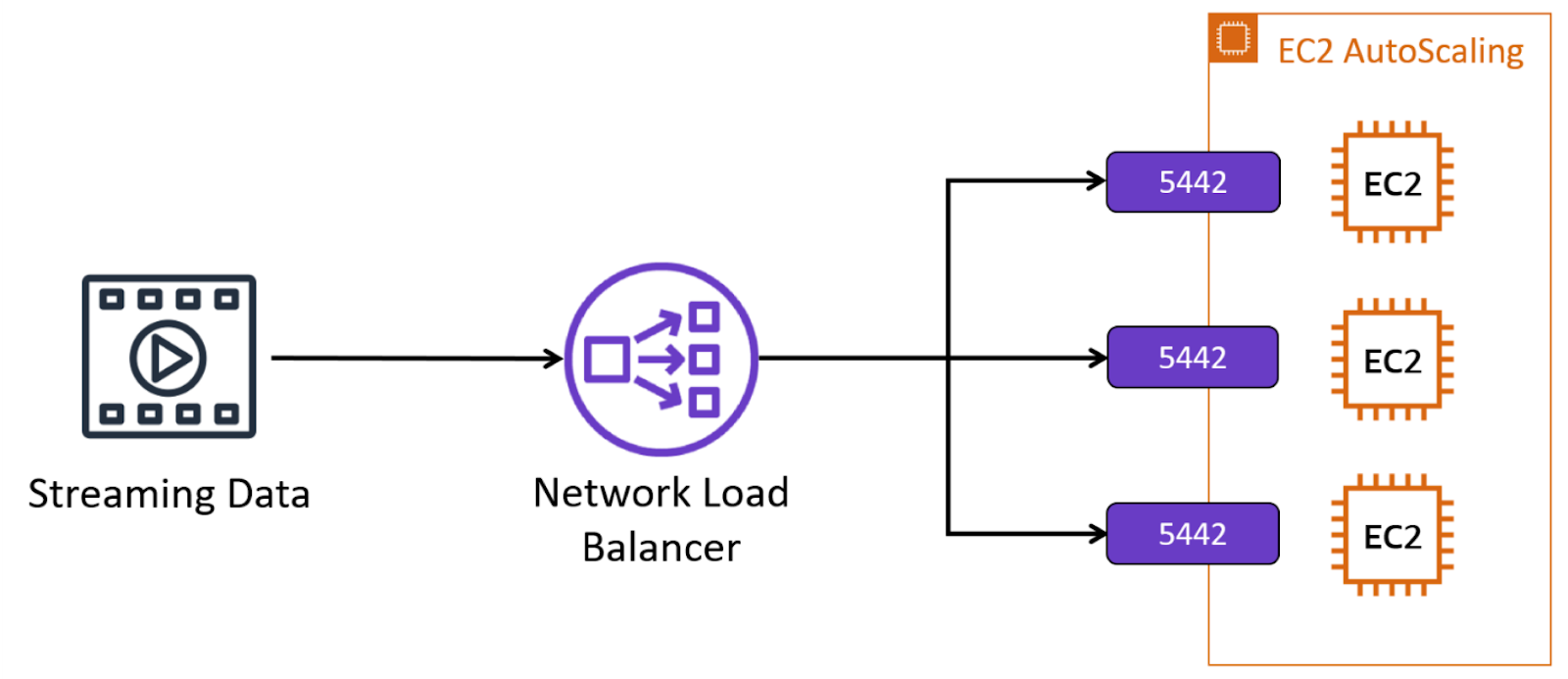

For example, you can use a Network Load Balancer to automatically route incoming web traffic across a dynamically changing number of instances. Your load balancer can act as a single point of contact for all incoming traffic to the instances in your Auto Scaling group.

You can automatically increase the size of your Auto Scaling group when demand goes up and decrease it when demand goes down.

To illustrate this concept, the diagram above shows the Auto Scaling group adding and removing Amazon EC2 instances. The Network Load Balancer ensures that the traffic for your application is distributed across all of your instances.
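The scale-out and scale-in loop described above can be modeled in a few lines. The Python sketch below is a hypothetical simplification: it sizes the group so each instance handles about a chosen number of requests per second (similar in spirit to target tracking scaling), clamps that to the group's minimum and maximum size, and registers or deregisters instances in a stand-in target group. The function and class names, the instance IDs, and the choice to remove the newest instances first are all assumptions for illustration, not Amazon EC2 Auto Scaling behavior.

```python
import math


def desired_capacity(total_requests_per_sec: float,
                     target_per_instance: float,
                     min_size: int,
                     max_size: int) -> int:
    """Enough instances that each handles about target_per_instance
    requests per second, clamped to the group's bounds (hypothetical)."""
    needed = math.ceil(total_requests_per_sec / target_per_instance)
    return max(min_size, min(max_size, needed))


class TargetGroup:
    """Hypothetical target group that the load balancer reads from."""

    def __init__(self):
        self.instances = []
        self._next_id = 0

    def scale_to(self, count: int) -> None:
        # Register new instances when scaling out ...
        while len(self.instances) < count:
            self._next_id += 1
            self.instances.append(f"i-{self._next_id:04d}")
        # ... and, in this sketch, deregister the newest ones when scaling in.
        while len(self.instances) > count:
            self.instances.pop()

    def share_per_instance(self, total_requests_per_sec: float) -> float:
        # With an even spread, each registered instance gets an equal slice.
        return total_requests_per_sec / len(self.instances)


group = TargetGroup()
for load in (300, 2500, 800):  # requests per second over time
    size = desired_capacity(load, target_per_instance=500, min_size=2, max_size=10)
    group.scale_to(size)
    print(load, size, round(group.share_per_instance(load)))
```

The load balancer never needs to know how many instances exist ahead of time. It spreads traffic across whatever is registered at the moment, which is what lets it act as a single point of contact for a group whose size keeps changing.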

Network Load Balancer features include:

- The ability to handle tens of millions of requests per second while maintaining high throughput at ultra-low latency, with no manual effort

- Routing connections to targets (for example, Amazon EC2 instances, containers, and IP addresses) based on IP protocol data

- API compatibility with an Application Load Balancer

- Optimization for handling sudden and volatile traffic patterns while using a single static IP address per Availability Zone

Distribute Incoming Traffic Across Multiple Resources with Gateway Load Balancer

Gateway Load Balancer distributes traffic across a fleet of virtual appliances, such as third-party firewalls and intrusion detection and prevention systems. It specializes in deploying, managing, and scaling these network security and analysis tools, which helps improve traffic inspection, threat detection, and the overall availability of resources.

Gateway Load Balancer operates at layer 3 (the network layer) of the Open Systems Interconnection (OSI) model. It connects the service provider VPC, which hosts the appliances, to consumer VPCs through Gateway Load Balancer endpoints, a type of VPC endpoint. Gateway Load Balancer listens for all IP packets across all ports and forwards traffic to the target group that's specified in the listener rule. This helps with scaling traffic based on listener rules, centralizing traffic management, and enhancing overall network security in the cloud.
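Stateful appliances such as firewalls need to see both directions of a flow, and Gateway Load Balancer is designed to keep a flow on the same appliance. The Python sketch below shows one illustrative way to get that property: a symmetric hash that sorts the two endpoints, so a request and its reply produce the same key and therefore select the same appliance. This is an assumption for illustration, not how AWS implements Gateway Load Balancer. The `flow_key` and `pick_appliance` functions and the appliance names are hypothetical.

```python
import hashlib
from typing import Sequence


def flow_key(protocol: str, ip_a: str, port_a: int, ip_b: str, port_b: int) -> bytes:
    """Build a direction-independent key for a flow (hypothetical sketch).

    Sorting the two endpoints means the request (A -> B) and the reply
    (B -> A) produce the same key.
    """
    low, high = sorted([(ip_a, port_a), (ip_b, port_b)])
    return f"{protocol}|{low[0]}:{low[1]}|{high[0]}:{high[1]}".encode()


def pick_appliance(key: bytes, appliances: Sequence[str]) -> str:
    """Map a flow key onto one appliance in the target group."""
    digest = hashlib.sha256(key).digest()
    return appliances[int.from_bytes(digest[:8], "big") % len(appliances)]


appliances = ["firewall-1", "firewall-2", "firewall-3"]  # hypothetical names

# Flows to any destination port are handled the same way; nothing here
# filters by port.
outbound = flow_key("TCP", "10.0.1.25", 41000, "93.184.216.34", 443)
inbound = flow_key("TCP", "93.184.216.34", 443, "10.0.1.25", 41000)

assert outbound == inbound
print(pick_appliance(outbound, appliances))
```

Because the outbound request and the inbound reply reach the same appliance, that appliance can track the full state of the connection.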

Classic Load Balancer

The Classic Load Balancer provides basic load balancing across multiple Amazon EC2 instances and operates at both the request level and connection level. It is the legacy Elastic Load Balancing tool intended for applications that were built within the EC2-Classic network.

Unlike the Classic Load Balancer, the Application Load Balancer and Network Load Balancer are purpose-built and offer more robust features to meet specific needs.

In the next unit, you learn about application integration services.

Additional Resources