Review Data Ingestion and Modeling Phases

Learning Objectives

After completing this unit, you’ll be able to:

- Examine how data is ingested into Data Cloud.

- Configure key qualifiers to help interpret ingested data.

- Apply basic data modeling concepts to your account.

Data Ingestion

In the overview, we indicated that data is first ingested from the source and stored in our system in a data lake object, but we didn’t get into the details of how you connect to and access data in the source system. Data is retrieved from the source by way of connectors, which establish communication between servers so that your data can be continually accessed. Data streams work with the connectors by dictating when and how often those connections are established.

The following connectors are available to Data Cloud users currently, with many more connectors planned in the future.

- Cloud Storage Connector

- Google Cloud Storage Connector

- B2C Commerce Connector

- Marketing Cloud Personalization Connector

- Marketing Cloud Engagement Data Sources and Connector

- Salesforce CRM Connector

- Web and Mobile Application Connector

Let's review each.

Cloud Storage Connector

This option creates a data stream from data stored on an Amazon Web Services S3 location. The connector accommodates custom data sets and you have the option to retrieve data hourly, daily, weekly, or monthly. As with custom data sets, the connector completes the import step, and you subsequently map the data to the model.

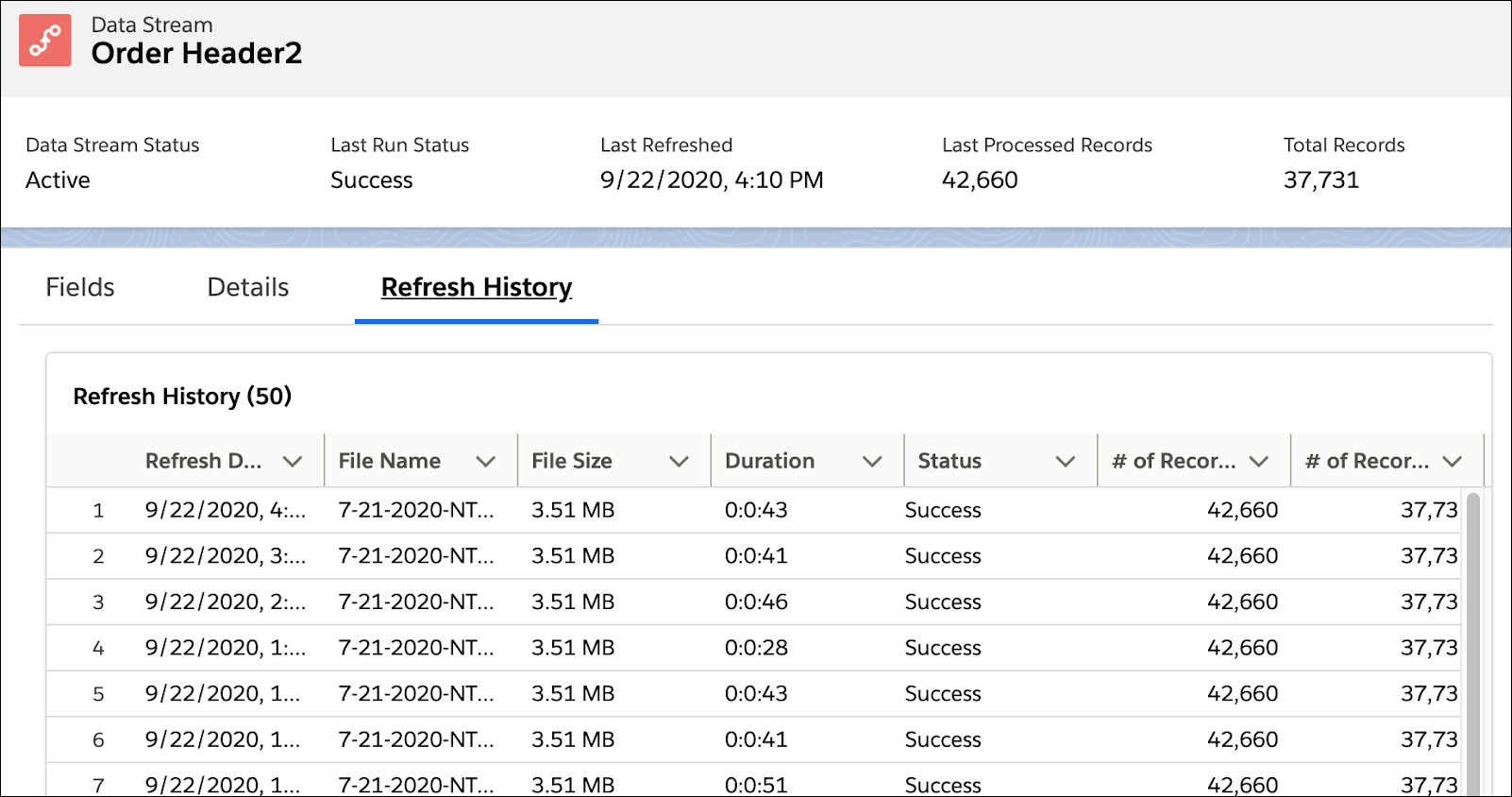

For any one of these connectors, the Refresh History tab is a good resource to validate that the data is being retrieved at the expected cadence and without errors. Should there be a retrieval error, the Status column (shown in the following image) provides more information about the error.

Google Cloud Storage Connector

This connector ingests data from Google Cloud Storage (GCS), an online file-based storage web service on Google Cloud Platform infrastructure. Data Cloud reads from your GCS bucket and periodically performs an automated data transfer of active objects to a Data Cloud-owned staging environment for data consumption.

B2C Commerce Connector

This connector ingests data from a B2C Commerce instance and creates a B2C Commerce data stream.

Marketing Cloud Personalization Connector

This connector helps you ingest user profiles and behavioral events from Marketing Cloud Personalization in Data Cloud. You can also use Marketing Cloud Personalization as an activation target. This connector requires that you create a starter bundle.

Marketing Cloud Engagement Data Sources and Connector

Data Cloud provides starter data bundles that give you predefined data sets for email and mobile (including Einstein Engagement data). Because these are all known system tables, these bundles take you all the way from importing the data set as-is to introducing it automatically to the data model layer. That means that within a few clicks, you are ready to get to work on your business use cases. The behavioral, engagement-oriented data sets retrieved by these connectors are refreshed hourly; the profile data sets are refreshed daily.

You can also access custom data sets via Marketing Cloud Engagement data extensions. For example, you can use this connector to ingest ecommerce or survey data that you’ve already imported into Marketing Cloud Engagement. Provision your Marketing Cloud Engagement instance in Data Cloud, after which you’ll see the list of data extensions that can be brought in. How often the connector retrieves the data depends on how you choose to export your data extension from Marketing Cloud Engagement: daily for Full Refresh, or hourly for New/Updated Data Only. Keep in mind that unlike the starter data bundles, which both import the data and model the data for you, with this connector you must complete the modeling step yourself, since the data set is custom.

Salesforce CRM Connector

Once you authenticate your Sales and Service Cloud instance, you can choose one object per data stream to connect to your Data Cloud account, either by selecting from a list of available objects or searching. The data is refreshed hourly, and once a week there’s also a full refresh of the data.

Web and Mobile Application Connector

This connector captures online data from websites and mobile apps. Data Cloud offers canonical data mappings for both web and mobile instances to facilitate ingestion, which you can then query and activate across both mobile and email.

Ingestion API

If you want to customize how you connect to other data sources, you can use the Ingestion API to create a connector, upload your schema, and create data streams into your org. These streams can update incrementally or in bulk, depending on how you configure your API requests.
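To make the difference between incremental and bulk updates concrete, here is a minimal Python sketch. It only prepares data and sends nothing. The function names, the `{"data": [...]}` wrapper, and the CSV layout are assumptions made for illustration, not the documented Ingestion API request format, so check the API reference before relying on them.

```python
import csv
import io
import json


def to_streaming_batches(records, batch_size):
    """Split records into small JSON payloads, one per incremental update.

    The {"data": [...]} wrapper is an assumed payload shape for illustration.
    """
    return [
        json.dumps({"data": records[start:start + batch_size]})
        for start in range(0, len(records), batch_size)
    ]


def to_bulk_csv(records, field_names):
    """Serialize all records into one CSV document for a single bulk load."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=field_names, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical order records from a custom source system.
orders = [
    {"order_id": "A1", "total": 20},
    {"order_id": "A2", "total": 35},
    {"order_id": "A3", "total": 12},
]

streaming_batches = to_streaming_batches(orders, batch_size=2)
bulk_csv = to_bulk_csv(orders, field_names=["order_id", "total"])
```

Small batches suit data that changes continuously, while a single file suits a periodic full load.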

Extend Your Data

Connectors fetch the original shape of the data by retrieving the full source field list, and you can create additional calculated fields if you choose. For example, if the connector retrieves an age field as a raw number and you want to band the data into age groups like 18–24 years, 25–34 years, 35–44 years, 45+ years, you can achieve that by adding a new formula field, derived from the age source field, to the data lake object. The formula is a combination of IF statements and AND and OR operators.
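The banding logic can be sketched in Python as a chain of IF checks combined with `and`. This is an illustration of the logic only, not Data Cloud formula syntax. The function name `age_band` and the "Under 18" fallback label are assumptions, since the example bands start at 18.

```python
def age_band(age):
    """Return an age-group label for a raw age, using chained IF/AND checks."""
    if age >= 18 and age <= 24:
        return "18-24 years"
    if age >= 25 and age <= 34:
        return "25-34 years"
    if age >= 35 and age <= 44:
        return "35-44 years"
    if age >= 45:
        return "45+ years"
    # Assumption: ages below the first band get their own label.
    return "Under 18"


# Hypothetical raw ages as they might arrive from the source field.
bands = [age_band(age) for age in (17, 18, 30, 44, 45)]
```

The same structure carries over to a formula field: each band is one IF condition, and the final branch catches everything that matched no earlier band.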

There are several formula functions you can use. They fall into four categories.

- Text manipulation, for example: EXTRACT(), FIND(), LEFT(), SUBSTITUTE()

- Type conversions, for example: ABS(), MD5(), NUMBER(), PARSEDATE()

- Date calculations, for example: DATE(), DATEDIFF(), DAYPRECISION()

- Logical expressions, for example: IF(), AND(), OR(), NOT()

Configure Key Qualifiers

Use fully qualified keys (FQKs) to avoid key conflicts when data from different sources is ingested and harmonized in the Data Cloud data model. Each data stream is ingested into Data Cloud with its specific keys and attributes. When multiple data streams are harmonized into a single data model object (DMO), the various keys can conflict and records can have the same key values. Fully qualified keys avoid these conflicts by adding key qualifier fields, which let Data Cloud interpret the data accurately. A fully qualified key consists of a source key, such as a contact ID from CRM or a subscriber key from Salesforce Marketing Cloud Engagement, and a key qualifier.

Configure key qualifier fields for all data lake object (DLO) fields that contain a key value. The field can be a primary key or a foreign key field. Let’s look at an example to see how harmonized data is interpreted with and without key qualifiers.

Let’s say you have two data streams with related data lake objects (DLOs) for profile data: Contacts DLO from Salesforce CRM and Subscribers DLO from Salesforce Marketing Cloud Engagement. The records for these DLOs are mapped to the Individual DMO.

Now, you want to join the Individual DMO to the Engagement DMO to identify individuals with a minimum of two clicks. After harmonization of data, the two data streams are mapped to the Individual DMO in Data Cloud. The Contacts DLO has three records, and the Subscribers DLO has two records. So the Individual DMO, which contains all the records from all the mapped data streams, has five records.

Marketing Cloud Engagement uses the Contact ID from the CRM org as its primary key (Subscriber Key). So, there are multiple records in the Individual DMO with the same value for Individual ID, which is the primary key field.

Next, let’s consider the Email Engagement DMO, which contains email engagement data that was ingested from Salesforce Marketing Cloud Engagement. The Individual DMO and Email Engagement DMO have a 1:N relationship through the Individual ID.

When you join the Individual DMO and the Email Engagement DMO, Data Cloud interprets the combined data set as the first row of Individual 2 having one click and the second row of Individual 2 having one click. In other words, Individual 2 is assumed to have two email clicks, even though Individual 2 actually has only one click action.

This misinterpretation can create an issue when this data is queried through segmentation, calculated insights, or the Query API. If you run a query and ask for individuals who have a minimum of two click actions, Individual 2 is returned in the response. This issue occurs even when profile unification is deployed, because engagement data is always joined to the Individual DMO.
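You can reproduce the double count with a small Python sketch. The record values are hypothetical: three Contacts records and two Subscribers records that reuse CRM Contact IDs, plus a single click event for Individual 2. The join matches on Individual ID alone, the way the unqualified join described above does.

```python
from collections import Counter

# Individual DMO after harmonization: five records, with IDs 1 and 2 duplicated.
individual_dmo = [
    {"individual_id": "1", "source": "Contacts DLO"},
    {"individual_id": "2", "source": "Contacts DLO"},
    {"individual_id": "3", "source": "Contacts DLO"},
    {"individual_id": "1", "source": "Subscribers DLO"},
    {"individual_id": "2", "source": "Subscribers DLO"},
]

# Email Engagement DMO: Individual 2 clicked exactly once.
email_engagement_dmo = [
    {"individual_id": "2", "action": "click"},
]


def count_clicks_by_id(individuals, engagements):
    """Join on Individual ID only and count the click rows per individual."""
    clicks = Counter()
    for person in individuals:
        for event in engagements:
            same_id = event["individual_id"] == person["individual_id"]
            if same_id and event["action"] == "click":
                clicks[person["individual_id"]] += 1
    return clicks


naive_clicks = count_clicks_by_id(individual_dmo, email_engagement_dmo)
at_least_two = sorted(i for i, n in naive_clicks.items() if n >= 2)
print(at_least_two)  # Individual 2 is listed, although only one click exists
```

Both rows for Individual 2 match the same click event, so the single click is counted twice.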

When you add key qualifier fields to all your DLO fields that contain a key value, either a primary key or a foreign key, Data Cloud interprets the data ingested from different data sources correctly. In this example, key qualifiers are added to the DLOs from Salesforce CRM and Marketing Cloud Engagement. The Individual DMO includes the key qualifier field indicating where the record was sourced from.

When the Individual DMO and Email Engagement DMO are joined, the table join uses both the foreign key field (Individual ID) and the key qualifier field (KQ_ID), which enables Data Cloud to interpret the data accurately.

When you run the same query for Individuals who have a minimum of two click actions, data for Individual 2 doesn’t meet the query criteria, and it isn’t returned in the query response. Use key qualifier fields in calculated insights (CI), segmentation, and Query API to identify, target, and analyze customer data accurately.
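Here is a minimal Python sketch of the qualified join, using hypothetical record values. The key qualifier values "CRM" and "MCE" are assumptions chosen for illustration. The join condition compares both the Individual ID and the key qualifier (the `kq_id` field here stands in for KQ_ID).

```python
from collections import Counter

# Individual DMO with a key qualifier that records where each row came from.
individual_dmo = [
    {"individual_id": "1", "kq_id": "CRM"},
    {"individual_id": "2", "kq_id": "CRM"},
    {"individual_id": "3", "kq_id": "CRM"},
    {"individual_id": "1", "kq_id": "MCE"},
    {"individual_id": "2", "kq_id": "MCE"},
]

# Email Engagement DMO: one click for Individual 2, sourced from Marketing Cloud Engagement.
email_engagement_dmo = [
    {"individual_id": "2", "kq_id": "MCE", "action": "click"},
]


def count_clicks_by_qualified_key(individuals, engagements):
    """Join on (Individual ID, key qualifier) and count click rows per key."""
    clicks = Counter()
    for person in individuals:
        person_key = (person["individual_id"], person["kq_id"])
        for event in engagements:
            event_key = (event["individual_id"], event["kq_id"])
            if event_key == person_key and event["action"] == "click":
                clicks[person_key] += 1
    return clicks


qualified_clicks = count_clicks_by_qualified_key(individual_dmo, email_engagement_dmo)
at_least_two = sorted(key for key, n in qualified_clicks.items() if n >= 2)
print(at_least_two)  # empty: no individual reaches two clicks
```

Only the Marketing Cloud Engagement row for Individual 2 matches the click event, so the click is counted once and the query for a minimum of two clicks returns nothing.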

Data Modeling

We mentioned that once all the data streams are ingested into the system, there’s a source-to-target mapping experience that utilizes the Customer 360 Data Model to normalize the data sources. For example, you can use the Customer 360 Data Model’s notion of an Individual ID to tag the source field corresponding to the individual who purchased a device (one data stream), called about a service issue (another data stream), received a replacement (yet another data stream)—and then review every event in the customer journey (yep, one more data stream). Data mapping helps you draw the lines between the applicable fields in the data sources to help tie everything together. Pay special attention to attributes like names, email addresses, and phone numbers (or similar identifiers). This information helps you link an individual’s data together and ultimately build a unified profile of the customer. But everything has a place and a connection to something else—you just need to draw the line.

The Customer 360 Data Model is designed to be extensible both by adding more custom attributes to an existing standard object and by adding more custom objects. When you utilize standard objects, relationships between objects light up automatically when the fields relating the two objects are both mapped. In a later unit, we walk you through an example of when you might need to define the relationship between objects in cases where you have added custom objects to your model.

Now that you’re familiar with the basic concepts of data ingestion and modeling, you’re ready to move on to concrete examples.