Learn About the Unique Risks of Artificial Intelligence

Learning Objectives

After completing this unit, you’ll be able to:

- Explain the need for managed trust in artificial intelligence (AI) systems.

- Compare AI security and AI safety.

- Discuss the implications of AI infrastructure for cybersecurity.

Engage with Artificial Intelligence in Different Settings

Sample Scenarios

Scenario 1: You're a curious early adopter, excited about purchasing your first autonomous vehicle. You sit behind the wheel, ready to experience pioneering technology at work. The car, boasting a spotless safety record, smoothly navigates the streets. But then, at a busy intersection, the car hesitates, seemingly confused by the traffic patterns. You grip the steering wheel, heart pounding, as you realize the AI isn’t responding as it should.

Scenario 2: You work at a high-profile social media company, monitoring the AI algorithms that have efficiently curated content for millions of users. You start to notice a disturbing trend: the AI seems to be amplifying divisive and harmful information, stifling diverse perspectives and fueling online conflicts. Despite your efforts to intervene, the AI’s behavior persists.

Scenario 3: You’re a patient anxiously awaiting a diagnosis. An AI-powered medical tool, recognized for its accuracy, analyzes your symptoms and medical history, but the results are confusing. You’re flagged as “low risk” despite experiencing alarming symptoms, leaving you less confident in your ability to interpret your body’s signals.

Real-World Risks of AI

These scenarios highlight the potential risks of AI, even when its past behavior has been dependable and trustworthy. AI systems, despite their sophistication, can still make mistakes or act in unexpected ways, often without clear warning signs. This is especially troubling when it comes to cybersecurity because we are conditioned to trust the systems designed to protect us and our organizations.

Consider real-world settings like hospitals, schools, and emergency services. We often put our trust in professionals like doctors, teachers, and firefighters to perform these important jobs. Similarly, a comprehensive global study, Trust in Artificial Intelligence, suggests that AI is more trusted when used in contexts related to healthcare and security than in areas such as hiring or making personalized recommendations.

When AI is deployed as a protective measure, as it is in cybersecurity tools or health tracking systems, people may instinctively trust its decisions, even if those decisions are inaccurate or potentially harmful. For instance, if an AI cybersecurity tool misinterprets data or acts outside its intended parameters, the consequences can be significant, up to and including a security breach.

The challenge lies in recognizing that the trust we inherently place in things designed to protect us can sometimes be misplaced. This doesn’t mean we should replace trust with distrust or fear. Instead, it means shifting from unquestioned trust to a more informed awareness, one that supports active engagement and continuous risk assessment and management. This balanced approach allows us to enjoy the benefits of AI while remaining mindful of its potential risks in both our personal lives and professional settings.

AI Safety vs. AI Security

The scenarios above highlight issues of AI safety, which focuses on ensuring that AI systems behave in ways that align with human values, ethical standards, and societal well-being. For example, in the autonomous vehicle scenario, the AI’s hesitation at a busy intersection raises concerns about the system’s ability to make reliable decisions. This could put the driver and others on the road at risk and erode public trust in AI technology. In the medical diagnosis scenario, the AI’s failure to correctly assess the patient’s symptoms demonstrates the potential risk to individual safety and to the trustworthiness of AI in critical healthcare situations.

The scenarios also reveal areas where AI security may be a concern. AI security involves protecting AI systems from malicious attacks, unauthorized access, and other cybersecurity threats that could compromise a system’s integrity and availability. For example, in the social media scenario, a malicious actor may have poisoned the AI model by injecting false or misleading examples into its training data, causing the AI to spread disinformation and fuel conflict. Such an attack can erode user trust and degrade the platform’s integrity.

Learn more about AI security in the Artificial Intelligence and Cybersecurity module, which outlines the various security risks to AI. That module explains how AI can expand the threat landscape by introducing new vulnerabilities that malicious actors may exploit. It also covers techniques that cybersecurity professionals can use to address these security risks and uphold ethical principles.
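To make the data-poisoning example from the social media scenario more concrete, here is a minimal Python sketch against a deliberately simple model. It assumes a hypothetical per-post “divisiveness score” between 0 and 1 and a toy filter that flags any post whose score meets a threshold, learned as the midpoint between the average scores of benign and harmful training examples. Real content-ranking systems are far more complex; the function names `train_threshold` and `is_flagged` and all data values are illustrative assumptions.

```python
from statistics import mean


def train_threshold(samples):
    """Learn a flagging threshold as the midpoint between the mean score of
    benign (label 0) and harmful (label 1) training examples."""
    benign = [score for score, label in samples if label == 0]
    harmful = [score for score, label in samples if label == 1]
    return (mean(benign) + mean(harmful)) / 2


def is_flagged(score, threshold):
    """Flag a post whose hypothetical divisiveness score meets the threshold."""
    return score >= threshold


# Hypothetical clean training data: (divisiveness score, label).
clean_data = [(0.1, 0), (0.2, 0), (0.3, 0), (0.7, 1), (0.8, 1), (0.9, 1)]

# An attacker injects highly divisive posts deliberately mislabeled as benign.
poisoned_data = clean_data + [(0.85, 0)] * 6

clean_threshold = train_threshold(clean_data)
poisoned_threshold = train_threshold(poisoned_data)

post_score = 0.7  # a divisive post the filter should catch
print(f"Clean threshold:    {clean_threshold:.3f}, flagged: {is_flagged(post_score, clean_threshold)}")
print(f"Poisoned threshold: {poisoned_threshold:.3f}, flagged: {is_flagged(post_score, poisoned_threshold)}")
```

In this toy setup, the mislabeled posts pull the benign average upward, which raises the threshold so that a post the clean model would flag now slips through. The attacker never touches the code, only the data, which is one reason data integrity is part of AI security.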

Although AI safety and AI security are defined differently, we consider both as we continue our discussion of risk management for AI systems. Now let’s take a look at the underlying technologies and processes that enable AI-powered systems to function.

Beyond Traditional Software

AI is a branch of computer science that aims to create systems capable of performing tasks that typically require human intelligence. This includes learning from experience, understanding natural language, recognizing patterns, making decisions, and even applying a degree of creativity. But why does AI require a different level of caution compared to traditional software?

Consider the browser you’re using to read this module, or the apps you’ve downloaded to stream videos or get directions in an unfamiliar city. These software tools are predictable and consistent: You press a key, click a button, or give verbal instructions, and the software executes the command.

AI is a different kind of software. For example, an AI-powered word processor may correct your spelling and grammar, remember what you’ve written, analyze the emotional tone of the document, and even suggest ways to make your writing more persuasive or empathetic, depending on your audience. It can do all of this because it learns and adapts through a “training” process.

Training is the process through which an AI model refines its internal parameters, and therefore the patterns it recognizes, based on the data it processes. When exposed to new data, whether through retraining, fine-tuning, or continuous learning, AI can adjust or change its behavior and predictions, sometimes in ways that surprise us. This unpredictability is both its defining feature and the reason it requires responsible oversight.
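As an illustration only, the Python sketch below contrasts a fixed, hand-written rule, like the predictable software described above, with a tiny model that learns from data. The model is a classic single-feature perceptron, chosen for brevity rather than because production AI systems work this way; the feature values, labels, and the names `fixed_rule` and `OnlinePerceptron` are hypothetical.

```python
def fixed_rule(x):
    """Hand-written rule: the same input always gets the same answer."""
    return 1 if x > 0 else -1


class OnlinePerceptron:
    """Single-feature perceptron; labels are +1 or -1."""

    def __init__(self, learning_rate=1.0):
        self.w = 0.0  # weight, learned from data
        self.b = 0.0  # bias, learned from data
        self.lr = learning_rate

    def predict(self, x):
        return 1 if self.w * x + self.b > 0 else -1

    def update(self, x, label):
        """Adjust parameters after a mistake; return True if an update happened."""
        if self.predict(x) != label:
            self.w += self.lr * label * x
            self.b += self.lr * label
            return True
        return False

    def fit(self, examples, max_epochs=100):
        """Repeat passes over the examples until a pass makes no mistakes."""
        for _ in range(max_epochs):
            mistakes = sum(self.update(x, label) for x, label in examples)
            if mistakes == 0:
                break


model = OnlinePerceptron()

# First batch of hypothetical training data.
model.fit([(2, 1), (-2, -1)])
before = model.predict(1)

# New data arrives in which an input of 1 should now be labeled -1.
model.fit([(1, -1), (3, 1)])
after = model.predict(1)

print("Fixed rule on input 1:", fixed_rule(1))
print("Model on input 1 before new data:", before)
print("Model on input 1 after new data:", after)
```

After the second batch, the same input receives a different prediction from the model, while the fixed rule’s answer never changes. No line of code was edited; only the data changed, which is why learned behavior needs ongoing oversight.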

A Tale of Two Software Engineers

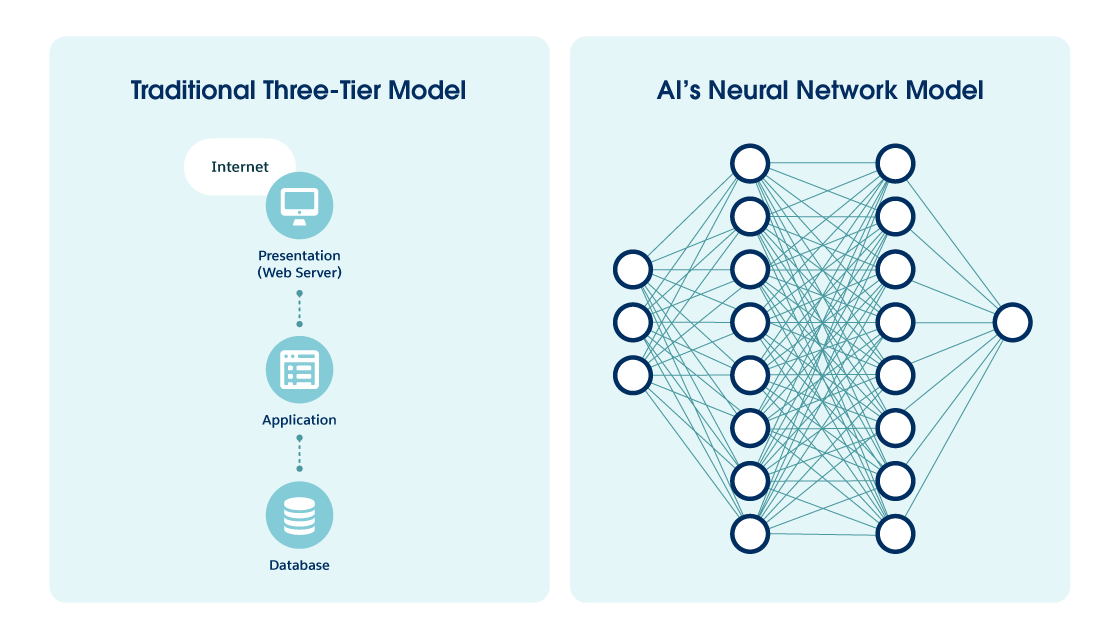

When it comes to securing software and the systems it runs on, understanding software architecture is crucial. The architecture of software, including the programming languages and tools used to create it, can significantly affect its vulnerability to attacks and influence the types of security measures needed to protect it. To explore how architecture differs between traditional and AI software, let’s meet two software engineers: Blake and Avery.

Blake is a talented software engineer who works for a software development company that produces business applications. Blake specializes in traditional software development, building the applications we use daily for business, communication, and entertainment. He focuses on creating software that is functional, reliable, and easy to use. Blake’s applications are built with three main components: the interface (what the user sees and interacts with), the logic (the rules that determine how the software behaves), and the database (where information is stored). Blake is meticulous in his work, using tools like code editors and debuggers to write clean, efficient code that follows strict rules. He is proficient in languages like Java, Python, and JavaScript. His highest priority is making sure his applications work as intended and that users have a consistent experience. His software changes only when he implements planned updates and security patches.

Now meet Avery, a talented AI engineer who works alongside Blake. Like Blake, Avery builds websites and software applications, but her creations feel more responsive and interactive. Her recommendation engines seem to already know your preferences, and her chatbots can engage in conversation. This is because Avery’s AI systems rely on complex networks of interconnected components and algorithms. In addition to “building blocks” like transformers and attention mechanisms, she uses large amounts of data to train her AI models. Rather than writing every rule explicitly, Avery relies on machine learning models that derive much of their behavior from data, so the quality of the training data and the learning process matter as much as the code itself. As a result, Avery’s AI software can change its behavior over time, not only through planned updates and patches.

Avery and Blake demonstrate the contrasting structures of AI and traditional software. Both approaches are vital in creating the digital tools we use every day. However, these differences in architecture are critical in understanding how to protect each type of software, a topic we will explore in detail in the next unit.

Implications for Cybersecurity

For cybersecurity professionals, understanding the structural differences between traditional software and AI is crucial for an effective risk management process. Just as a car mechanic’s knowledge of an engine’s inner workings leads to more accurate diagnosis and repair, a deeper understanding of how AI works internally empowers cybersecurity professionals to proactively identify and address potential vulnerabilities and threats, and to use AI to help protect their organizations.

The evolution from traditional to AI-powered software has driven a parallel transformation in cybersecurity tools. Traditional signature-based systems and endpoint detection tools relied on predefined patterns; when enhanced by AI and machine learning, these tools can learn to recognize new patterns of behavior on their own. This enables them to perform complex security tasks, such as detecting and neutralizing zero-day attacks in real time through techniques like behavioral analysis. While this shift brings exciting possibilities, it’s important to balance the excitement with a healthy dose of caution and a clear strategy for managing potential risks.
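As a rough illustration of this shift, the Python sketch below compares a signature check with a simple behavioral check. The signature check looks up a payload’s SHA-256 hash in a set of known-bad hashes, so it cannot recognize a payload it has never seen. The behavioral check flags activity that deviates sharply from a baseline using a z-score, a basic statistical technique standing in here for the learned behavioral models that AI-enhanced tools use. The metric (outbound connections per minute), the sample payloads, the baseline values, and the 3.0 cutoff are all hypothetical.

```python
import hashlib
from statistics import mean, stdev

# Hypothetical signature database: SHA-256 hashes of previously seen malware.
KNOWN_BAD_HASHES = {hashlib.sha256(b"known-malware-sample").hexdigest()}


def signature_match(payload: bytes) -> bool:
    """Return True only if the payload's hash is already in the database."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD_HASHES


def is_anomalous(observed: float, baseline: list[float], z_limit: float = 3.0) -> bool:
    """Flag an observation whose z-score against the baseline exceeds z_limit."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least 2 points
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > z_limit


# Hypothetical baseline: outbound connections per minute on a normal host.
baseline_connections = [4, 5, 6, 5, 4, 6, 5, 5]

# A never-before-seen payload that also opens an unusual number of connections.
new_payload = b"never-seen-before-variant"
observed_connections = 42

print("Signature match:", signature_match(new_payload))
print("Behavioral anomaly:", is_anomalous(observed_connections, baseline_connections))
```

The novel payload evades the signature lookup, but its unusual network behavior still stands out against the baseline. The same sensitivity cuts both ways: a legitimate but unusual spike would also be flagged, which is one of the risks that a clear management strategy has to account for.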

Sum It Up

In this unit, we explored the complex world of artificial intelligence, focusing on its protective role in cybersecurity and the risks that require careful management. We compared AI security with AI safety and looked at how AI development differs from traditional software development.

In the next unit, learn about the National Institute of Standards and Technology (NIST) Artificial Intelligence Risk Management Framework (AI RMF) and discuss how traditional security controls differ from those needed for AI systems.

Resources

- External Site: Data Institute: AI in Cybersecurity: Enhancing Protection and Defense

- External Site: Science & Technology: The Global Landscape of AI Security: A Guide for Policymakers in Developing Countries

- Trailhead: Get Started with Artificial Intelligence

- External Site: GeeksforGeeks: AI vs Traditional Programming–What’s the Difference?

- External Site: Cloud Security Alliance: AI Safety vs. AI Security: Navigating the Commonality and Differences

- PDF: Journal of Information Security: Systematic Review: Analysis of Coding Vulnerabilities across Languages

- External Site: Sightline: The First AI/ML Supply Chain Vulnerability Database