Explore Emerging Privacy Trends

Learning Objectives

After completing this unit, you’ll be able to:

- Describe the privacy risks associated with children’s data, biometrics, AdTech, data brokers, and AI.

- Explain how core privacy principles apply to new technologies.

- Identify current US efforts to address emerging privacy challenges.

The Next Frontier of Privacy

Technology is evolving faster than regulation. Every new device, app, or algorithm creates opportunities to improve our lives, but also opens up new questions about how our data is used.

Emerging privacy trends extend the same core principles you learned earlier — transparency, choice, minimization, and accountability — into fields like AI, biometrics, and digital advertising.

Let’s look at how those principles are being challenged and reinforced in today’s digital world.

Children’s Privacy

Children’s personal data is among the most sensitive data collected online. Kids may not fully understand what they’re sharing or how it can be used later. While the Children’s Online Privacy Protection Act (COPPA) sets a baseline for kids under 13, there’s growing momentum to go further. New state laws and policy proposals are calling for things like:

- Age-appropriate design standards, which require platforms to consider children’s best interests first.

- Treating all data from minors as sensitive, requiring stronger protection and limited use.

- Default privacy settings that minimize tracking and data sharing for children.

The goal is simple: create online experiences that protect children by default, not as an afterthought.
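To make “protective by default” concrete, here is a minimal Python sketch of how an app might choose a new account’s starting settings based on age. The `PrivacySettings` fields, the `default_settings` function, and the under-18 cutoff are hypothetical illustrations (COPPA itself covers children under 13). The sketch shows a design pattern, not what any particular law requires.

```python
from dataclasses import dataclass

# Hypothetical cutoff: COPPA covers under-13s, but some newer state
# proposals extend protections to all minors, so this sketch uses 18.
MINOR_AGE_CUTOFF = 18


@dataclass(frozen=True)
class PrivacySettings:
    public_profile: bool
    personalized_ads: bool
    precise_location: bool
    third_party_sharing: bool


def default_settings(age: int) -> PrivacySettings:
    """Pick a new account's starting settings (illustrative policy only)."""
    if age < MINOR_AGE_CUTOFF:
        # Protective by default: every tracking and sharing option starts off.
        return PrivacySettings(
            public_profile=False,
            personalized_ads=False,
            precise_location=False,
            third_party_sharing=False,
        )
    # Adult defaults here are a hypothetical business choice, not a legal rule.
    return PrivacySettings(
        public_profile=True,
        personalized_ads=True,
        precise_location=False,
        third_party_sharing=False,
    )


print(default_settings(12).personalized_ads)  # False
```

Starting from the protective values means nothing is tracked or shared for a minor unless a setting is deliberately changed later, instead of relying on someone to find and switch tracking off.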

Biometric Information

Biometric data, such as fingerprints, face scans, voice prints, and iris scans, is uniquely personal. Unlike a password, you can’t change your face or fingerprint if it’s compromised.

Several US states have enacted laws focused on this high-risk category:

- The Illinois Biometric Information Privacy Act (BIPA) requires notice and written consent before collection, and it grants individuals the right to sue for violations.

- Texas and Washington have similar laws regarding consent and security, but unlike BIPA, they are enforced by the state attorney general rather than through private lawsuits.

Key privacy concerns include:

- Consent: Was the individual informed, and did they agree before collection?

- Purpose: Is the biometric data used only for identity verification, or repurposed for surveillance or marketing?

- Bias and fairness: Do AI-based recognition systems work equally well for everyone, regardless of race or gender?

These laws remind organizations that biometric data requires the highest level of care and accountability.

AdTech and Online Tracking

We’ve all been there. You browse for a new pair of sneakers once, and suddenly that exact pair is following you across the internet. That isn't magic—that’s AdTech in action.

Advertising technology uses cookies, pixels, and device IDs to build profiles of people’s online behavior and deliver targeted ads. Tracking users across websites without clear consent raises serious privacy concerns.

Recent trends include:

- Browser limits on third-party cookies (Safari and Firefox, for example, restrict them by default).

- New state laws requiring opt-out mechanisms for targeted advertising.

- Industry frameworks for consent management and data minimization.
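One kind of opt-out mechanism is a signal sent automatically by the browser. Under the Global Privacy Control (GPC) proposal, participating browsers send the HTTP request header `Sec-GPC: 1`, and California and Colorado, for example, require businesses to honor certain opt-out preference signals like this one. The Python sketch below assumes request headers arrive as a plain dictionary and that a hypothetical `stored_opt_out` flag holds the user’s saved choice. It illustrates the idea and is not a compliance implementation.

```python
from typing import Mapping


def opted_out_of_targeted_ads(headers: Mapping[str, str], stored_opt_out: bool) -> bool:
    """Return True if the user has opted out through either channel."""
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    # A GPC-enabled browser sends "Sec-GPC: 1" with its requests.
    gpc_signal = normalized.get("sec-gpc") == "1"
    # Either an explicit saved choice or the browser signal is enough.
    return stored_opt_out or gpc_signal


def choose_ad_mode(headers: Mapping[str, str], stored_opt_out: bool) -> str:
    # "contextual" ads rely on the current page, not a cross-site profile.
    if opted_out_of_targeted_ads(headers, stored_opt_out):
        return "contextual"
    return "targeted"


print(choose_ad_mode({"Sec-GPC": "1"}, stored_opt_out=False))  # contextual
print(choose_ad_mode({}, stored_opt_out=False))                # targeted
```

Treating the browser signal and the saved preference as equivalent means a user who opts out once, by either route, is not profiled.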

For organizations, this means rethinking how to balance personalization and privacy while still building trust. In addition to how data is collected, lawmakers are also scrutinizing how choices are presented to users.

Dark Patterns

Not all privacy risks come from new types of data — sometimes the risk comes from how choices are presented. Dark patterns are design tricks or misleading interfaces that nudge people into sharing more data, making a purchase, or giving up their privacy rights unintentionally.

If you’ve ever struggled to find an opt-out button, encountered a confusing “Are you sure you want less personalized experiences?” prompt, or clicked through an overly complicated preferences flow, you may have seen a dark pattern in action.

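US regulators are taking dark patterns seriously. Several state privacy laws, including California’s and Colorado’s, specify that consent obtained through dark patterns does not count as valid consent. Choices must be clear, honest, and easy to use; otherwise, they aren’t meaningful choices at all.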

Data Brokers

Data brokers collect, analyze, and sell personal data from many sources—often without people knowing. The privacy challenge: most individuals have no idea who these companies are or how to opt out.

Some states now require registration and public disclosure:

- Vermont, California, Texas, and Oregon all maintain public data broker registries.

These laws force brokers out of the shadows by requiring them to register with the state and, in some cases, post clear privacy notices on their websites. The trend is clear: consumers should know who has their data and have the ability to control its use.

Artificial Intelligence (AI)

AI systems learn from large amounts of data to make predictions, classifications, or decisions. When that data includes personal or sensitive personal data, privacy principles may directly apply. Here’s how a few core privacy principles apply when using AI:

| Principle | AI Application |
|---|---|
| Transparency | Clearly disclose when AI is used and what data trains it. Explain in plain language how automated decisions are made. |
| Choice & Control | Give individuals options to opt out of their data being used to train AI models or to request human review of automated decisions. |
| Data Minimization | Collect and retain only data relevant to the AI’s purpose. More data isn’t always better data. |
| Accuracy & Fairness | Ensure datasets are representative and tested for bias to avoid unintended discrimination. |
| Accountability | Maintain human oversight and document AI governance processes to demonstrate responsible use. |

US government agencies have also published frameworks to guide trustworthy AI, such as the National Institute of Standards and Technology (NIST) AI Risk Management Framework, released in 2023. Initiatives like this reinforce the idea that privacy and AI ethics must work together.

Other Emerging Issues

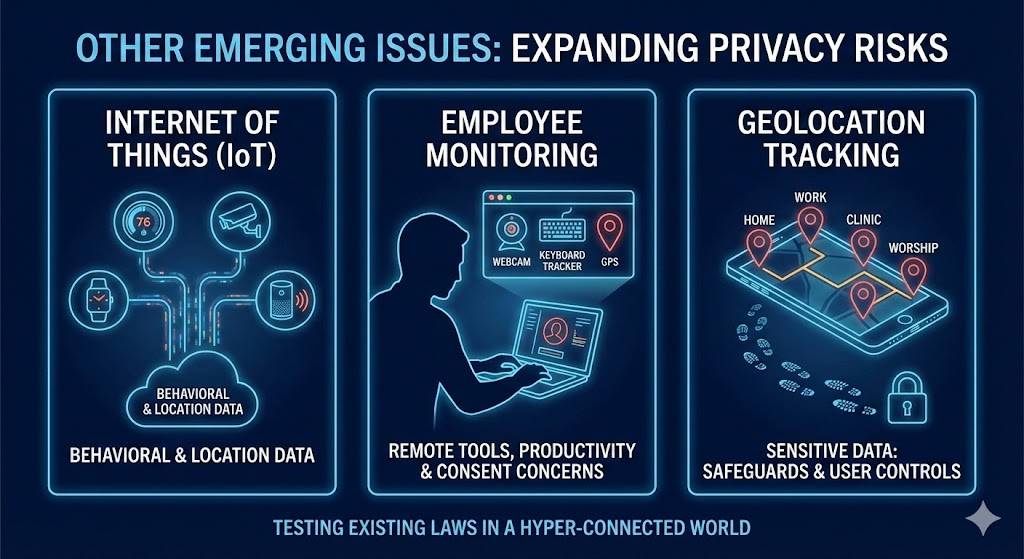

Privacy risks are expanding beyond traditional web data:

- Internet of Things (IoT): Smart home devices and wearables collect constant streams of behavioral and location data.

- Employee Monitoring: Remote-work tools can track productivity, location, and communications, raising concerns about transparency and consent.

- Geolocation Tracking: Location data is one of the most revealing types of personal data. It can show where someone lives, works, worships, seeks healthcare, or spends time, painting a detailed picture of a person’s movements and habits. Because of these risks, many privacy laws treat precise geolocation as sensitive personal data, requiring stronger safeguards, clear notice, and meaningful user controls.

Each of these areas tests how well existing laws protect privacy in a hyper-connected world.
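Data minimization offers one practical safeguard for location data: if a feature only needs to know roughly where someone is, store a coarsened location instead of exact coordinates. The Python sketch below rounds latitude and longitude to a chosen number of decimal places. The two-decimal default is an assumption for illustration; whether a given precision still counts as “precise geolocation” depends on the specific law (California, for example, uses a radius of 1,850 feet).

```python
def coarsen_location(lat: float, lon: float, decimals: int = 2) -> tuple[float, float]:
    """Round coordinates to reduce precision before storing them."""
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError("latitude or longitude out of range")
    # Fewer decimal places mean a larger grid cell and less revealing data.
    return round(lat, decimals), round(lon, decimals)


def latitude_step_km(decimals: int) -> float:
    # One degree of latitude spans about 111 km (it varies slightly);
    # a degree of longitude shrinks toward the poles, so it is not modeled here.
    return 111.0 * 10 ** -decimals


exact = (40.748817, -73.985428)
print(coarsen_location(*exact))        # (40.75, -73.99)
print(round(latitude_step_km(2), 2))   # 1.11
```

Coarsening at collection time, rather than after storage, means the exact coordinates never sit in a database where they could be breached or repurposed.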

Putting It All Together

Picture a popular, nationally available fitness app that combines workout tracking, social features, and personalized health analysis in one platform. The app's features and operational practices trigger multiple compliance requirements:

| Processing Activity | Trigger + Law |
|---|---|
| The phone's camera scans a user's face to log in to the app. | Collecting biometric data (a digital face scan) from Illinois users triggers the Illinois BIPA. |
| Users who completed a marathon receive targeted ads for running shoes. | Sharing data for targeted advertising triggers opt-out requirements under several state laws (CCPA, Colorado Privacy Act, and others). |
| A ‘Kids Mode’ makes exercising fun and gamified. | Processing data from users under 13 years old triggers COPPA. |
| A device monitors and stores heart-rate data. | Processing users’ health data triggers state-specific health laws (like Washington's My Health My Data Act). |
| The app uses AI to analyze user workouts and determine eligibility for life insurance discounts. | Using AI to make consequential decisions, such as insurance eligibility, triggers the Colorado AI Act (and similar state laws). |

Privacy in the modern world means understanding how these elements connect. The same core principles still guide every decision: transparency, choice, minimization, and accountability.

The Future of Privacy

Privacy is no longer a static concept. It’s a constant conversation between technology, law, and ethics. The US privacy landscape will continue to evolve through new state laws, federal debate, and AI governance initiatives.

Organizations that embed privacy into their design processes — often called privacy by design — will stay ahead of compliance and build the trust that sets them apart. After all, privacy isn’t just about protecting data — it’s about protecting people.

Resources

- External Website: Children's Online Privacy Protection Rule (COPPA)

- External Link: Salesforce FERPA and COPPA FAQ

- External Link: Illinois Biometric Information Privacy Act (BIPA)

- External Website: California Consumer Privacy Act (CCPA)

- Trailhead: California Consumer Privacy Act Basics

- External Website: California Privacy Protection Agency (CPPA) - Data Brokers

- External Website: Colorado AI Act

- External Website: NIST AI Risk Management Framework

- External Link: Washington My Health My Data Act