Refine Your Agents Using a Five-Step Testing Strategy

Learning Objectives

After completing this unit, you’ll be able to:

- Explain why it’s important to have a testing strategy before you begin testing your agent.

- Describe the five-step strategy for testing your agents.

Why Follow a Testing Strategy?

Agent testing is the foundation for releasing agents that are reliable and trustworthy. Agentforce Studio’s tools provide a variety of ways to make sure your agent handles the tasks you plan for it to do. As you learned in the last unit, thoroughly testing the many ways a user can engage with an agent can be a challenge. With so many variables at play, it’s wise to have a testing strategy in place before you begin. In this unit, you learn about testing your agent after you’ve refined it in Agentforce Builder.

A Five-Step Strategy to Test Your Agents

The AI Agent Testing Loop is a step-by-step strategy that guides you through fine-tuning your agents so they’re ready for your users. You create test scenarios, select evaluation metrics, and run automated tests. Then you validate the results and use the feedback to refine parts of your agent and improve its accuracy and performance.

Step 1: Identify test scenarios and create test data.

After you’ve manually tested a variety of user inputs in the Conversation Preview panel and revised your agent based on those responses, you’re ready to batch test your agent in Testing Center. The first step in batch testing is to identify the types of input you want to test. You can create and upload your own test scenarios by writing them in natural language, or you can ask Testing Center to use AI to generate test cases for you using your agent’s metadata and data it’s permitted to access. Whether you write the test scenarios yourself or ask AI to generate them, it’s helpful to know what makes a good test scenario, so let’s take a look.

When you planned your agent, you defined its scope and capabilities. For example, our reservation agent includes these topics and actions that define some of the jobs and tasks the agent will handle about reservations.

- The Reservation Management topic handles tasks like confirming reservations and itineraries, and making new or modifying existing reservations.

- The Create or Update Reservation action creates a new reservation if one doesn’t exist, or updates the guest’s record if a change is made to an existing reservation.

To come up with good test scenarios, review your agent’s topics in Agentforce Builder, including the Classification Description and Scope fields that describe the capabilities and parameters your agent should operate within. Also review each instruction that directs how the agent performs. Next, write (or generate in Testing Center) input that tests against these details to help make sure your agent acts reliably in each scenario. For example, for the Reservation Management topic that we described, the following could be among your test scenarios.

- I’d like to make a reservation.

- Do you have any openings in July?

- I need to change my reservation.

- I’d like to confirm my reservation.

To thoroughly test your agent, you need enough scenarios to cover all kinds of input. A good set of test inputs has these attributes.

- Volume: A sufficient number of test cases to ensure coverage of different scenarios and edge cases.

- Diversity: A wide range of inputs, contexts, and variations that test your agent’s adaptability across real-world use cases, including inputs that aren’t within the agent’s scope or that can challenge the agent’s guardrails.

- Quality: Well-defined, accurate, and relevant test cases aligned with the agent’s objectives.
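
Before you upload a set of test inputs, you can sanity-check it for volume and diversity. The following is a minimal Python sketch, not a Testing Center feature. The topic tags, the `out_of_scope` label, the minimum-cases threshold, and the `coverage_report` helper are all hypothetical names chosen for illustration.

```python
from collections import Counter

# Hypothetical test inputs, each tagged with the topic it is meant to exercise.
# "out_of_scope" is an assumed label for inputs the agent should decline or redirect.
test_inputs = [
    ("I'd like to make a reservation.", "Reservation Management"),
    ("Do you have any openings in July?", "Reservation Management"),
    ("I need to change my reservation.", "Reservation Management"),
    ("I'd like to confirm my reservation.", "Reservation Management"),
    ("What's the weather like in Paris?", "out_of_scope"),
]

MIN_CASES_PER_TOPIC = 3  # assumed threshold; choose one that fits your agent


def coverage_report(inputs, min_cases=MIN_CASES_PER_TOPIC):
    """Summarize volume and diversity for a list of (utterance, topic) pairs."""
    counts = Counter(topic for _, topic in inputs)
    unique_utterances = {utterance.strip().lower() for utterance, _ in inputs}
    return {
        # Volume: how many cases exercise each topic.
        "cases_per_topic": dict(counts),
        # Topics with fewer cases than the threshold may need more scenarios.
        "thin_topics": sorted(t for t, n in counts.items() if n < min_cases),
        # Diversity: repeated utterances add volume without adding coverage.
        "duplicate_count": len(inputs) - len(unique_utterances),
        # Diversity: at least one input should fall outside the agent's scope.
        "has_out_of_scope": counts.get("out_of_scope", 0) > 0,
    }


report = coverage_report(test_inputs)
print(report)
```

A check like this only measures volume and diversity. Quality still depends on a person confirming that each input is relevant to the agent’s objectives.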

Testing Center uses .csv files to hold its test scenarios. If you write your own test inputs, you’ll create your own .csv file, or if you ask AI to generate test inputs, you can download those tests in a .csv file and edit them. The Test an AI Agent in Agentforce Testing Center video shows you how Agentforce Testing Center works.

Step 2: Set evaluation parameters.

The test cases generated by Testing Center include settings you select as you’re guided through the four screens in the New Test workflow. After providing basic information about your test, like its name and the agent you’re testing, you can choose to include context variables that simulate information about the user or the conversation context. You also select how Testing Center evaluates the agent’s performance and quality. It’s a good idea to test all of the evaluation criteria options to ensure your agent is reliable and performing well.

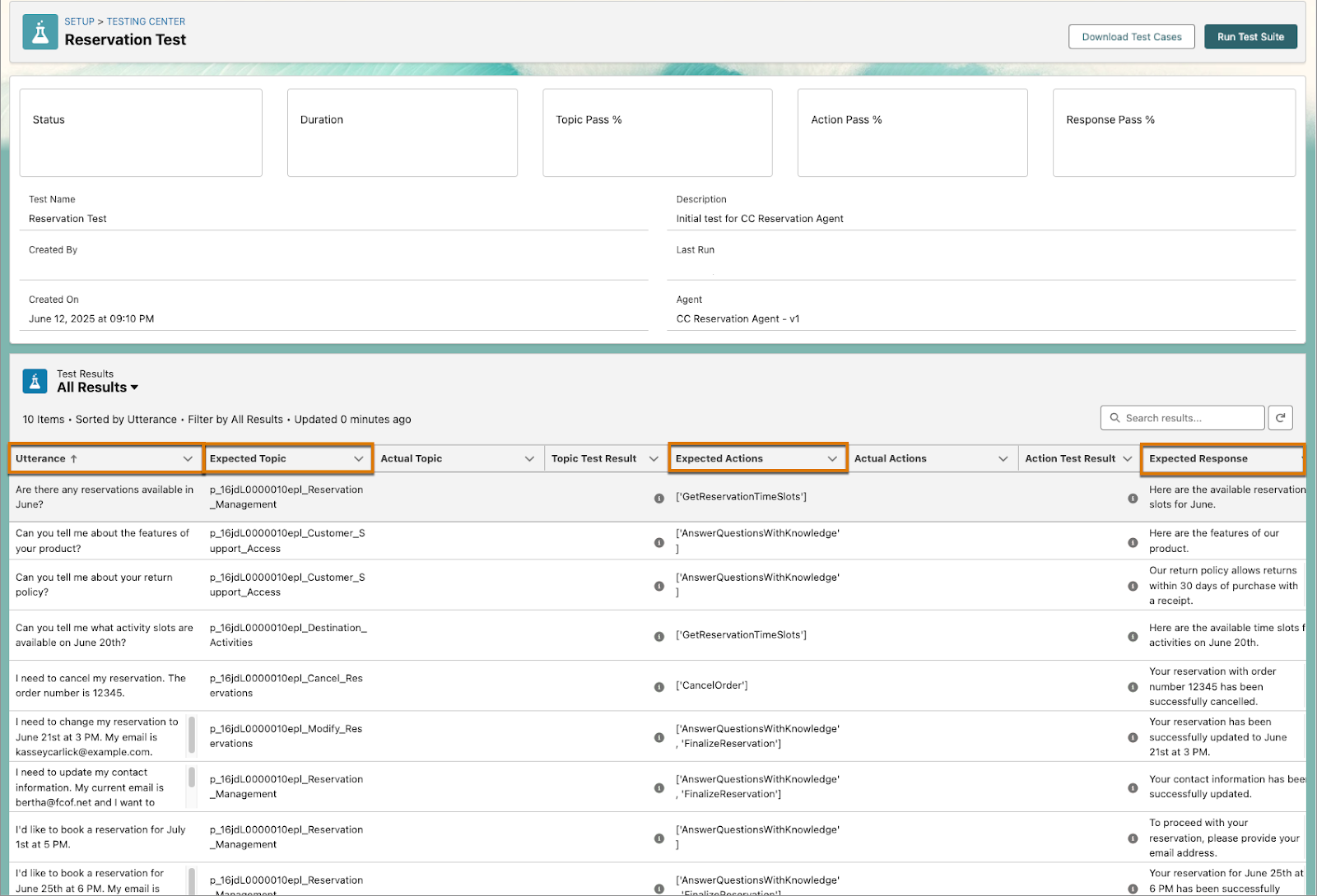

When you complete the New Test workflow and click Generate Test Cases, you see a list of tests that match the criteria you selected. If you uploaded a .csv file of test inputs that you wrote, you’ll see those in your list. A test case validates how the agent processes inputs, which are referred to as utterances. Each test case includes:

- Utterance: The input query to the agent

- Expected Topic: The relevant topic the agent should evaluate

- Expected Actions: The related actions the agent should execute

- Expected Response: The desired result described in plain language
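
If you write your own test cases, it can help to draft them in a structured form before saving them to a .csv file. The following Python sketch models the four fields listed above and round-trips them through CSV text. The column names, the semicolon separator for multiple actions, and the `AgentTestCase`, `to_csv`, and `from_csv` names are assumptions made for illustration. They are not the official Testing Center template, so check the template that Testing Center provides for the exact headers.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class AgentTestCase:
    """One test case with the four fields described above (names are assumed)."""
    utterance: str
    expected_topic: str
    expected_actions: str  # assumed convention: multiple actions separated by ";"
    expected_response: str


def to_csv(cases):
    """Serialize test cases to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(AgentTestCase)])
    writer.writeheader()
    for case in cases:
        writer.writerow(asdict(case))
    return buffer.getvalue()


def from_csv(text):
    """Parse CSV text produced by to_csv back into test cases."""
    reader = csv.DictReader(io.StringIO(text))
    return [AgentTestCase(**row) for row in reader]


cases = [
    AgentTestCase(
        utterance="I need to change my reservation, please.",
        expected_topic="Reservation Management",
        expected_actions="Create or Update Reservation",
        expected_response="The agent asks which reservation to change and updates it.",
    ),
]

csv_text = to_csv(cases)
print(csv_text)
```

The `csv` module quotes the utterance automatically because it contains a comma, which is one reason to generate the file with a library rather than by joining strings.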

Step 3: Run the tests and evaluate the results.

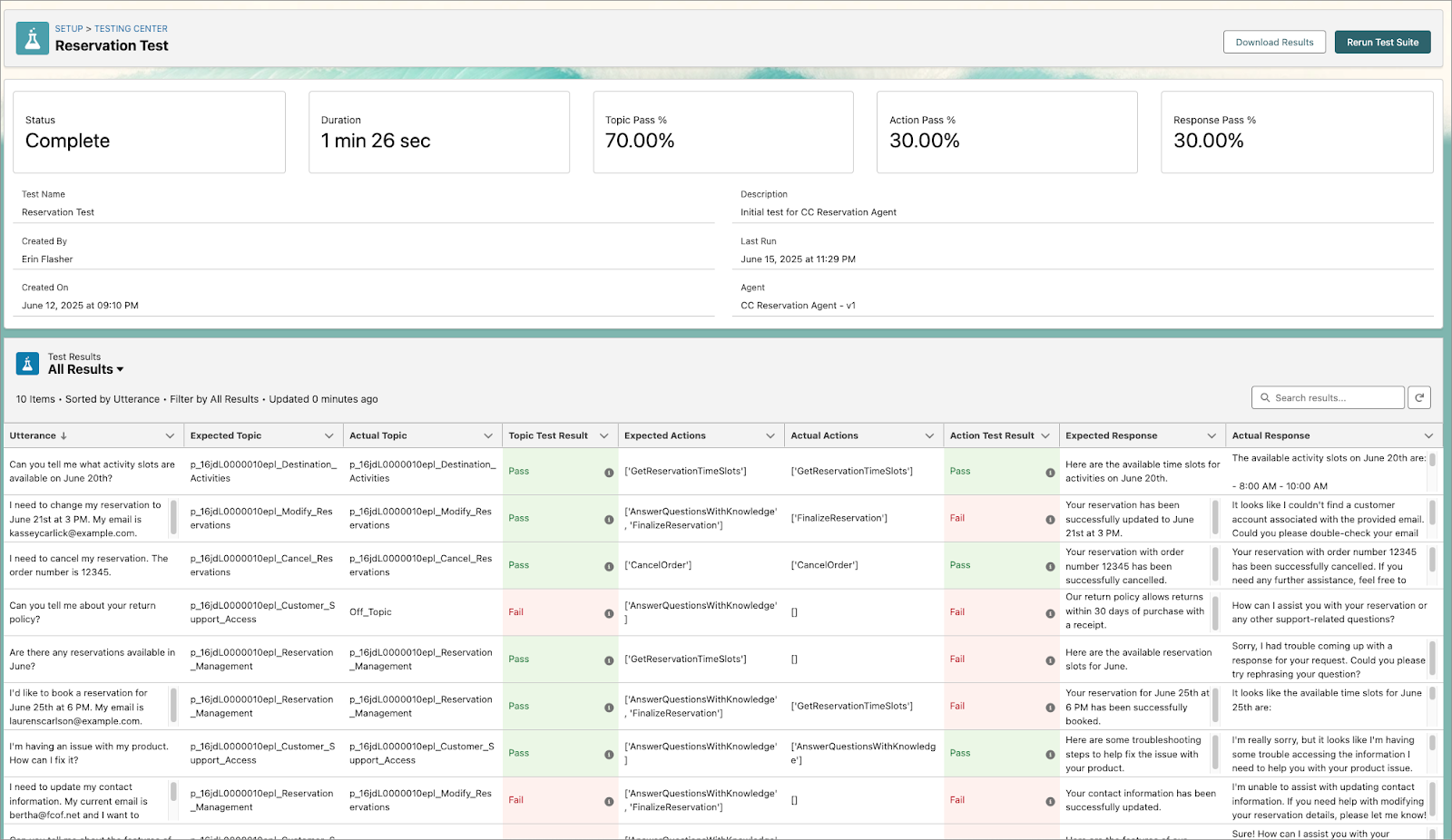

Once you’ve completed the New Test workflow, click Run Test Suite to run the tests and see how they performed. Review the test results in the Actual Topic, Topic Test Result, Actual Actions, Action Test Result, and Actual Response fields.
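
Testing Center reports these results for you. To illustrate the idea behind the topic and action results, here is a minimal Python sketch that compares expected and actual values for a few hypothetical result records. The record layout, the exact-match comparison, the "General FAQ" topic, and the `evaluate` and `summarize` helpers are assumptions for illustration and don’t describe how Testing Center scores tests internally.

```python
# Hypothetical result records; in practice these values come from Testing Center.
results = [
    {
        "utterance": "I'd like to make a reservation.",
        "expected_topic": "Reservation Management",
        "actual_topic": "Reservation Management",
        "expected_actions": ["Create or Update Reservation"],
        "actual_actions": ["Create or Update Reservation"],
    },
    {
        "utterance": "Do you have any openings in July?",
        "expected_topic": "Reservation Management",
        "actual_topic": "General FAQ",  # hypothetical wrong topic
        "expected_actions": ["Create or Update Reservation"],
        "actual_actions": [],
    },
    {
        "utterance": "I need to change my reservation.",
        "expected_topic": "Reservation Management",
        "actual_topic": "Reservation Management",
        "expected_actions": ["Create or Update Reservation"],
        "actual_actions": ["Create or Update Reservation"],
    },
]


def evaluate(result):
    """Compare expected and actual values using exact matching (an assumption)."""
    return {
        "utterance": result["utterance"],
        "topic_pass": result["expected_topic"] == result["actual_topic"],
        # Order of actions is ignored here; only the set of actions is compared.
        "action_pass": set(result["expected_actions"]) == set(result["actual_actions"]),
    }


def summarize(all_results):
    """Count passes and list the utterances that need a closer look."""
    evaluated = [evaluate(r) for r in all_results]
    return {
        "total": len(evaluated),
        "topic_passes": sum(e["topic_pass"] for e in evaluated),
        "action_passes": sum(e["action_pass"] for e in evaluated),
        "needs_review": [
            e["utterance"]
            for e in evaluated
            if not (e["topic_pass"] and e["action_pass"])
        ],
    }


summary = summarize(results)
print(summary)
```

The Actual Response field isn’t compared here because a plain-language response can’t be judged by exact matching.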

Step 4: Validate your results.

While generated tests handle many scenarios, it’s important to have a human review the responses to make sure they align with how the agent should respond and that the agent isn’t producing any toxic or unwanted results. Reviewing the input and responses at this step can also catch missed subtleties, like tone mismatches or context-specific inaccuracies.

Step 5: Review your results and iterate.

Remember that testing is an iterative process. You use test results to refine your topics, actions, and instructions until you reach your acceptable level of accuracy. Testing can also help reveal outdated data your agent has access to, or permissions that need adjusting.

Retest Your Agents

Agents evolve, and so does your business, so retesting for continued accuracy and trust is important. Many factors can affect the performance of your agents, including changes to the data your agent uses, changes to its permissions, updates to its topics, actions, or prompts, and changes to related product features or business processes. Continued testing helps your agent stay relevant as your business goals change.
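
One way to make retesting actionable is to compare the latest run against an earlier one and look for test cases that used to pass but now fail. The following Python sketch assumes you’ve recorded each run as a mapping from utterance to pass or fail. That format and the `find_regressions` helper are hypothetical and aren’t part of Testing Center.

```python
def find_regressions(previous_run, current_run):
    """Return utterances that passed in the previous run but fail in the current one."""
    return sorted(
        utterance
        for utterance, passed in previous_run.items()
        if passed and current_run.get(utterance) is False
    )


# Hypothetical pass/fail outcomes recorded from two test runs.
previous_run = {
    "I'd like to make a reservation.": True,
    "I need to change my reservation.": True,
    "Do you have any openings in July?": False,
}
current_run = {
    "I'd like to make a reservation.": True,
    "I need to change my reservation.": False,  # regressed after a change
    "Do you have any openings in July?": True,  # fixed since the last run
}

regressions = find_regressions(previous_run, current_run)
print(regressions)
```

A regression points you to what changed between runs, such as updated data, permissions, topics, actions, or prompts.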

Wrap Up

Testing is the foundation of building AI agents that are reliable, efficient, and trusted. By following a testing strategy, you ensure your Agentforce agents are trustworthy and helpful to your users.

Quiz Scenario

Maria is an Agentforce administrator at a large hotel chain, Global Stay Resorts. She’s been tasked with refining an AI agent designed to handle customer reservations. The agent has been manually tested in Agentforce Builder, and Maria is now ready to implement a more comprehensive testing strategy to ensure its reliability and accuracy before a full launch. She’s particularly focused on anticipating various user inputs and ensuring the agent’s responses align with the company’s brand voice and business processes.