Explore B2C Commerce Replication

Learning Objectives

- List the three instances a replication impacts.

- List the three ways you can run a replication.

- List three types of data replication that automatically trigger a cache refresh.

- Describe a replication rollback.

Introduction

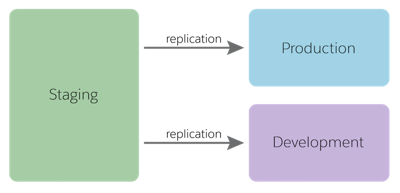

Linda Rosenberg is a new administrator at Cloud Kicks, a high-end sneaker company. Lately, she’s learned how to perform some essential data management tasks in Salesforce B2C Commerce. Now she wants to become an expert at pushing data and code changes from her company’s ecommerce staging instance to its production and development instances.

For example, her developer just created a more streamlined checkout process and added a bonus product discount feature that required changes to configuration settings in Business Manager. Also, Linda just imported product data from an external system to staging for a new spring line. The process she can use to move the code, configuration settings, and data between B2C Commerce instances is called replication.

A replication process is a collection of tasks that push defined data or code from a source instance to a target instance. The source instance is where the code or data currently resides, and the target instance is where you want it to end up. In replication, the source is always staging, and the target is either development or production.

PIGs and SIGs

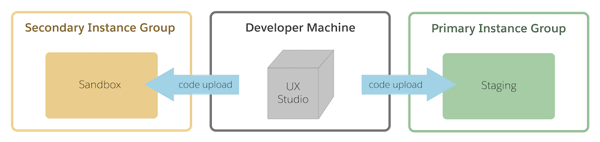

If you completed the Salesforce B2C Commerce Roles & Permissions module, you learned about B2C Commerce architecture. Let’s dive a little deeper into instances. When a merchant first implements a site, they typically receive nine instances per realm:

- Three instances on the primary instance group (PIG):

  - Staging

  - Development for testing

  - Production for deployment

- Five sandbox instances for code development on the secondary instance group (SIG). For scalability, merchants can have up to 47 sandboxes per realm.

- One demo instance.

You perform replication only on a PIG. That’s true for both data and code replication.

Data replication copies data, metadata, and files from staging to either the development or production instance. It functions at two levels.

- Global replication, which includes configuration information and data that applies to the entire organization.

- Site replication, which includes data belonging to one or more specified sites (such as product and catalog data, XML-based content components, and image files).

Code replication transfers code versions from staging to a development or production instance and activates them.

Typically, developers are responsible for moving code from a SIG to a PIG. When the developer finishes coding on their local machine, they upload their code to a sandbox or to staging. They might use Visual Studio Code to upload it, or they might use a code repository (such as Git) to synchronize the work between multiple developers. The code repository is then the source for a release to a staging instance. This type of development environment might have its own build process with an automated upload.

Replication Process

Linda learns that a replication involves the following steps.

- Run a replication from staging to development to test it.

- Test the development instance and view the logs.

- Ensure that search works and that the data is correct on the development system.

- Make necessary changes on the staging instance.

- Replicate from staging to development and test again until everything works great.

- Replicate from staging to production.

- Test everything on production, even if it replicated properly to development.

When Linda configures a data or code replication process, she can choose to run it immediately, schedule it to run later, or assign it to a job. What’s a job? A B2C Commerce job is a set of steps that perform long-running operations such as downloading an import file or rebuilding a search index. We talk about jobs in the Salesforce B2C Commerce Scheduled Jobs module.

A data replication is a long-running process that consists of two phases.

- Copy: The data is copied to the target system, but it isn’t visible there yet.

- Publish: This quick phase makes all changes visible at the same time.

She can configure data replication processes to recur daily, weekly, or monthly at a specific time. If you schedule a replication process to run at a later time, the process replicates the system state at run time and not when you created the process.
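The two phases, and the fact that a scheduled process reads the source when it runs, can be illustrated with a toy model. This is a minimal Python sketch, not B2C Commerce code: `Instance`, `copy_phase`, `publish_phase`, and `ScheduledReplication` are hypothetical names, and real replication runs inside the platform rather than in a script.

```python
"""Toy model of a two-phase data replication (all names are hypothetical).

Phase 1 copies data to the target but keeps it hidden; phase 2 publishes
everything at once. A scheduled process reads the source when it runs,
not when it was created.
"""
from dataclasses import dataclass, field


@dataclass
class Instance:
    name: str
    visible: dict = field(default_factory=dict)  # what the storefront sees
    pending: dict = field(default_factory=dict)  # copied but not yet published


def copy_phase(source: Instance, target: Instance) -> None:
    # Long-running step: data lands on the target but stays invisible.
    target.pending = dict(source.visible)


def publish_phase(target: Instance) -> None:
    # Quick step: all copied changes become visible together.
    target.visible = target.pending
    target.pending = {}


@dataclass
class ScheduledReplication:
    source: Instance
    target: Instance

    def run(self) -> None:
        # The source is read here, at run time, not when the process was created.
        copy_phase(self.source, self.target)
        publish_phase(self.target)


if __name__ == "__main__":
    staging = Instance("staging", {"shoe-1": 120})
    development = Instance("development", {"shoe-1": 100})

    copy_phase(staging, development)
    print(development.visible)  # {'shoe-1': 100}  copied but not yet visible
    publish_phase(development)
    print(development.visible)  # {'shoe-1': 120}

    production = Instance("production", {"shoe-1": 100})
    process = ScheduledReplication(staging, production)  # scheduled now
    staging.visible["shoe-1"] = 110                     # edited afterward
    process.run()
    print(production.visible)  # {'shoe-1': 110}  state at run time
```

Keeping copied data in a separate `pending` store is what lets the publish step flip everything at once, which mirrors why the publish phase is quick.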

A code replication transfers code versions from a staging instance to a development or production instance and then activates them.

What Else Is Happening?

During a replication, Linda must avoid making other updates. She quickly learns that it’s a bad idea to make manual edits in Business Manager on either the source or the target instance while a replication is running, because edits made during the process can compromise data consistency.

She also makes sure that no jobs are running on the target instance during replication and avoids data replications during a B2C Commerce standard maintenance window. If a recurring data replication process fails, it won’t run again automatically.

Page Cache Impact

Both code and data replication have page cache implications. Some replication tasks automatically invalidate and refresh the cache. Others don’t, so after running those, Linda must clear the cache manually if the changes need to appear on the storefront before the cached pages expire. Let’s see the steps she takes to clear the cache manually.

To access Business Manager, you must have a B2C Commerce implementation. In this module, we assume you are a B2C Commerce administrator with the proper permissions to perform these tasks. If you’re not a B2C Commerce administrator, that’s OK. Read along to learn how your administrator would take these steps in a staging instance. Don't try to follow our steps in your Trailhead Playground. B2C Commerce isn't available in the Trailhead Playground. If you have a staging instance of B2C Commerce, you can try out these steps in your instance. If you don't have a staging instance, ask your manager if there is one that you can use.

To access and invalidate the cache:

- Open Business Manager.

- Select Administration > Sites > Manage Sites > sitename.

- Click the Cache tab.

- Select the Staging instance.

- In the Cache Invalidation section, click Invalidate next to either Static Content Cache and Entire Page Cache for Site or Entire Page Cache for Site. The invalidation takes effect immediately.

Clearing the page cache can create a heavy load on the application servers, so Linda should clear it manually only when necessary and avoid doing so during high-traffic times. For example, if Linda makes some minor updates, she can wait for her scheduled nightly cache clearing instead of clearing the cache immediately. If the changes involve an important security feature or a critical product or promotion update, she’s more likely to clear the cache right away.

No matter when she clears the cache, the command can take up to 15 seconds to reach the web server, so she probably won’t see the update immediately. To ensure successful replications, she evaluates the scope of each change and keeps changes as small as possible.

For both automatic and manual cache refreshes, B2C Commerce delays refreshing all pages in a production instance for 15 minutes. This ensures load distribution across application servers. On non-production instances, page cache refreshes immediately.

Code Replication

The last step of the code replication process from staging to production automatically clears the cache.

Data Replication

The last step of the data replication process automatically invalidates and refreshes the cache by default, except in certain cases. Linda can configure a replication process to skip automatic cache clearing. However, she must do this with caution, because it can lead to inconsistent data on the storefront, which can be difficult to troubleshoot.

Let’s look at an example where Linda would choose to skip automatic cache clearing. B2C Commerce caches product description pages for 24 hours. Linda schedules cache clearing for the product page for the following night and then notices that several product prices are incorrect on the production instance. She asks a merchandiser to correct the prices in Business Manager on the staging instance, and then she replicates the changes to production using a process that skips page cache clearing because she already scheduled it.

Doing it this way keeps the price data in sync on staging and production and ensures that the correct prices appear in the basket (which isn’t cached). The storefront’s product description pages show the old, incorrect prices until the scheduled page cache clearing occurs.

Skipping the automatic cache refresh means that she accepts the trade-off of incorrect prices on the product description pages in exchange for avoiding the performance hit of a production cache refresh. What’s most important is that baskets on the production instance reflect correct prices in sync with the staging instance.
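The trade-off can be sketched as a tiny cache model. In this hypothetical Python example, `Storefront`, `replicate_prices`, `scheduled_cache_clear`, and the prices are illustrative assumptions, not B2C Commerce APIs. The point is only that the product description page keeps serving its cached price until the cache is cleared, while the uncached basket sees the replicated price right away.

```python
"""Toy model of skipping automatic cache clearing (all names are hypothetical).

Product description pages are served from a page cache; the basket always
reads the live price because baskets aren't cached.
"""


class Storefront:
    def __init__(self, prices):
        self.prices = dict(prices)  # live price data on production
        self.page_cache = {}        # product ID -> price rendered on the cached page

    def product_page_price(self, product_id):
        # Serve from the page cache if present; otherwise render and cache it.
        if product_id not in self.page_cache:
            self.page_cache[product_id] = self.prices[product_id]
        return self.page_cache[product_id]

    def basket_price(self, product_id):
        # Baskets aren't cached, so they always see the current data.
        return self.prices[product_id]

    def replicate_prices(self, new_prices, clear_page_cache=True):
        # Update live data; optionally skip clearing the page cache.
        self.prices.update(new_prices)
        if clear_page_cache:
            self.page_cache.clear()

    def scheduled_cache_clear(self):
        # Stand-in for the nightly scheduled cache clearing.
        self.page_cache.clear()


if __name__ == "__main__":
    shop = Storefront({"shoe-1": 99})
    print(shop.product_page_price("shoe-1"))  # 99 (page is now cached)
    shop.replicate_prices({"shoe-1": 129}, clear_page_cache=False)
    print(shop.product_page_price("shoe-1"), shop.basket_price("shoe-1"))  # 99 129
    shop.scheduled_cache_clear()
    print(shop.product_page_price("shoe-1"))  # 129
```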

These are some other page caching considerations.

| When you replicate... | B2C Commerce... |
|---|---|
| Site-specific data | Clears the page cache for the affected site, unless you are only replicating coupons, source codes, Open Commerce API settings, or active data feeds. |
| Global data | Clears the page cache for all affected sites, unless you are only replicating geolocations or customer lists. |
| Catalogs, sites, or price books | Automatically clears cache. |
| Promotions or static content | Does not automatically clear cache. |
| Catalogs | Selectively clears the page cache of affected sites, using the rules described in the following table. |

Catalogs are a special case, as follows.

| When you replicate... | B2C Commerce clears cache from... |
|---|---|
| All catalogs for all sites of an organization | All sites of the organization. |
| A single catalog that’s assigned to one or more sites | The sites to which the catalog is assigned. |
| A product catalog that isn't directly assigned to a site but serves as a product repository for one or more site or navigation catalogs | The sites, determined programmatically, that offer products from the product catalog. |
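The "unless you are only replicating..." rows of the first table and the catalog rules of the second table can be expressed as two small decision functions. This is a hypothetical Python sketch: the data-type labels, site IDs, and catalog IDs are illustrative, not Business Manager identifiers, and it assumes each site has a single assigned catalog.

```python
"""Sketch of two page cache rules described in the tables (hypothetical names).

Labels such as "coupons" or "storefront-us" are illustrative only; the
"catalogs", "promotions", and "static content" rows are not modeled here.
"""

SITE_EXCEPTIONS = {"coupons", "source codes", "open commerce api settings", "active data feeds"}
GLOBAL_EXCEPTIONS = {"geolocations", "customer lists"}


def clears_page_cache(scope: str, data_types: set[str]) -> bool:
    """True if replicating these data types clears the page cache."""
    if scope == "site":
        exceptions = SITE_EXCEPTIONS
    elif scope == "global":
        exceptions = GLOBAL_EXCEPTIONS
    else:
        raise ValueError(f"unknown scope: {scope!r}")
    # The cache is kept only when every replicated type is an exception.
    return not data_types <= exceptions


def sites_to_clear(catalog, site_catalogs, product_sources):
    """Return the sites whose page cache a catalog replication clears.

    catalog: ID of the replicated catalog, or None for "all catalogs".
    site_catalogs: site ID -> ID of the catalog assigned to that site.
    product_sources: catalog ID -> IDs of product catalogs it offers products from.
    """
    if catalog is None:
        return set(site_catalogs)  # all sites of the organization
    assigned = {site for site, cat in site_catalogs.items() if cat == catalog}
    if assigned:
        return assigned  # sites the catalog is directly assigned to
    # Unassigned product catalog: sites that offer products from it.
    return {
        site
        for site, cat in site_catalogs.items()
        if catalog in product_sources.get(cat, set())
    }


if __name__ == "__main__":
    print(clears_page_cache("site", {"coupons", "source codes"}))      # False
    print(clears_page_cache("site", {"coupons", "site preferences"}))  # True
    print(clears_page_cache("global", {"customer lists"}))             # False

    site_catalogs = {"us": "storefront-us", "eu": "storefront-eu"}
    product_sources = {"storefront-us": {"master"}, "storefront-eu": {"master", "eu-only"}}
    print(sorted(sites_to_clear(None, site_catalogs, product_sources)))             # ['eu', 'us']
    print(sorted(sites_to_clear("storefront-eu", site_catalogs, product_sources)))  # ['eu']
    print(sorted(sites_to_clear("eu-only", site_catalogs, product_sources)))        # ['eu']
```

Using a subset check captures the "only" in "unless you are only replicating": mixing a single non-exception type into the replication is enough to clear the cache.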

Best Practices

Linda has a pretty good handle on replication now. But before she sets up any replication processes for Cloud Kicks, she carefully studies the following best practices.

- Identify dependencies between different data replication groups. For example, if you have campaigns that use source codes and coupon codes, replicate source codes and coupons along with or prior to replicating campaigns.

- Always rebuild the search indexes and make sure that the process is complete before replicating them. When replicating search indexes, disable incremental and scheduled indexing, and stop other jobs. Replication fails when an index is being rebuilt or modified.

- Don’t run multiple replication processes at the same time.

- Limit the number of users who have privileges to perform data replication.

- Store static content in folder structures that don’t allow more than 1,000 files in the same folder. If you store more than 1,000 files in a folder, access and replication times increase significantly, even if no file has changed.

- Copy an existing replication process when possible, instead of creating a new process from scratch.

- Don’t edit cartridge paths or code directly on a production instance. This can cause unexpected behavior, such as multiple versions of pages. Always replicate code and preferences from staging to production.
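To check the 1,000-files guideline before replicating, you can scan a local copy of your static content. This Python sketch assumes the content tree is available on disk; the default `static` path and the `oversized_folders` helper are hypothetical. It uses only the standard library and doesn’t interact with Business Manager.

```python
"""Flag static content folders that exceed the 1,000-files-per-folder guideline.

Assumes a local copy of the static content tree; the default path is a placeholder.
"""
import os
import sys

MAX_FILES_PER_FOLDER = 1000


def oversized_folders(root: str, limit: int = MAX_FILES_PER_FOLDER) -> list[tuple[str, int]]:
    """Return (folder, file_count) for every folder holding more than `limit` files."""
    results = []
    for folder, _subdirs, files in os.walk(root):
        # Count only files directly in this folder; os.walk visits subfolders separately.
        if len(files) > limit:
            results.append((folder, len(files)))
    # Largest offenders first.
    return sorted(results, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "static"  # placeholder path
    for folder, count in oversized_folders(root):
        print(f"{count:>6} files  {folder}")
```

Run it as `python check_static.py path/to/static` (the script name is arbitrary); folders that print need to be split into subfolders.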

Roll Back a Replication

Sometimes you have to undo a replication. You can do this by running the same replication again with the replication type set to Undo. This restores the target instance to its previous state. However, you can only roll back the most recent data or code replication.

Data and code replications don’t affect each other's rollbacks. For example, if you run a data replication and then a code replication, you can still undo both replications.
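The rollback rules can be summarized in a small model. In this hypothetical Python sketch, `TargetInstance` keeps only the state from before the latest replication of each kind, so undoing data never depends on code, and vice versa. The model also assumes that once the most recent replication of a kind is undone, there is nothing further to undo for that kind; that is an interpretation of "only the most recent", not documented platform behavior.

```python
"""Toy model of replication rollback (all names are hypothetical).

Only the most recent replication of each kind ("data" or "code") can be
undone, and the two kinds are tracked independently.
"""


class TargetInstance:
    def __init__(self, data, code_version):
        self.state = {"data": data, "code": code_version}
        self.last_previous = {}  # kind -> state before the most recent replication

    def replicate(self, kind, new_value):
        # Remember only the state before the latest replication of this kind.
        self.last_previous[kind] = self.state[kind]
        self.state[kind] = new_value

    def undo(self, kind):
        # Assumption: after one undo, no earlier state is kept for this kind.
        if kind not in self.last_previous:
            raise RuntimeError(f"no {kind} replication to undo")
        self.state[kind] = self.last_previous.pop(kind)


if __name__ == "__main__":
    prod = TargetInstance(data="data-v1", code_version="build-1")
    prod.replicate("data", "data-v2")
    prod.replicate("code", "build-2")
    prod.undo("data")  # allowed even though a code replication ran afterward
    prod.undo("code")
    print(prod.state)  # {'data': 'data-v1', 'code': 'build-1'}
```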