Evaluate Your Impact

Learning Objectives

After completing this unit, you’ll be able to:

- Understand the basics of a few impact-evaluation methods.

- Avoid common traps and biases in data analysis.

Evidence and Evaluation

Up to this point, you’ve learned about planning for impact management, choosing indicators, and developing skills and a culture of continuous evidence building.

In this unit, you learn the basics of how to evaluate impact, avoid common biases, and choose the best evaluation method for your organization.

Impact Evaluation Methods

Whichever indicator you use to evaluate your outcomes, you must start from baseline data. Remember that you’re trying to measure change, so you need a starting data point to compare to what happens after your activities and programs.

Baseline data helps show changes over time and the extent of change. Using standard indicators or common benchmark data for large groups—for example, from national or regional government databases—can provide a good baseline to measure change. Check out Measure Your Strategy with Indicators in Salesforce Help to learn how to use Outcome Management to set baseline values on indicators in Salesforce.
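Measuring change from a baseline is simple arithmetic. Here's a minimal Python sketch that computes absolute and relative change for one indicator. The `IndicatorReading` class and the sample figures are hypothetical illustrations. They aren't part of the Outcome Management data model.

```python
from dataclasses import dataclass


@dataclass
class IndicatorReading:
    """Hypothetical indicator with a baseline value and a follow-up value."""
    name: str
    baseline: float
    follow_up: float

    def absolute_change(self) -> float:
        # Change in the indicator's own units (for a percentage, this is percentage points).
        return self.follow_up - self.baseline

    def relative_change(self) -> float:
        # Change as a fraction of the baseline; undefined when the baseline is zero.
        if self.baseline == 0:
            raise ValueError(f"{self.name}: baseline is zero, so relative change is undefined")
        return (self.follow_up - self.baseline) / self.baseline


# Made-up example: 40% of participants employed at baseline, 52% at follow-up.
reading = IndicatorReading("Participants employed (%)", baseline=40.0, follow_up=52.0)
print(reading.absolute_change())  # 12.0
print(reading.relative_change())  # 0.3
```

Reporting both numbers avoids confusion. A 12-point rise and a 30% rise describe the same change here, but readers can mistake one for the other.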

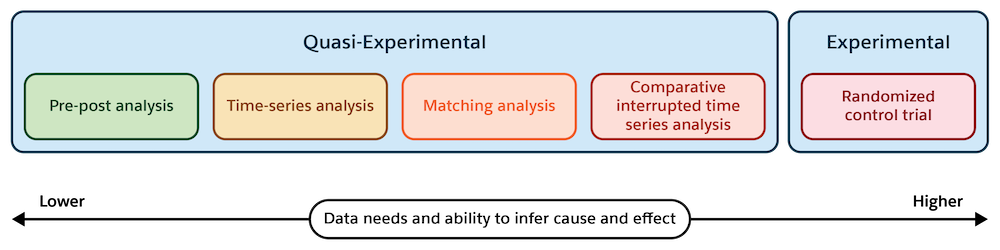

After you establish a baseline, you can measure change with two broad types of methods: experimental and quasi-experimental. What’s the difference?

As the graphic shows, experimental methods such as randomized controlled trials (RCTs) demonstrate a stronger cause-and-effect relationship between activities and outcomes. However, they’re time-consuming, expensive, and require specialized skills. Quasi-experimental methods are less resource-intensive and less scientifically rigorous. They often show the direction of a relationship between cause and effect rather than proving it.
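The core of an RCT is random assignment. Because chance alone decides who joins the program, a difference in average outcomes between the groups can more credibly be attributed to the program. The Python sketch below shows only that core idea. It assumes outcomes are stored in a dictionary keyed by participant ID, and its function names are hypothetical. A real trial also needs sample-size planning, ethical review, and significance testing.

```python
import random
from statistics import mean


def randomly_assign(participant_ids, seed=None):
    """Randomly split participants into a treatment group and a control group."""
    rng = random.Random(seed)  # seeded generator so the split can be reproduced
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def estimated_effect(outcomes, treatment, control):
    """Difference in mean outcome: treatment group minus control group."""
    return mean(outcomes[p] for p in treatment) - mean(outcomes[p] for p in control)


# Assign ten hypothetical participants before the program starts.
treatment, control = randomly_assign(range(1, 11), seed=42)
```

After the program, you would collect an outcome for every participant and pass them to `estimated_effect`.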

The lower cost of quasi-experimental methods such as pre-post, time-series, matching, and comparative interrupted time series analyses makes them a good starting place if you’re new to impact management.
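A pre-post analysis compares the same participants’ scores before and after a program. This minimal Python sketch assumes two lists of scores in the same participant order. It reports the average change and a paired t statistic, which is the mean change divided by its standard error. The function name and sample scores are hypothetical. Without a comparison group, a positive result shows direction, not proof that the program caused the change.

```python
import math
from statistics import mean, stdev


def pre_post_summary(pre, post):
    """Summarize paired before/after scores for the same participants."""
    if len(pre) != len(post):
        raise ValueError("pre and post need one score per participant, in the same order")
    if len(pre) < 2:
        raise ValueError("need at least two participants")
    diffs = [after - before for before, after in zip(pre, post)]
    mean_change = mean(diffs)
    sd = stdev(diffs)
    # Paired t statistic; left as None when every participant changed by exactly the same amount.
    t_stat = None if sd == 0 else mean_change / (sd / math.sqrt(len(diffs)))
    return {"n": len(diffs), "mean_change": mean_change, "t_stat": t_stat}


# Made-up scores for five participants on a 1-6 scale.
summary = pre_post_summary(pre=[3, 4, 2, 5, 3], post=[4, 6, 3, 5, 5])
print(summary)
```

For these sample scores, the mean change is 1.2 points. If you need a p-value, SciPy’s `scipy.stats.ttest_rel(post, pre)` runs the same paired test.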

Common Problems and Biases to Avoid

Whichever evaluation method you choose, data analysis can be hard work. Data problems and cognitive biases that affect outcome interpretation can make the work even more difficult.

Data problems stem from using invalid indicators or assuming they represent more than they really do. Consider the cause-and-effect relationship between your activities and outcomes, and look for narrow measurements that directly connect them. For example, imagine a program that advocates for better health outcomes through more and better handwashing. Instead of focusing on all long-term health outcomes, focus on decreases in disease transmitted through touch. You may not get your indicators perfectly right at first, but you can work to update them as you learn more. Remember, impact management is a process.

Also watch out for cognitive bias traps. Cognitive biases are systematic errors in reasoning that affect how anyone processes information. They can lead you to unintentionally draw conclusions that are easier to understand, or to bend evidence to fit your beliefs.

Here are some common cognitive biases to avoid.

- Confirmation bias causes you to focus on an existing belief and disregard conflicting evidence. If you believe your program is making a significant difference, you might ignore or discount evidence that indicates it isn’t.

- Recency bias causes you to overemphasize recent events and outcomes. For example, imagine that a group of participants recently completed a program with great success. The previous five groups had poor outcomes, and nothing changed about the program. Recency bias can cause you to overlook the bulk of the evidence, which points to a need to change the program.

- The framing effect influences decisions based on how information is presented. If you learn that a program has a 10% success rate, you may be more likely to support it than if you were told it has a 90% failure rate. Avoid framing information in an overly positive or negative way to influence how your activities are viewed.
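One practical guard against recency bias and the framing effect is to compute the same numbers every time. Show the newest group alongside all earlier groups, and put success and failure rates side by side. This is a minimal Python sketch that assumes cohorts are listed oldest to newest. The `Cohort` class, the `bias_check` function, and the figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cohort:
    """Hypothetical record of one group that completed the program."""
    label: str
    participants: int
    successes: int


def bias_check(cohorts):
    """Put the latest cohort next to earlier ones, with success and failure rates side by side."""
    if len(cohorts) < 2:
        raise ValueError("need the latest cohort plus at least one earlier cohort")
    *earlier, latest = cohorts  # assumes cohorts are ordered oldest to newest
    earlier_successes = sum(c.successes for c in earlier)
    earlier_participants = sum(c.participants for c in earlier)
    total_successes = earlier_successes + latest.successes
    total_participants = earlier_participants + latest.participants
    overall_success = total_successes / total_participants
    return {
        "latest_success_rate": latest.successes / latest.participants,
        "earlier_success_rate": earlier_successes / earlier_participants,
        "overall_success_rate": overall_success,
        "overall_failure_rate": 1 - overall_success,  # same fact, opposite framing
    }


# Made-up numbers mirroring the recency-bias example: five weak groups, then one strong one.
history = [Cohort(f"Group {i}", participants=20, successes=4) for i in range(1, 6)]
history.append(Cohort("Group 6", participants=20, successes=16))
for name, value in bias_check(history).items():
    print(f"{name}: {value:.0%}")
```

With these invented figures, the latest group succeeds at 80%. Across all six groups, however, the success rate is 30%, which is the same as a 70% failure rate.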

Can you ever completely eliminate biases like these from your work? No, we’re all only human. That said, you can help minimize their effect by staying aware of biases as you work with your data or by involving an impartial third party.

Right-Sized Analysis for Your Organization

Remember that impact management is an ongoing, comprehensive practice, not a one-size-fits-all solution. How you measure your outcomes depends on your organization’s mission, circumstances, and experience.

Yes, a scientific RCT is the gold standard for demonstrating cause and effect between your activities and outcomes, but it’s not a must-have for everyone. Choose the right level of analysis for your organization based on your scale, resources, reporting requirements, and other factors.

The key to effective impact management is dedication to gathering evidence and making data-based decisions to increase your impact. Take it step by step and use your theory of change, learning agenda, and strategic evidence plan as your guides. Collect the baseline data to get started, then try a pre-post analysis, implement changes, and follow the results.

As you grow with impact management, you’ll realize better outcomes and greater efficiencies. Best of all, you’ll make bigger changes in the world.