Evaluate a Model

Learning Objectives

After completing this unit, you’ll be able to:

- Explain what a model is and where it comes from.

- Describe why you use model metrics to understand model quality.

Models, Variables, and Observations

To review what you learned earlier in this module, a model is a sophisticated, custom mathematical construct based on a comprehensive statistical understanding of past outcomes. Einstein Discovery generates (trains) a model based on your data. Einstein uses the model to produce diagnostic and comparative insights. After you deploy a model into production, you can use it to derive predictions and improvements for your live data (more on that later!).

Variables

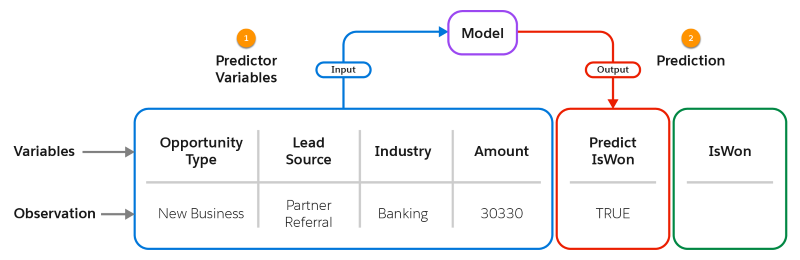

So let’s explore models further. First, it’s helpful to know that a model organizes data by variables. A variable is a category of data. It’s analogous to a column in a CRM Analytics dataset or a field in a Salesforce object. A model has two kinds of variables: inputs (predictor variables) and outputs (predictions).

Observations

Predictions occur at the observation level. An observation is a structured set of data. It’s analogous to a populated row in a CRM Analytics dataset or a record in a Salesforce object.

For each observation, the model accepts one set of predictor variables as input (1) and returns a corresponding prediction (2) as output. If requested, the model can return top predictors and improvements as well. In this figure, the actual outcome (IsWon) is not yet known.
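Einstein Discovery builds the model for you, and its internals aren't shown in this module. To picture the idea of "predictor values in, prediction out", here is a minimal Python sketch that treats a model as a function from one observation to a prediction score. It assumes a simple logistic-regression-style model with made-up weights; the variable names mirror the IsWon example, but the weights and the `predict` helper are hypothetical.

```python
import math

# Hypothetical model parameters for illustration only; Einstein Discovery
# learns its own parameters from your training data.
INTERCEPT = -0.4
WEIGHTS = {
    ("OpportunityType", "New Business / Add On"): 0.6,
    ("Industry", "Banking"): 0.3,
    ("LeadSource", "Partner Referral"): 0.5,
}


def predict(observation: dict[str, str]) -> float:
    """Turn one observation (variable name -> value) into a prediction score."""
    # Add the weight for each predictor value; values without a weight add 0.
    log_odds = INTERCEPT + sum(
        WEIGHTS.get((variable, value), 0.0) for variable, value in observation.items()
    )
    # The logistic function maps the log-odds to a score between 0 and 1.
    return 1.0 / (1.0 + math.exp(-log_odds))


# One observation: predictor values go in; the actual IsWon is not yet known.
opportunity = {
    "OpportunityType": "New Business / Add On",
    "Industry": "Banking",
    "LeadSource": "Web",
}
print(f"Prediction score for IsWon: {predict(opportunity):.4f}")
```

The observation is a plain dictionary so that each key plays the role of a variable (a column or field) and the whole dictionary plays the role of one row or record.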

Models Are Everywhere

Models are not unique to Einstein Discovery or Salesforce. In fact, predictive models are used extensively around the world—across industries, organizations, disciplines—and they’re involved in so many aspects of everyday life. Data scientists and other specialists apply their formidable talents to designing and building high-quality models that can generate very accurate and useful predictions.

A common challenge for many organizations, however, is that—once built—a well-crafted model can be difficult to implement into production environments and to integrate seamlessly with the operations they are designed to benefit. With Einstein Discovery, you can now quickly operationalize your models: Build them, deploy them into production, and then start getting predictions and making better business decisions right away using live data. You can even operationalize externally built models that you upload into Einstein Discovery.

What’s a Good Model?

Naturally, if you’re going to be basing business decisions on the predictions that your model produces, then you want a model that’s going to be really good at predicting outcomes. At a minimum, you want a model that does a better job at predicting outcomes than what you have in the absence of a model, which is simply random guessing that results in data-deprived decision making!

So, what makes a model good? Broadly speaking, a good model meets your solution requirements by producing predictions that are sufficiently accurate to support your outcome improvement goals. Simply put, you want to know how closely a model’s predicted outcomes match up with actual outcomes.

To help you determine how well your model performs, Einstein Discovery provides model metrics that visualize common measures of model performance. (Data scientists recognize these as fit statistics, which quantify how well your model’s predictions fit the real-world data.) Keep in mind that models are abstract approximations of the real world, so all models are inevitably inaccurate to some degree. In fact, a “perfect” model should raise your suspicions, not your hopes (more on this later).

When thinking about models, it’s helpful to consider the frequently cited statement attributed to statistician George Box: “All models are wrong, but some are useful.”

So let’s learn how useful your model can be.

Explore Model Performance

In Einstein Discovery, model performance reveals quality measures and associated details for a model. Model performance helps you evaluate a model's ability to predict an outcome. Model performance metrics are calculated using the data in the CRM Analytics dataset used to train your model. For every observation in the dataset that has a known (observed or actual) outcome, Einstein Discovery calculates a prediction and then compares the predicted outcome with the actual outcome to determine its accuracy.
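To make "compares the predicted outcome with the actual outcome" concrete, here is a minimal Python sketch of that comparison for a binary outcome. It assumes each row already has a prediction score, uses a hypothetical threshold of 0.5 to turn scores into TRUE/FALSE predictions, and skips rows whose outcome is unknown. The rows are invented, and simple accuracy is only one of many possible measures; Einstein Discovery reports its own set of metrics.

```python
from typing import Optional

# Invented training rows: (prediction score, actual IsWon).
# None marks an observation whose outcome is not yet known.
rows: list[tuple[float, Optional[bool]]] = [
    (0.91, True),
    (0.72, True),
    (0.55, False),
    (0.30, False),
    (0.48, True),
    (0.66, None),  # outcome unknown, so it can't be scored
]

THRESHOLD = 0.5  # hypothetical cutoff for predicting TRUE


def accuracy(rows: list[tuple[float, Optional[bool]]], threshold: float) -> float:
    """Share of rows with a known outcome where prediction matches actual."""
    known = [(score, actual) for score, actual in rows if actual is not None]
    correct = sum((score >= threshold) == actual for score, actual in known)
    return correct / len(known)


print(f"Accuracy on rows with known outcomes: {accuracy(rows, THRESHOLD):.2f}")
```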

Important: Einstein Discovery provides lots of different metrics to describe the model that was built for you—in fact, way too many to cover in this module. Don’t worry, you don’t need to know all—or even most—of them. We cover just the most important ones here.

By providing a comprehensive set of metrics, Einstein Discovery makes your model totally transparent, with many ways in which to evaluate performance from different perspectives. That way, you can assess model quality using the metrics that make the most sense for your solution, including those not covered in this unit.

Einstein Discovery also helps you interpret these metrics without needing to understand all the nuances and mathematics involved in calculating them. If you want to learn more about a particular metric or screen not covered in this unit, click the info bubble or Learn more.

Model Performance Overview

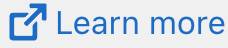

The Model Performance page is the first page you see when you open your model. Use it to assess your model’s quality.

Note: Numeric and binary classification use cases have different model metrics. In this module, we focus on the model metrics for maximizing IsWon, a binary classification use case.

The left panel (1) displays:

- Navigation to Model sections

- Data Insights and Bookmarks

- Links to other actions

The Path to Deployment panel (2) displays:

- Review Model Accuracy: For binary classification solutions, the Area Under the Curve (AUC) statistic is often where data scientists look first to assess model quality. Our goal is to have an AUC that is greater than 0.5 (random chance) and less than 1.0 (a perfect prediction, which usually indicates a data leakage problem). Our model has an AUC value of 0.8183, which is in the good range.

  Note: A comparable metric for numeric models is R², which measures a regression model’s ability to explain variation in the outcome. R² ranges from zero (the model explains none of the variation, no better than always predicting the average outcome) to one (a perfect model). In general, the higher the R², the better the model predicts outcomes.

- Set a Threshold: For binary classification models, the threshold is the cutoff that determines whether a prediction is classified as true or false based on the prediction score, which is a number between 0 and 1. In our example, if the prediction score is 0.4654 or higher, then the predicted outcome is TRUE. A deep dive into thresholds is outside the scope of this module. Suffice it to say that, depending on your solution requirements, you can tune your model to favor one outcome over another.

- Assess Deployment Readiness: Einstein Discovery performs a model quality check and surfaces detected issues here. In this example, there are no data alerts because you already resolved them in a previous unit.
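Einstein Discovery calculates AUC and applies the threshold for you. The following Python sketch only illustrates what those two numbers mean, using invented scores. AUC is computed here as the probability that a randomly chosen TRUE observation receives a higher prediction score than a randomly chosen FALSE one (ties count as half), and `classify` applies the 0.4654 threshold from the example. The data and helper names are hypothetical.

```python
from itertools import product

# Invented (prediction score, actual IsWon) pairs for illustration only.
scored = [
    (0.92, True), (0.81, True), (0.47, True), (0.35, True),
    (0.60, False), (0.44, False), (0.20, False), (0.10, False),
]


def auc(pairs: list[tuple[float, bool]]) -> float:
    """Share of (TRUE, FALSE) pairs in which the TRUE observation scores higher."""
    positives = [score for score, actual in pairs if actual]
    negatives = [score for score, actual in pairs if not actual]
    credit = 0.0
    for pos, neg in product(positives, negatives):
        if pos > neg:
            credit += 1.0
        elif pos == neg:
            credit += 0.5  # a tie is worth half a correctly ranked pair
    return credit / (len(positives) * len(negatives))


def classify(score: float, threshold: float = 0.4654) -> bool:
    """Predict TRUE when the score reaches the threshold, FALSE otherwise."""
    return score >= threshold


print(f"AUC: {auc(scored):.4f}")
print([classify(score) for score, _ in scored])
```

A model that ranked every TRUE observation above every FALSE one would score 1.0, and scores assigned at random hover around 0.5, which is why the useful range sits between the two. Comparing every pair is quadratic in the number of rows, which is fine for a toy example; larger datasets typically use an equivalent rank-based calculation.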

The Training Data and the Model panel (3) displays:

- Distribution of the Outcome Variable: Shows how many TRUE and FALSE observed values (actual outcomes) are in the training data.

- Top Predictors: Shows the predictor variables with the highest correlation to the outcome. In our sample data, Opportunity Type has the highest correlation, followed by Industry.
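Both charts in this panel summarize the training data. The Python sketch below, using invented rows, counts the TRUE and FALSE actuals and then ranks predictors by a deliberately simple stand-in for association: the gap between the highest and lowest win rate across a predictor's values. That spread measure and the helper names are assumptions for illustration only; Einstein Discovery uses its own statistics to identify top predictors.

```python
from collections import Counter, defaultdict

# Invented training rows for illustration; not the module's sample data.
rows = [
    {"OpportunityType": "New Business / Add On", "Industry": "Banking", "IsWon": True},
    {"OpportunityType": "New Business / Add On", "Industry": "Retail", "IsWon": True},
    {"OpportunityType": "New Business / Add On", "Industry": "Banking", "IsWon": True},
    {"OpportunityType": "New Business / Add On", "Industry": "Retail", "IsWon": False},
    {"OpportunityType": "Existing Business", "Industry": "Banking", "IsWon": False},
    {"OpportunityType": "Existing Business", "Industry": "Retail", "IsWon": False},
    {"OpportunityType": "Existing Business", "Industry": "Retail", "IsWon": True},
    {"OpportunityType": "Existing Business", "Industry": "Banking", "IsWon": False},
]

# Distribution of the outcome variable: how many TRUE and FALSE actuals.
distribution = Counter(row["IsWon"] for row in rows)


def win_rate_by(rows: list[dict], variable: str) -> dict:
    """Fraction of rows with IsWon == True for each value of `variable`."""
    totals, wins = defaultdict(int), defaultdict(int)
    for row in rows:
        totals[row[variable]] += 1
        wins[row[variable]] += int(row["IsWon"])
    return {value: wins[value] / totals[value] for value in totals}


def spread(rows: list[dict], variable: str) -> float:
    """Crude association score: gap between the best and worst win rate."""
    rates = win_rate_by(rows, variable).values()
    return max(rates) - min(rates)


ranking = sorted(["OpportunityType", "Industry"], key=lambda v: spread(rows, v), reverse=True)
print(dict(distribution))
print(ranking)
```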

Prediction Examination

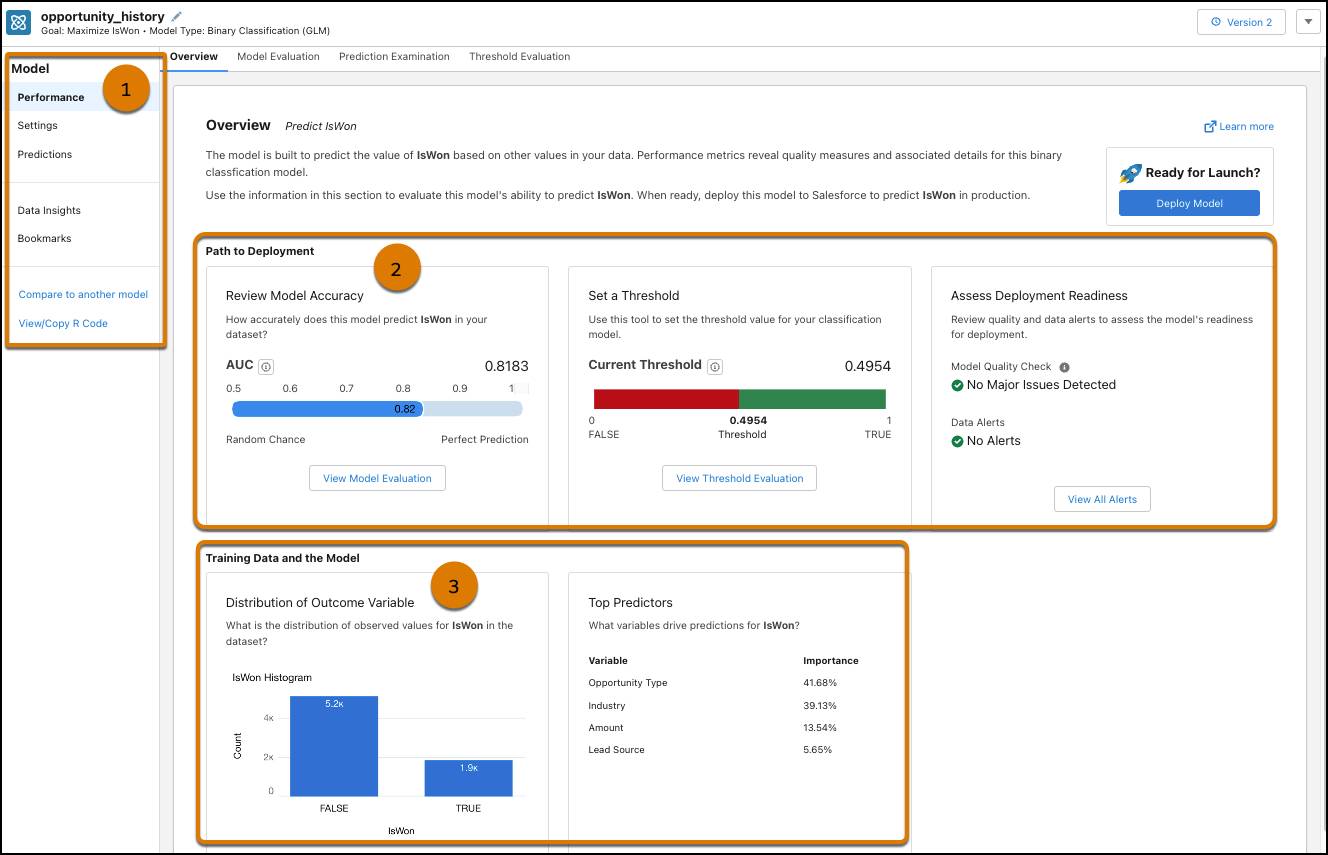

Click the Prediction Examination tab.

The Einstein Prediction panel on the right compares, for the selected row in the training data, the predicted outcome with the actual outcome, as well as the top factors that contributed to the predicted outcome. Click any row to update this panel.

This screen is like a road test: It’s a helpful preview of how the model would predict outcomes after it’s deployed. AUC provides an aggregate measure of the model, but this screen lets you drill down and analyze your model’s predictions interactively.

Note: Einstein Discovery takes a random sampling of the data in the dataset, so the data on your screen will differ from this screenshot.
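Each row on the Prediction Examination tab pairs a prediction score and predicted outcome with the actual outcome and the factors that pushed the score up or down. The Python sketch below shows one way such a drill-down could work for a simple additive (logistic-style) model, where each predictor's contribution is just its weight. The weights, the 0.5 threshold, and the `examine` helper are hypothetical; Einstein Discovery computes its own factor contributions.

```python
import math

# Hypothetical weights for illustration; not learned from real data.
INTERCEPT = -0.4
WEIGHTS = {
    ("OpportunityType", "New Business / Add On"): 0.6,
    ("Industry", "Banking"): 0.3,
    ("LeadSource", "Web"): -0.2,
}


def examine(row: dict, threshold: float = 0.5) -> dict:
    """Score one training row and list its factors, largest effect first."""
    predictors = {name: value for name, value in row.items() if name != "IsWon"}
    # In an additive model, each predictor's contribution is its weight.
    contributions = {
        name: WEIGHTS.get((name, value), 0.0) for name, value in predictors.items()
    }
    score = 1.0 / (1.0 + math.exp(-(INTERCEPT + sum(contributions.values()))))
    return {
        "score": score,
        "predicted": score >= threshold,
        "actual": row["IsWon"],
        "top_factors": sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True),
    }


row = {"OpportunityType": "New Business / Add On", "Industry": "Banking",
       "LeadSource": "Web", "IsWon": False}
print(examine(row))
```

In this invented row the prediction (TRUE) disagrees with the actual outcome (FALSE), which is the kind of row worth clicking into on this tab.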

Explore Predictions and Improvements

Let’s leverage the power of Einstein Discovery to predict into the future. In this section, put Einstein to work by selecting a scenario and having Einstein calculate statistically probable future outcomes and suggestions on how to improve the outcome.

Note: This unit covers using your model to explore what-if predictions and improvements. Later, you learn how to deploy your model into Salesforce to get predictions and improvements on your current records.

On the left navigation panel, click Predictions.

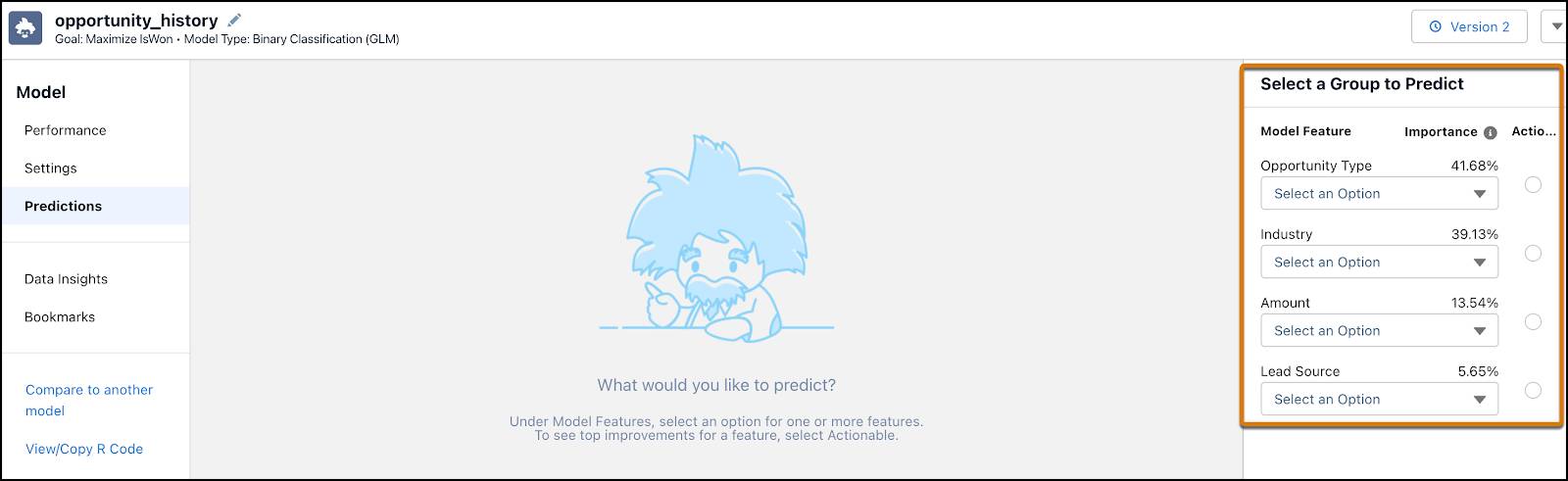

The panel on the right is where you select inputs into the model.

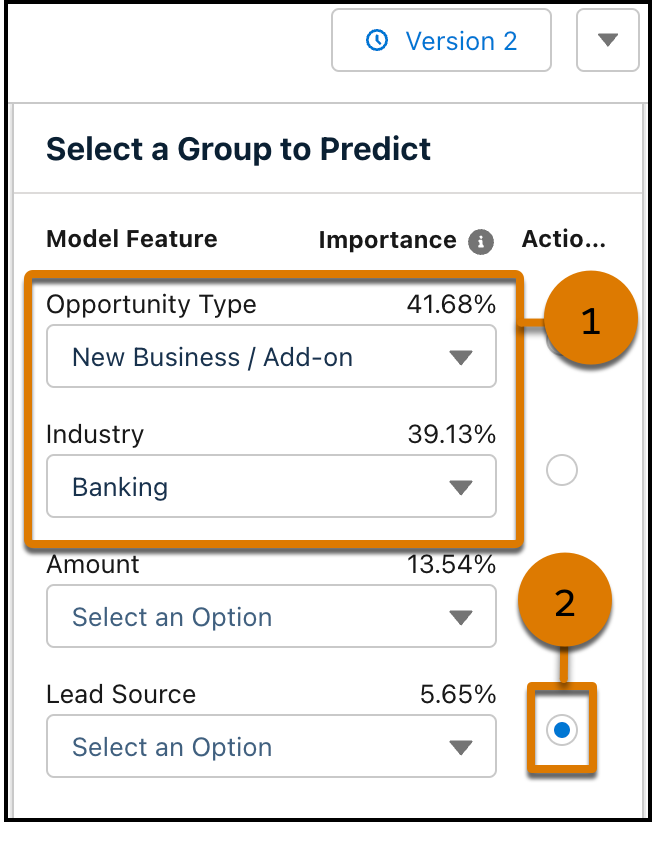

Under Select a Group to Predict, for Opportunity Type, select New Business / Add On, and for Industry, select Banking (1). Select the Actionable button next to Lead Source (2) to see improvements.

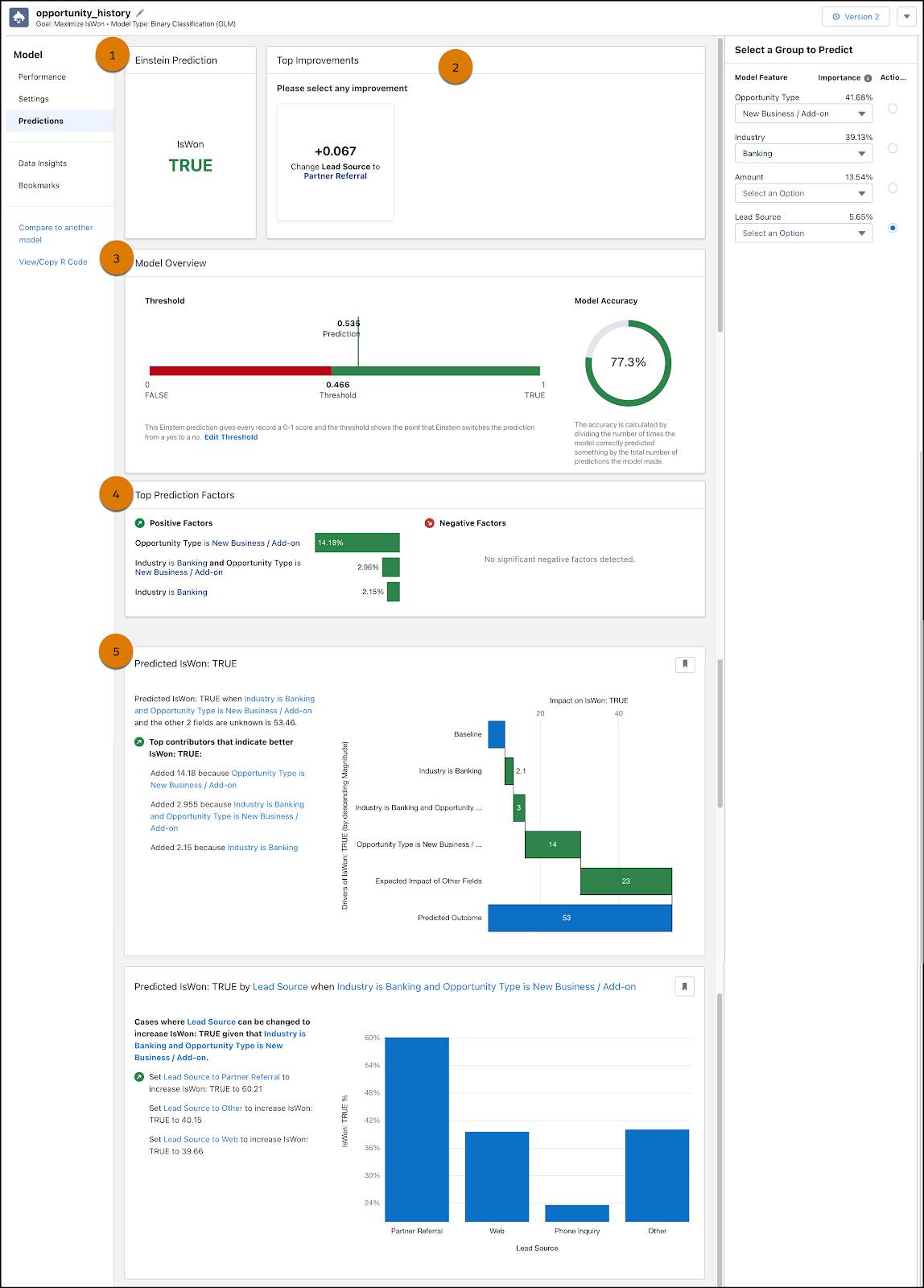

On the main page you see these panels (you might need to scroll down to see everything).

- Einstein Prediction (1) shows the prediction score for your selections. In this example, the predicted outcome is IsWon: True.

- Top Improvements (2) shows suggested actions that you can take to improve the predicted outcome. In this example, changing the lead source of the opportunity to partner referral improves the predicted outcome by 0.067.

- Model Overview (3) shows quality metrics for your model.

- Top Prediction Factors (4) shows explanatory variables, favorable and unfavorable, that are most strongly associated with the predicted outcome. In our example, an Opportunity Type of New Business / Add On improves the predicted outcome by 14.18%.

- Insights (5) shows additional insights associated with your selection.
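The Top Improvements panel answers a what-if question: if you changed an actionable variable, how would the prediction score move? The Python sketch below illustrates that idea with a hypothetical logistic-style model. It holds Opportunity Type and Industry fixed, tries each alternative Lead Source, and keeps only the changes that raise the score. The weights, the candidate lead sources, the current value `Web`, and the helper names are invented, so the deltas won't match the 0.067 in the example.

```python
import math

# Hypothetical model weights for illustration only.
INTERCEPT = -0.4
WEIGHTS = {
    ("OpportunityType", "New Business / Add On"): 0.6,
    ("Industry", "Banking"): 0.3,
    ("LeadSource", "Partner Referral"): 0.5,
    ("LeadSource", "Trade Show"): 0.2,
    ("LeadSource", "Email"): -0.1,
}


def predict(observation: dict[str, str]) -> float:
    """Prediction score from the hypothetical weights (logistic function)."""
    log_odds = INTERCEPT + sum(WEIGHTS.get(item, 0.0) for item in observation.items())
    return 1.0 / (1.0 + math.exp(-log_odds))


def top_improvements(observation: dict[str, str], actionable: str,
                     candidates: list[str]) -> list[tuple[str, float]]:
    """Try each alternative value of the actionable variable and keep the
    changes that raise the prediction score, best first."""
    baseline = predict(observation)
    gains = []
    for value in candidates:
        if value == observation[actionable]:
            continue  # not a change
        what_if = {**observation, actionable: value}
        delta = predict(what_if) - baseline
        if delta > 0:
            gains.append((value, delta))
    return sorted(gains, key=lambda pair: pair[1], reverse=True)


group = {"OpportunityType": "New Business / Add On", "Industry": "Banking", "LeadSource": "Web"}
lead_sources = ["Web", "Partner Referral", "Trade Show", "Email"]
for value, delta in top_improvements(group, "LeadSource", lead_sources):
    print(f"Change LeadSource to {value}: score +{delta:.3f}")
```

Only the actionable variable is varied; the predictors that define the group stay fixed, which mirrors selecting a group and marking Lead Source as actionable.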

What’s Next?

Now that you’ve evaluated the model, let's take a look at the data insights.

Resources