Apply the Digital Trust Framework

Learning Objectives

After completing this unit, you’ll be able to:

- Explain the digital trust framework.

- Build trustworthy software systems by applying the framework.

Knowledge Check

Let's review the phases of the Secure Development Lifecycle from the last unit and then build upon it as we discuss how to apply the digital trust framework to these phases.


The Digital Trust Framework

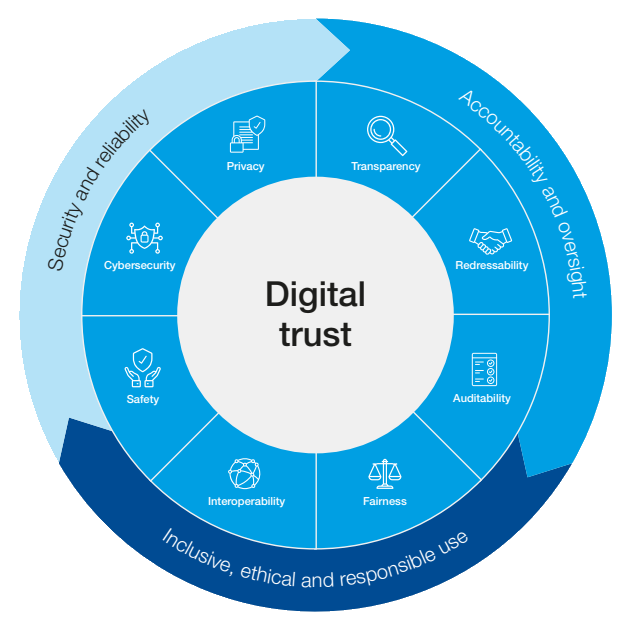

In response to declining digital trust, the World Economic Forum created the Digital Trust Framework, a collection of principles and practices that prioritizes the need for businesses to earn digital trust and make responsible decisions about technology use.

The framework emphasizes that trust depends not only on the technologies that are developed or implemented, but also on the decisions leaders make. To improve public trust in new technologies and the companies responsible for them, the framework outlines the following eight dimensions.

| Dimension | Definition |
|---|---|
| Cybersecurity | Cybersecurity is about protecting digital systems and data from unauthorized access and harm. It helps prevent damage and ensures that data and systems remain confidential, integral, and available. |
| Privacy | Privacy means individuals expect control over personal information, while organizations should design data processing methods that respect autonomy by informing individuals about the collection, use, and control of their information. |
| Transparency | Transparency means openly sharing how digital operations and data are used, balancing information between organizations and stakeholders. It shows the organization is acting in the individual's interest by making their actions known and understandable. |
| Safety | Safety encompasses efforts to prevent harm (emotional, physical, psychological) to people or society from technology uses and data processing. |
| Fairness | Fairness means achieving equitable outcomes for all stakeholders, taking into account relevant circumstances and expectations, while taking steps to avoid unfair outcomes. |
| Interoperability | Interoperability means that information systems can connect and share information with each other easily and without any unnecessary barriers or restrictions. |
| Auditability | Auditability means that an organization and third parties can review technology, data processing, and governance activities and results. This confirms the organization's commitments and demonstrates their intent to follow through on those commitments. |
| Redressability | Redressability means affected parties can seek recourse for negative impacts from technological processes, systems, or data uses. Trustworthy organizations have mechanisms to address unintended harm, making affected individuals whole. |

Applying the Framework

Let’s review a scenario that applies the dimensions of the World Economic Forum's digital trust framework along with the SDLC phases to build a trustworthy software system.

Scenario

A healthcare technology startup is developing a mobile app that helps patients manage their chronic conditions by tracking their symptoms, medications, and doctor appointments. The company’s leaders want to ensure the app is trustworthy, secure, and follows best practices for protecting patient data, so they decide to follow the World Economic Forum's digital trust roadmap.

Here’s how the company’s team of software developers applies each dimension of the digital trust framework to the SDLC process. Pay attention to the SDLC tools, methods, and industry standards used for each dimension.

CYBERSECURITY

| SDLC Phase | Activities |
|---|---|
| Planning | Developers use threat modeling and risk assessments to identify and prioritize potential security risks. |
| Requirements | They use OWASP (Open Web Application Security Project) guidance to ensure that the app's security requirements meet industry standards. |
| Design | They design security controls like encryption and access controls into the app to ensure data is protected from unauthorized access. |
| Implementation | Developers implement secure data storage techniques (for example, AES and RSA algorithms to encrypt data stored on the app’s servers and on the user's device) and secure data transfer (for example, Transport Layer Security [TLS] or a Virtual Private Network [VPN]). |
| Testing | Developers conduct security testing to ensure that the app’s security controls are functioning as intended. They use the iterative DevSecOps process to identify and address potential security vulnerabilities and conduct regular code reviews. |
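
To make the secure data transfer step more concrete, here is a minimal sketch of a TLS client configuration. It assumes the app's backend client is written in Python and uses the standard `ssl` module. The scenario doesn't name a language or library, and `build_tls_context` is a hypothetical helper name.

```python
import ssl


def build_tls_context() -> ssl.SSLContext:
    """Return a client-side TLS context with certificate checks enabled."""
    # create_default_context() turns on certificate verification and
    # hostname checking for client connections by default.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

A context like this could be passed to an HTTPS client so that connections to servers with invalid certificates or outdated protocol versions are rejected.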

PRIVACY

| SDLC Phase | Activities |
|---|---|
| Planning | Developers use privacy impact assessments to identify and prioritize potential privacy risks. They identify the types of information that the software system will collect, process, and store like name, age, patient chronic conditions, device information, chat history, and behavioral information. |
| Requirements | Developers define the app's privacy requirements in a way that respects user autonomy and informs individuals about the collection, use, and control of their personal information. They use the General Data Protection Regulation (GDPR) to ensure the app's privacy requirements meet industry standards. |
| Design | Developers design the app’s features and functions to minimize the collection and use of personal information, and to provide individuals with options for controlling their personal information. They use privacy-by-design and data minimization to ensure the app collects and uses only the data necessary for its intended purpose. |
| Implementation | Developers implement privacy into the app by using encryption and access controls to protect user data. |
| Testing | They conduct privacy testing to ensure that the app’s privacy controls are functioning as intended. They use tools like privacy impact assessments and privacy-focused penetration testing to identify and address potential privacy vulnerabilities. |
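
Data minimization, mentioned in the design phase, can be illustrated with a short sketch. It assumes each processing purpose has an explicit list of fields it may use. The `ALLOWED_FIELDS` mapping, the purpose names, and the sample record are all hypothetical.

```python
# Hypothetical mapping from a processing purpose to the fields it needs.
ALLOWED_FIELDS = {
    "medication_reminder": {"patient_id", "medications"},
    "appointment_reminder": {"patient_id", "appointments"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields that the given purpose is allowed to use."""
    allowed = ALLOWED_FIELDS.get(purpose, set())  # unknown purpose: nothing
    return {key: value for key, value in record.items() if key in allowed}


# Illustrative patient record, not real data.
patient = {
    "patient_id": "p-001",
    "name": "Example Patient",
    "age": 54,
    "medications": ["metformin"],
    "appointments": ["2030-01-15"],
    "chat_history": ["..."],
}
reminder_view = minimize(patient, "medication_reminder")
print(reminder_view)
```

Defaulting to an empty set means a feature with no declared purpose receives no personal data, which is one way to make minimization the default rather than an afterthought.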

TRANSPARENCY

| SDLC Phase | Activities |
|---|---|
| Planning | Developers define transparency objectives for the app and identify the information that will be made available to users. This includes deciding on the types of data that will be collected, how it will be used, and who will have access to it. |
| Requirements | Developers ensure transparency by defining the app’s transparency requirements in a way that promotes openness and honesty about how the app collects, uses, and shares user data. They use transparency standards and guidelines like the International Organization for Standardization (ISO) 26000 to ensure that the app's transparency requirements meet industry standards. |
| Design | They use user-centric design principles to ensure that the app is easy to use and understand, even for individuals who may have limited technical knowledge. Developers also make it easy for users to access and manage their data by providing resources and support including a user manual, FAQs, and customer support channels. |
| Implementation | Developers implement transparency controls and measures, such as privacy notices and features that let users access and delete their data. They also implement privacy settings that allow users to control what information is shared and with whom. |
| Testing | Developers conduct user testing to ensure individuals can easily access and understand the information provided in the app and easily control their data settings. |
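
The user data access and deletion controls from the implementation phase might look something like the following sketch. It uses an in-memory dictionary as a stand-in for the app's real storage, and the `UserDataStore` class and its method names are hypothetical.

```python
import json


class UserDataStore:
    """In-memory stand-in for the app's storage (hypothetical)."""

    def __init__(self) -> None:
        self._records = {}

    def save(self, user_id: str, data: dict) -> None:
        self._records[user_id] = data

    def export(self, user_id: str) -> str:
        """Return everything held about the user as readable JSON."""
        return json.dumps(self._records.get(user_id, {}), indent=2, sort_keys=True)

    def delete(self, user_id: str) -> bool:
        """Erase the user's data and report whether anything was removed."""
        return self._records.pop(user_id, None) is not None


store = UserDataStore()
store.save("u1", {"symptoms": ["fatigue"], "medications": ["metformin"]})
print(store.export("u1"))
```

Returning the export in a readable format supports the transparency goal that users can understand, not just download, what the app holds about them.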

SAFETY

| SDLC Phase | Activities |
|---|---|
| Planning | Developers conduct a risk assessment to identify potential risks related to user data security (for example, data breaches, data theft), adverse effects of medication interactions (such as recommending a medication that could cause a harmful interaction), or unreliable symptom tracking (for example, delayed treatment, misdiagnosis). They use tools like Failure Mode and Effects Analysis (FMEA) to identify potential safety hazards and develop a risk mitigation plan. |
| Requirements | Developers ensure the app’s safety requirements are defined by providing accurate and reliable symptom tracking, ensuring secure data storage, and avoiding any features that may be harmful to users. |
| Design | They incorporate safety into the app’s design by adding features that promote safety, such as clear medication dosage tracking, reminders for doctor appointments, and emergency contact options. |
| Implementation | Developers can implement safety into the app by using industry-standard safety controls and measures. They use secure data storage techniques, encryption, and other measures to ensure that patient data is kept secure and confidential. They also provide error handling mechanisms (for example, input validation, graceful degradation, crash reporting) to prevent the app’s performance from posing any safety risks to patients. |
| Testing | Developers conduct penetration testing and vulnerability assessments to identify potential security vulnerabilities and address them before the app is released to the public. |
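
FMEA commonly ranks hazards by a risk priority number (RPN), the product of severity, occurrence, and detection ratings. The sketch below applies that calculation to the three risk areas from the planning phase. The ratings are illustrative placeholders, not real assessments, and the `FailureMode` class is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    severity: int    # 1 (negligible) to 10 (catastrophic)
    occurrence: int  # 1 (rare) to 10 (frequent)
    detection: int   # 1 (easily detected) to 10 (hard to detect)

    @property
    def rpn(self) -> int:
        """Risk priority number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


# Ratings below are illustrative placeholders only.
failure_modes = [
    FailureMode("Patient data breach", 9, 3, 4),
    FailureMode("Harmful medication interaction", 10, 2, 6),
    FailureMode("Unreliable symptom tracking", 7, 4, 5),
]

# Highest RPN first, so mitigation planning starts with the largest risk.
ranked = sorted(failure_modes, key=lambda fm: fm.rpn, reverse=True)
for fm in ranked:
    print(fm.name, fm.rpn)
```

Teams often revisit the ratings after each mitigation, because reducing occurrence or improving detection lowers the RPN even when severity stays the same.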

FAIRNESS

| SDLC Phase | Activities |
|---|---|
| Planning | Developers identify potential biases in the app’s design using demographic research, stakeholder analysis, diversity and inclusion assessment, and design thinking to understand the intended audience and ensure the app’s functionality and features are fair and relevant to all users. |
| Requirements | They use user stories and user personas to ensure the app’s requirements are defined in a way that addresses the needs and preferences of all patient groups. They also use the Web Content Accessibility Guidelines (WCAG) 2.1 to ensure that the app is accessible to users with disabilities. |
| Design | Developers use algorithmic fairness and bias detection to design fairness into the app. They also use design thinking, user-centered design, and prototyping to incorporate fairness into the app’s design. Data analysis tools like Explainable AI (XAI) techniques are used to identify potential biases in the app’s data analysis algorithms. |
| Implementation | Developers implement data anonymization to protect user privacy and ensure that personal information is not used to discriminate against specific individuals or groups. They can also use algorithms and machine learning models that are regularly monitored and tested for potential biases and corrected as necessary. |
| Testing | Developers conduct audits and reviews of the app’s data processing methods to ensure that they are fair and unbiased. |
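
One simple bias check is to compare how often each group receives a positive outcome, sometimes called a demographic parity check. The following sketch assumes outcomes are recorded as (group, outcome) pairs. The group labels, the sample data, and the function names are hypothetical, and real fairness audits typically combine several metrics.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Map each group to the share of its members with a positive outcome."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, positive in outcomes:
        totals[group] += 1
        positives[group] += int(positive)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(outcomes):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(outcomes).values()
    return max(rates) - min(rates)


# Hypothetical data: (age group, whether the app flagged a follow-up reminder).
sample = [
    ("under_40", True), ("under_40", True), ("under_40", False), ("under_40", True),
    ("over_40", True), ("over_40", False), ("over_40", False), ("over_40", False),
]
gap = parity_gap(sample)
print(selection_rates(sample), gap)
```

A large gap doesn't prove unfairness on its own, but it signals where developers should look more closely at the data and the algorithm.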

INTEROPERABILITY

| SDLC Phase | Activities |
|---|---|
| Planning | Developers identify the different systems and platforms that the mobile app will need to interact with, including electronic health record systems, pharmacy systems, and other healthcare information systems. They also ensure that the app adheres to industry standards and regulations for interoperability. |
| Requirements | They use Fast Healthcare Interoperability Resources (FHIR) to ensure that the app’s data can be exchanged with other healthcare systems. |
| Design | Developers design the app to be modular and scalable, so they use application programming interfaces (APIs) and software development kits (SDKs) to facilitate integration with other systems. |
| Implementation | Developers implement interoperability into the app by using industry-standard data exchange formats, such as those published by Health Level Seven International (HL7), to ensure the app’s data can be shared with other healthcare systems. |
| Testing | Developers use Electronic Health Record (EHR) simulators to test the app’s ability to exchange data with different healthcare systems. |
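
To give a sense of what FHIR-based exchange involves, here is a minimal sketch that builds a FHIR-style Patient resource as JSON. It includes only a few common elements and isn't validated against the full specification. The `to_fhir_patient` helper and the sample values are hypothetical.

```python
import json


def to_fhir_patient(patient_id: str, family: str, given: str, birth_date: str) -> dict:
    """Build a minimal FHIR-style Patient resource as a plain dict."""
    return {
        "resourceType": "Patient",
        "id": patient_id,
        "name": [{"family": family, "given": [given]}],
        "birthDate": birth_date,  # FHIR dates use the YYYY-MM-DD form
    }


# Illustrative values, not real patient data.
resource = to_fhir_patient("example-001", "Rivera", "Alex", "1970-05-17")
payload = json.dumps(resource)
print(payload)
```

In practice, a team would more likely rely on a maintained FHIR library and a validator than hand-built dictionaries, but the shape of the payload is the same idea.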

AUDITABILITY

| SDLC Phase | Activities |
|---|---|
| Planning | Developers use data flow diagrams and data governance frameworks to identify potential auditability challenges and requirements. |
| Requirements | Developers ensure auditability by defining the app’s data and governance requirements in a way that promotes easy auditing. They use the Health Insurance Portability and Accountability Act (HIPAA) to ensure the app’s data and governance processes meet industry standards. |
| Design | They incorporate logging and monitoring into the design to enable easy tracking of data and governance processes to support auditing. |
| Implementation | To ensure the app’s data can be easily audited, developers record it in a structured, machine-readable format such as JavaScript Object Notation (JSON). |
| Testing | Developers test the app's auditability with vulnerability scanners and penetration testing frameworks to identify potential security vulnerabilities and auditability challenges. |
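
Logging in JSON makes audit records easy for both people and tools to review. The sketch below writes one audit event per line. The field names (`actor`, `action`, `resource`) and the `audit_entry` helper are assumptions for illustration, not a required schema.

```python
import json
from datetime import datetime, timezone


def audit_entry(actor: str, action: str, resource: str) -> str:
    """Serialize one audit event as a single JSON line."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # UTC, ISO 8601
        "actor": actor,
        "action": action,
        "resource": resource,
    }
    return json.dumps(event, sort_keys=True)


# Hypothetical event: a clinician reads a patient's medication list.
line = audit_entry("clinician-42", "read", "patient/p-001/medications")
print(line)
```

Recording who did what, to which resource, and when gives auditors a consistent trail to query without parsing free-form text.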

REDRESSABILITY

| SDLC Phase | Activities |
|---|---|
| Planning | Developers use stakeholder analysis and user personas to identify potential harm and requirements for addressing potential user harm. |
| Requirements | They use the Organization for Economic Cooperation and Development (OECD) Guidelines for Multinational Enterprises to ensure the app’s requirements align with best practices for social responsibility and human rights. |
| Design | They design mechanisms for users to seek recourse for negative impacts from the software system, such as support tickets and feedback forms. |
| Implementation | They implement the redressability mechanisms in the software system and ensure that they are easily accessible to users. |
| Testing | Developers test the app’s redressability by conducting user acceptance testing and quality assurance testing to ensure the app meets user requirements and expectations. They also use automated testing frameworks to identify potential issues and vulnerabilities. |
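
A support ticket mechanism like the one described in the design phase can be sketched as a small state machine. The statuses, the allowed transitions, and the `RedressTicket` class are all hypothetical, and a real system would add persistence, notifications, and response-time targets.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    OPEN = "open"
    IN_REVIEW = "in_review"
    RESOLVED = "resolved"


# Allowed status transitions (hypothetical workflow).
TRANSITIONS = {
    Status.OPEN: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.RESOLVED},
    Status.RESOLVED: set(),
}


@dataclass
class RedressTicket:
    user_id: str
    description: str
    status: Status = Status.OPEN
    history: list = field(default_factory=list)

    def advance(self, new_status: Status) -> None:
        """Move to new_status, rejecting transitions the workflow forbids."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(
                f"cannot go from {self.status.value} to {new_status.value}"
            )
        self.history.append(self.status)  # keep a trail of earlier statuses
        self.status = new_status


ticket = RedressTicket("u1", "Missed dose reminder")
ticket.advance(Status.IN_REVIEW)
ticket.advance(Status.RESOLVED)
print(ticket.status.value, [s.value for s in ticket.history])
```

Keeping the status history on the ticket also supports auditability, because reviewers can see how each complaint was handled.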

The healthcare technology startup has taken a comprehensive and proactive approach to ensure its mobile app is trustworthy, secure, and follows best practices for protecting patient data. By prioritizing digital trust, it has implemented a secure SDLC process and applied the Digital Trust Framework, building security controls, privacy policies, data minimization techniques, transparency practices, safety measures, fairness-aware design, interoperability standards, auditability, and redressability mechanisms into a major customer-facing software system.

All of these efforts help to build and sustain patient trust and confidence in the app, which can lead to increased revenue and an enhanced brand reputation for the startup, and to better healthcare outcomes for those managing chronic conditions.

Sum It Up

In this module, you’ve been introduced to the Secure Development Lifecycle and the Digital Trust Framework. You’ve learned how they complement each other and how to apply them to prioritize digital trust when building and providing digital services and products.

Interested in learning more about cybersecurity roles and hearing from security professionals? Check out the Cybersecurity Career Path on Trailhead.

Resources

- Trailhead: Secure Development Lifecycle

- External Site: RealVNC: The Importance Digital Trust Should Have to Your Software Provider

- External Site: IEEE: Understanding and Building Trust in Software Systems

- External Site: OPTIV: Incorporating Secure Design Into Your Software Development Lifecycle

- External Site: NIST: Secure Software Development Framework (SSDF) Version 1.1: Recommendations for Mitigating the Risk of Software Vulnerabilities

- External Site: Fortinet: What Is DevSecOps?