Discover AI Techniques and Applications

Learning Objectives

After completing this unit, you’ll be able to:

- Identify practical use cases of AI.

- Identify the limitations of AI models and ChatGPT.

- Understand the data lifecycle for AI and the importance of data privacy and security in AI applications.

Artificial Intelligence Technologies

Artificial intelligence is the expansive field of making machines learn and think like humans, and it encompasses many technologies.

- Machine learning uses various mathematical algorithms to get insights from data and make predictions.

- Deep learning uses a specific type of algorithm called a neural network to find associations between a set of inputs and outputs. Deep learning becomes more effective and efficient as the amount of data increases.

- Natural language processing is a technology that enables machines to take human language as an input and perform actions accordingly.

- Large language models are advanced computer models designed to understand and generate humanlike text.

- Computer vision is technology that enables machines to interpret visual information.

- Robotics is a technology that enables machines to perform physical tasks.

Check out the Artificial Intelligence Fundamentals Trailhead module to learn more.

Machine learning (ML) can be classified into several types based on the learning approach and the nature of the problem being solved.

- Supervised learning: In this machine learning approach, a model learns from labeled data, making predictions based on patterns it finds. The model can then make predictions or classify new, unseen data based on the patterns it has learned during training.

- Unsupervised learning: Here, the model learns from unlabeled data, finding patterns and relationships without predefined outputs. The model learns to identify similarities, group similar data points, or find underlying hidden patterns in the dataset.

- Reinforcement learning: This type of learning involves an agent learning through trial and error, taking actions to maximize rewards received from an environment. Reinforcement learning is often used in scenarios where an optimal decision-making strategy needs to be learned through trial and error, such as in robotics, game playing, and autonomous systems. The agent explores different actions and learns from the consequences of its actions to optimize its decision-making process.
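
To make the first two approaches concrete, here is a minimal sketch in plain Python. The usage numbers, the "churn" and "stay" labels, and the function names are all made up for illustration. The supervised part learns one average value per label and classifies a new value by the closest average. The unsupervised part receives the same numbers without labels and simply splits them into two groups.

```python
from statistics import mean

# Supervised learning: each example pairs an input with a known label.
# (Hypothetical data: weekly hours of product usage -> customer outcome.)
labeled = [(1.0, "churn"), (2.0, "churn"), (8.0, "stay"), (9.0, "stay")]

def train_centroids(examples):
    """Learn one average value (centroid) per label."""
    by_label = {}
    for value, label in examples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, value):
    """Classify a new value by the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

centroids = train_centroids(labeled)
prediction = predict(centroids, 7.5)  # a new, unseen data point

# Unsupervised learning: the same values, but with no labels at all.
def two_means(values, rounds=10):
    """Tiny 1-D clustering into two groups, starting from the min and max."""
    low, high = min(values), max(values)
    for _ in range(rounds):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(group_low), mean(group_high)
    return sorted(group_low), sorted(group_high)

clusters = two_means([1.0, 2.0, 8.0, 9.0])
print(prediction, clusters)
```

The supervised model can name its answer because it was shown labels during training. The clustering step only discovers that there are two groups and leaves it to a person to decide what they mean.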

AutoML and no-code AI tools, such as OneNine AI and Salesforce AI, have been introduced in recent years to automate the process of building an entire machine learning pipeline with minimal human intervention.

The Role of Machine Learning

Machine learning is a subset of artificial intelligence that uses statistical algorithms to enable computers to learn from data, without being explicitly programmed. It uses algorithms to build models that can make predictions or decisions based on inputs.

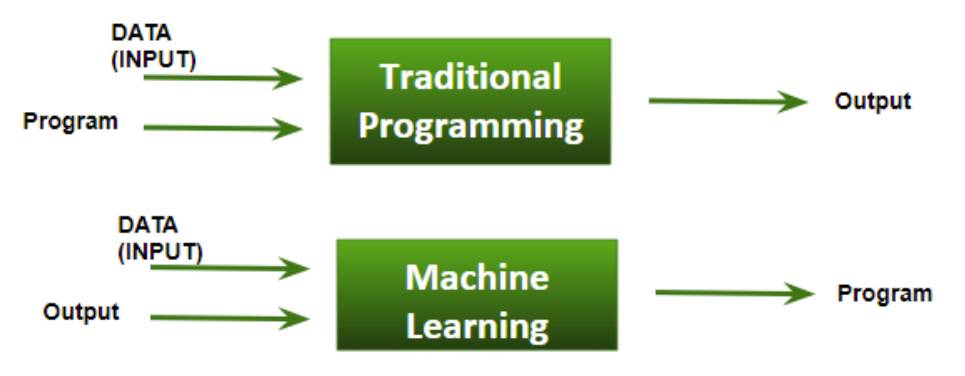

Machine Learning vs Programming

In traditional programming, the programmer must have a clear understanding of the problem and the solution they’re trying to achieve. In machine learning, the algorithm learns from the data and generates its own rules or models to solve the problem.
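
The contrast can be sketched in a few lines of Python. In this hypothetical example (the order amounts, labels, and function names are invented), the first function uses a threshold that a programmer picked by hand, while the second derives a threshold from labeled examples by choosing the candidate that misclassifies the fewest of them.

```python
# Traditional programming: a person writes the rule.
def is_large_order_rule(amount):
    return amount > 100  # threshold chosen by the programmer

# Machine learning: the rule (here, a single threshold) comes from data.
def learn_threshold(examples):
    """Return the candidate threshold that misclassifies the fewest examples."""
    candidates = sorted(amount for amount, _ in examples)

    def errors(threshold):
        return sum((amount > threshold) != is_large
                   for amount, is_large in examples)

    return min(candidates, key=errors)

# Hypothetical labeled examples: (order amount, was it handled as "large"?)
examples = [(20, False), (35, False), (60, False),
            (80, True), (120, True), (200, True)]

threshold = learn_threshold(examples)

def is_large_order_learned(amount):
    return amount > threshold

print(threshold, is_large_order_rule(90), is_large_order_learned(90))
```

With this data, the hand-written rule and the learned rule disagree about an order of 90, because the labeled examples place the boundary lower than the programmer guessed.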

Importance of Data in Machine Learning

Data is the fuel driving machine learning. The quality and quantity of data used in training a machine learning model can have a significant impact on its accuracy and effectiveness. It’s essential to ensure that the data used is relevant, accurate, complete, and unbiased.

Data Quality and the Limitations of Machine Learning

To ensure data quality, it’s necessary to clean and preprocess the data, removing any noise (unwanted or meaningless information), missing values, or outliers.
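
As a rough illustration of that cleaning step, the sketch below drops missing values and then filters out outliers. The readings are invented, and the outlier rule (keep values within three median absolute deviations of the median) is just one common heuristic chosen for this example. Real projects pick a rule that suits their data.

```python
from statistics import median

# Hypothetical sensor readings; None marks a missing value.
raw = [12.0, 11.5, None, 12.3, 250.0, 11.8, None, 12.1]

def clean(values, max_deviation=3.0):
    """Drop missing values, then drop points far from the median."""
    present = [v for v in values if v is not None]
    center = median(present)
    # Median absolute deviation: a spread measure that outliers barely affect.
    # Note: if this spread is 0, every value not equal to the median is dropped.
    spread = median(abs(v - center) for v in present)
    return [v for v in present if abs(v - center) <= max_deviation * spread]

cleaned = clean(raw)
print(cleaned)
```

Whether to drop missing values or fill them in (for example, with the median) is a judgment call that depends on how much data is missing and why.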

While machine learning is a powerful tool for solving a wide range of problems, there are also limitations to its effectiveness including overfitting, underfitting, and bias.

- Overfitting occurs when the model is too complex and fits the training data too closely, resulting in poor generalization.

- Underfitting occurs when the model is too simple and does not capture the underlying patterns in the data.

- Bias occurs when the model is trained on data that is not representative of the real-world population.
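
Overfitting and underfitting show up when you compare a model's error on the data it was trained on with its error on data it has not seen. The sketch below uses invented, roughly linear data and three toy models. A constant prediction stands in for an overly simple model. A model that memorizes the training points stands in for an overly flexible one. A straight-line fit sits in between. All names and numbers are assumptions made for this example.

```python
from statistics import mean

# Hypothetical data that roughly follows y = 2x, with some noise in training.
train = [(0.0, 0.3), (1.0, 1.6), (2.0, 4.4), (3.0, 5.7), (4.0, 8.3), (5.0, 9.8)]
test = [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0), (3.5, 7.0), (4.5, 9.0)]

def fit_constant(data):
    """Too simple: always predict the average training output."""
    average = mean(y for _, y in data)
    return lambda x: average

def fit_line(data):
    """Least-squares straight line through the training data."""
    x_avg = mean(x for x, _ in data)
    y_avg = mean(y for _, y in data)
    slope = (sum((x - x_avg) * (y - y_avg) for x, y in data)
             / sum((x - x_avg) ** 2 for x, _ in data))
    return lambda x: y_avg + slope * (x - x_avg)

def fit_memorizer(data):
    """Too flexible: return the output of the nearest training point."""
    return lambda x: min(data, key=lambda point: abs(point[0] - x))[1]

def mean_squared_error(model, data):
    return mean((model(x) - y) ** 2 for x, y in data)

models = {"constant": fit_constant(train),
          "line": fit_line(train),
          "memorizer": fit_memorizer(train)}
results = {name: (mean_squared_error(model, train),
                  mean_squared_error(model, test))
           for name, model in models.items()}

for name, (train_error, test_error) in results.items():
    print(f"{name:10s} train={train_error:.3f} test={test_error:.3f}")
```

The memorizer reproduces the training data perfectly, noise included, yet does worse than the line on new points. The constant model does poorly on both sets, which is the signature of underfitting.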

More broadly, machine learning is limited by the quality and quantity of data used, lack of transparency in complex models, difficulty in generalizing to new situations, challenges with handling missing data, and potential for biased predictions. It’s important to be aware of these limitations and to consider them when designing and using machine learning models.

Predictive vs Generative AI

Predictive AI is the use of machine learning algorithms to make predictions or decisions based on data inputs. This can be used in a wide range of applications, including fraud detection, medical diagnosis, and customer churn prediction.

Distinct Approaches, Different Purposes

Predictive AI is a type of machine learning that trains a model to make predictions or decisions based on data. The model is given a set of input data and it learns to recognize patterns in the data that allow it to make accurate predictions for new inputs. Predictive AI is widely used in applications such as image recognition, speech recognition, and natural language processing.

Generative AI, on the other hand, creates new content, such as images, videos, or text, based on a given input. Rather than making predictions based on existing data, generative AI creates new data that is similar to the input data. This can be used in a wide range of applications, including art, music, and creative writing. One common example of generative AI is the use of neural networks to generate new images based on a given set of inputs.

While predictive and generative AI are different approaches to artificial intelligence, they’re not mutually exclusive. In fact, many AI applications use both predictive and generative techniques to achieve their goals. For example, a chatbot might use predictive AI to understand a user’s input, and generative AI to generate a response that is similar to human speech. Overall, the choice of predictive or generative AI depends on the specific application and project goals.

Now you know a thing or two about predictive AI and generative AI and their differences. For your reference, here’s a quick rundown of what each can do.

| Predictive AI | Generative AI |
|---|---|
| Can make accurate predictions based on labeled data | Can generate new and creative content |
| Can be used to solve a wide range of problems, including fraud detection, medical diagnosis, and customer churn prediction | Can be used in a wide range of creative applications, such as art, music, and writing |
| Limited by the quality and quantity of labeled data available | Can generate biased or inappropriate content based on the input data |
| May struggle with making predictions outside of the labeled data it was trained on | May struggle with understanding context or generating coherent content |
| May require significant computational resources to train and deploy | May not be suitable for all applications, such as those that require accuracy and precision |

Limitations of Generative AI

Generative AI creates new content, such as images, videos, or text, based on a given input. ChatGPT, for example, is a generative AI tool that uses large language models to generate humanlike responses to text inputs. It works by training on large amounts of text data and learning to predict the next word in a sequence based on the previous words.
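
The idea of predicting the next word from previous words can be illustrated with a toy model that only counts which word most often follows another in a tiny, made-up corpus. This is not how ChatGPT is built. Large language models use neural networks and far more context than a single preceding word. The sketch only shows the basic idea, and it shows how completely the output depends on the training text.

```python
from collections import Counter, defaultdict

# A tiny, invented training corpus.
corpus = ("the cat sat on the mat . the cat ate the fish . "
          "the dog sat on the rug .")

def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.split()
    for previous, following in zip(words, words[1:]):
        counts[previous][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]

model = train_bigrams(corpus)
print(predict_next(model, "the"))      # most common word after "the"
print(predict_next(model, "sat"))
print(predict_next(model, "unicorn"))  # never seen in training
```

Because "cat" follows "the" more often than any other word in this corpus, the model favors it, and it has nothing to say about a word it never saw. Whatever patterns or gaps exist in the training text carry straight through to the predictions.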

While ChatGPT can generate humanlike responses, it also has limitations. It may generate biased or inappropriate responses based on the data it was trained on. This is a common problem with machine learning models, as they can reflect the biases and limitations of the training data. For example, if the training data contains a lot of negative or offensive language, ChatGPT may generate responses that are similarly negative or offensive.

ChatGPT may also struggle with understanding user input context or generating coherent responses. Like other machine learning models, ChatGPT is only as good as the data it’s trained on. If the training data is incomplete, biased, or otherwise flawed, the model may not be able to generate accurate or useful responses. This can be a significant limitation in applications where accuracy and relevance are important.

The ChatGPT example demonstrates the critical role that data plays in using AI effectively.

Data Lifecycle for AI

The data lifecycle refers to the stages that data goes through, from its initial collection to its eventual deletion. The data lifecycle for AI consists of a series of steps, including data collection, preprocessing, training, evaluation, and deployment. It’s important to ensure that the data used is relevant, accurate, complete, and unbiased, and that the models generated are effective and ethical.

The data lifecycle for AI is an ongoing process, as models need to be continuously updated and refined based on new data and feedback. It’s an iterative process that requires careful attention to detail and a commitment to ethical and effective AI. Developers and users of ML models should ensure that their models are effective, accurate, and ethical, and make a positive impact in the world. The data lifecycle is crucial to ensuring that data is collected, stored, and used responsibly and ethically.

These are the stages of the data lifecycle.

- Data collection: In this stage, data is collected from various sources, such as sensors, surveys, and online sources.

- Data storage: Once data is collected, it must be stored securely.

- Data processing: In this stage, data is processed to extract insights and patterns. This may include using machine learning algorithms or other data analysis techniques.

- Data use: Once the data has been processed, it can be used for its intended purpose, such as making decisions or informing policy.

- Data sharing: At times, it may be necessary to share data with other organizations or individuals.

- Data retention: Data retention refers to the length of time that data is kept.

- Data disposal: Once data is no longer needed, it needs to be disposed of securely. This may involve securely deleting digital data or destroying physical media.
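
The retention and disposal stages are often enforced with a simple rule in code. The sketch below assumes a hypothetical policy of keeping records for 365 days. The record fields, the dates, and the `split_by_retention` helper are invented for illustration. It only decides which records are due for disposal. Actually deleting them securely is a separate step.

```python
from datetime import date, timedelta

# Hypothetical policy: keep records for one year after collection.
RETENTION = timedelta(days=365)

# Hypothetical records with the date each one was collected.
records = [
    {"id": 1, "collected_on": date(2023, 1, 10)},
    {"id": 2, "collected_on": date(2024, 6, 1)},
]

def split_by_retention(records, today, retention=RETENTION):
    """Separate records still within retention from those due for disposal."""
    keep, dispose = [], []
    for record in records:
        expired = today - record["collected_on"] > retention
        (dispose if expired else keep).append(record)
    return keep, dispose

keep, dispose = split_by_retention(records, today=date(2024, 12, 31))
print([r["id"] for r in keep], [r["id"] for r in dispose])
```

Passing `today` as a parameter keeps the check repeatable, which makes it easier to show that a retention policy was applied as written.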

While AI and ML have the potential to revolutionize many industries and solve complex problems, it’s important to be aware of their limitations and ethical considerations. Continue to the next unit to learn about the importance of data ethics and privacy.

Resources

- External Site: OneNine AI: AI Use Cases by Industry

- GitHub: Types of Deep Learning Models

- Trailhead: Artificial Intelligence Fundamentals

- Blog Post: What are ChatGPT’s limits?