Use a Secure Development Lifecycle

Learning Objectives

After completing this unit, you’ll be able to:

- Explain the importance of securing the development lifecycle.

- List prominent attacks (injection, XSS) that take advantage of lack of sanitization and validation.

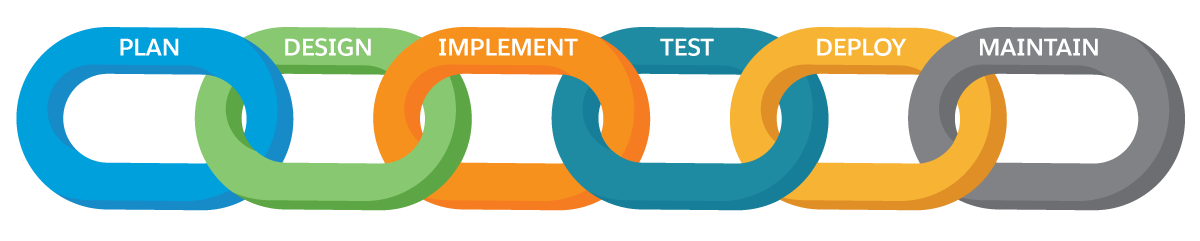

Protect the Software Development Lifecycle

- Plan what requirements and use cases an application will address.

- Design the application.

- Implement the application through coding.

- Test that the application functions properly.

- Deploy the application to the customer.

- Maintain the application.

The preceding list denotes the six steps within a typical software development lifecycle (SDLC). If you have worked on an application development team before, you’re probably familiar with each phase of this cycle. But what you may not know is where the role of an application security engineer fits in. Does the engineer primarily test the security of the application before it’s deployed to the customer? Do they focus on maintaining the security of the application by patching critical vulnerabilities? Or do they suggest that security features be incorporated into the design?

The answer is that an application security engineer plays a critical role in each step of the SDLC. Because security issues can be introduced or discovered at any phase of an application’s lifecycle, the application security engineer has a continuous role to play in protecting the confidentiality, integrity, and availability of the application’s data. Security is often thought of as a weakest-link problem. Just as a strong metal chain breaks if a single link is compromised, the application as a whole is only protected if every phase of the SDLC, from development through deployment and maintenance, is secured.

For example, during the implementation phase of the SDLC, vulnerabilities can be introduced into the code if developers fail to put in place checks that sanitize and validate inputs. An attacker could then supply crafted input to improperly access another user’s data or an administrative function.

Additionally, development teams often leverage open source code when developing applications. Open source code is collaboratively developed by many people working together on the Internet. While this code can be more secure due to the large number of developers working together to develop and review it, it also can sometimes contain vulnerabilities. Development teams need to maintain awareness and check these code libraries to be sure they’re not using components with known vulnerabilities.
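As a rough illustration of checking components against known vulnerabilities, the sketch below compares a project's pinned dependency versions with a small advisory table. The package names, versions, and the `KNOWN_FIXED_IN` table are all hypothetical and exist only for this example. In practice, teams typically rely on a maintained vulnerability database and a dedicated scanning tool rather than a hand-written list.

```python
def parse_version(text):
    """Turn a dotted version such as '2.4.1' into a comparable tuple (2, 4, 1)."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical advisory data: package name -> first version that contains the fix.
KNOWN_FIXED_IN = {
    "examplelib": "2.4.1",
    "demo-parser": "1.0.9",
}


def find_vulnerable(pinned):
    """Return a sorted list of pinned packages that are older than the fixed version."""
    findings = []
    for name, version in pinned.items():
        fixed = KNOWN_FIXED_IN.get(name)
        if fixed and parse_version(version) < parse_version(fixed):
            findings.append(f"{name} {version} (fixed in {fixed})")
    return sorted(findings)


# Hypothetical project dependencies.
project_pins = {"examplelib": "2.3.0", "demo-parser": "1.0.9", "other": "0.1"}
print(find_vulnerable(project_pins))
# ['examplelib 2.3.0 (fixed in 2.4.1)']
```

Running a check like this on every build, rather than once before release, helps catch vulnerabilities that are disclosed after a component was first adopted.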

In the world of agile development, where application development teams are continually pushing out new services and features on tighter timelines, attention to proper cyber hygiene and change control can often fall by the wayside.

Application security engineers work side by side with development teams at all phases of the SDLC. They serve as advocates, consultants, and subject matter experts to ensure that applications are designed securely, that code is implemented with security validations in place, and that vulnerabilities are not introduced during the transition from development to quality assurance to production.

Protect Against Injection and Cross-Site Scripting (XSS)

Securing the SDLC is especially important in protecting against two prominent and easily exploitable application security risks: injection and cross-site scripting (XSS). Think about the functionality of an application. One of the most important features of almost every app is the ability to store and retrieve data from a datastore (such as a database). Developers use coding languages to tell the application how to interact with the database based on user inputs. For example, when a user logs in to an application, they enter their username and password. When they press Enter, they trigger a database query, and if the query is successful, the user is able to access the system.

Unfortunately, every interaction a legitimate user has with an application can also be exploited by an attacker with malicious intent. An injection flaw occurs when untrusted data supplied by an attacker is sent to an interpreter as part of a command or query. If developers have not validated and sanitized inputs properly, an attacker could execute unintended commands or access data without proper authorization. This can result in data loss, corruption, or disclosure.
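To make the login scenario concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `users` table, the sample account, and both login functions are assumptions made up for this illustration. A real application would also store salted password hashes rather than plaintext passwords. The first function builds the query by concatenating user input, while the second passes the input as bound parameters.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database holding one hypothetical user (demo only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 'correct-horse')")
    return conn


def login_vulnerable(conn, username, password):
    # UNSAFE: user input becomes part of the SQL text itself.
    query = (
        "SELECT 1 FROM users WHERE username = '" + username
        + "' AND password = '" + password + "'"
    )
    return conn.execute(query).fetchone() is not None


def login_parameterized(conn, username, password):
    # Safer: the ? placeholders keep the input as data, never as SQL.
    query = "SELECT 1 FROM users WHERE username = ? AND password = ?"
    return conn.execute(query, (username, password)).fetchone() is not None


conn = make_demo_db()
payload = "' OR '1'='1"  # classic injection string supplied as the password

print(login_vulnerable(conn, "alice", payload))     # True: attacker is logged in
print(login_parameterized(conn, "alice", payload))  # False: payload is just a wrong password
```

In the vulnerable version, the payload turns the `WHERE` clause into a condition that is always true. In the parameterized version, the same string is compared literally against the stored password and the login fails.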

This sounds pretty scary! But application security engineers have the awesome job of ensuring this type of thing doesn’t happen. They use a variety of tools and techniques to help the development team protect the application and their customers’ data from this type of attack.

- Source code review: A mostly manual process in which the application security engineer reads part of the source code to check for proper data validation and sanitization.

- Static testing: A software testing method that examines the program’s code but does not require the program to be executed while doing so. For example, static code analysis may check the data flow of untrusted user input into a web application and check whether the data executes as a command (which is an undesired result).

- Dynamic testing: A software testing method that examines the program’s behavior while the program runs, for example by sending it crafted inputs and observing how it responds.

- Automation testing: Uses test scripts to evaluate software by comparing the actual outcome of the code to its expected outcome.

- Separation of data from commands and queries: Keeps untrusted data separate from the commands and queries that process it, for example by using parameterized queries or APIs that pass user input as arguments instead of building a command string. Because the input is always treated as data and never interpreted as part of the command, an attacker can’t supply operating system commands through a web interface in order to execute those commands and upload malicious programs or obtain passwords.

- Allowlists: An allowlist defines the characters or commands that are permitted, and input validation rejects everything else, so that URL and form data can’t smuggle in unexpected commands. Where special characters can’t be rejected outright, they should be escaped or filtered. Examples of such characters include ( ) < > & * ' | = ? ; [ ] ^ ~ ! . " % @ / \ : + , `

- And more: MITRE’s Common Weakness Enumeration (CWE) provides a good resource on other considerations when protecting against command injection.
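Two of the techniques in the list above, allowlists and keeping data separate from commands, can be sketched in a few lines of Python. The hostname pattern and the helper names `is_allowed_hostname` and `echo_argument` are assumptions chosen for this illustration, not a complete validation policy. The second helper starts a child Python process with an argument list and no shell, so the input arrives as a single piece of data.

```python
import re
import subprocess
import sys

# Allowlist: letters, digits, dots, and hyphens only, starting with a letter or digit.
HOSTNAME_PATTERN = re.compile(r"[A-Za-z0-9][A-Za-z0-9.-]{0,252}")


def is_allowed_hostname(value):
    """Accept the value only if every character is on the allowlist."""
    return HOSTNAME_PATTERN.fullmatch(value) is not None


def echo_argument(value):
    """Pass the value to a child process as one argument, without a shell."""
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.argv[1])", value],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


malicious = "example.com; cat /etc/passwd"

print(is_allowed_hostname("example.com"))  # True
print(is_allowed_hostname(malicious))      # False: contains ';', ' ', and '/'
print(echo_argument(malicious))            # example.com; cat /etc/passwd
```

The last line shows that, because no shell parses the argument, the `;` has no special meaning and the second command never runs. In practice the two controls complement each other: validation rejects unexpected input early, and argument lists limit the damage if something slips through.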

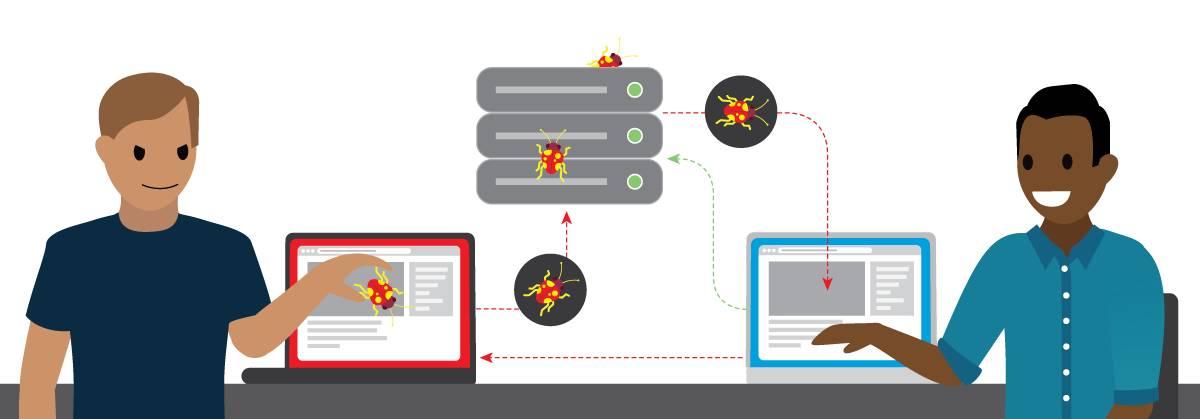

Injection is not the only risk of failing to secure the SDLC through data validation and sanitization and through code testing and review. The application may also be vulnerable to cross-site scripting (XSS). XSS occurs when an application includes untrusted data in a new web page without proper validation or escaping.

The image illustrates the flow of how XSS occurs. First, an attacker injects malicious code into a trusted website in order to send that code to an unsuspecting user. Second, the website saves the malicious script to a database. Next, the victim visits the website and requests data from the server, believing it to be safe. Then, the data containing the malicious script passes back through the web server to the user’s computer, where it executes. Finally, the script calls back to the attacker, compromising the user’s information, all while the user believes they are engaged in a safe online session with a trusted website.

By executing malicious scripts in a victim’s browser using XSS, a malicious actor can hijack a user’s session, deface a website, or redirect the user to a malicious site where they may be prompted to download malware or enter their credentials for the attacker to steal. As a result, the victim’s computer may become infected with malware, or their sensitive information may be stolen. The computer may also become part of a botnet that attackers use to launch distributed denial-of-service (DDoS) attacks, which flood an organization’s network with traffic and limit access for legitimate users.

Application security engineers need to be concerned about XSS because it is an easily exploitable attack vector and is a widespread security weakness that hackers can take advantage of using freely available and automated tools.

One estimate found XSS vulnerabilities in two-thirds of all applications. While this sounds scary, it also means that application security engineers have an exciting and important role to play in securing the SDLC. In doing so, engineers ensure that the application validates user input and does not store unsanitized input that can be viewed at a later time by another user or admin. They do this by separating untrusted data from active browser content, using coding frameworks that automatically escape untrusted output by design, and applying context-sensitive encoding.
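Context-sensitive encoding can be illustrated with Python's standard library. This is a minimal sketch, assuming a hypothetical comment feature and search link. The function names are invented for this example, and most web frameworks provide template engines that apply this kind of escaping automatically. The key idea is that the same untrusted value needs a different encoder depending on where it lands in the page.

```python
import html
from urllib.parse import quote


def render_comment_unsafe(comment):
    # UNSAFE: untrusted text is placed into the page as active HTML.
    return "<p>" + comment + "</p>"


def render_comment_escaped(comment):
    # HTML body context: encode characters that have meaning in HTML.
    return "<p>" + html.escape(comment, quote=True) + "</p>"


def render_search_link(term):
    # URL context: percent-encode the value, then HTML-escape the whole attribute.
    url = "/search?q=" + quote(term, safe="")
    return '<a href="' + html.escape(url, quote=True) + '">results</a>'


payload = "<script>alert('xss')</script>"

print(render_comment_unsafe(payload))
# <p><script>alert('xss')</script></p>
print(render_comment_escaped(payload))
# <p>&lt;script&gt;alert(&#x27;xss&#x27;)&lt;/script&gt;</p>
print(render_search_link("a b&c=d"))
# <a href="/search?q=a%20b%26c%3Dd">results</a>
```

With escaping applied, the browser displays the payload as text instead of running it as a script. Other contexts, such as values placed inside JavaScript or CSS, need their own encoders, which is why the encoding has to be chosen per context.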

Sum It Up

You’ve been introduced to several important considerations to help protect applications and their data throughout the SDLC. Now it’s time to learn more about another key consideration for an application security engineer: properly configuring application components.

Resources

- PDF: OWASP Proactive Controls for Developers

- External Site: OWASP Testing for SQL Injection

- External Site: OWASP Injection Prevention Cheat Sheet

- External Site: OWASP Cross Site Scripting Prevention Cheat Sheet