Plan Your Trust Strategy

Learning Objectives

After completing this unit, you’ll be able to:

- Create a risk profile by assessing AI risks for your project.

- Implement guardrails based on the risk profile.

Trailcast

If you'd like to listen to an audio recording of this module, please use the player below. When you’re finished listening to this recording, remember to come back to each unit, check out the resources, and complete the associated assessments.

Get Started with Trust

AI comes with certain risks. So how do you know your AI solution is ethical, safe, and responsible? By creating a trust strategy.

Your project’s trust strategy is your plan for mitigating risks to trust, such as keeping sensitive data secure, meeting regulatory requirements, and protecting your company’s reputation.

The first step in defining your trust strategy is to create a risk profile for your project. A risk profile informs how you manage and prioritize a project’s risks. It covers the type and level of risks, how likely negative effects are, and the level of disruption or costs for each type of risk. An effective risk profile tells you which risks to prioritize.

Areas of Risk

Answer questions in three main risk areas to create your risk profile: data leaks, regulatory requirements, and reputation harm. As an example, see how Becca answers these questions for her project.

Risk Area |

Description |

Questions to Ask |

Becca’s Response |

|---|---|---|---|

Data Leaks |

Data leaks happen when secure information is accidentally exposed. It can happen because of cyberattacks, unauthorized access, and exposing sensitive data to external AI models. To prevent data leaks, store your data securely and make sure only authorized users can access it. This risk area also includes protecting sensitive data, such as personal identification information (PII). |

|

Becca knows that Coral Cloud has a secure system for data storage. However, her project includes a human-in-the-loop, or a human interaction, where someone triggers the check-in process by telling Einstein to check in a guest. Becca sees that there’s a chance for data leaks here, because the executing user can find out where the guest is staying and for which dates. That’s dangerous information if it’s leaked to an unauthorized person. Becca’s project includes sensitive data such as full names, contact information, and credit card information. |

Regulatory Requirements |

Make sure your project meets the legal requirements for the jurisdiction and industry you’re operating in. If you don’t meet all regulations, you could face fines, reputation damage, and other legal repercussions. The legal team you identified in the first unit can help you identify and meet all regulations. |

|

Since Coral Cloud operates in the EU, Becca’s project needs to follow the General Data Protection Regulation (GDPR). Becca reaches out to the legal team to ask them to research what other regulations she needs to follow. |

Reputation Harm |

Since your AI project is interacting with your customers, it represents the company’s voice. AI can sometimes generate harmful or untrue responses because of dataset toxicity or technical attacks. |

|

If the AI-generated welcome emails include offensive language, Coral Cloud will be seen as insulting and unfriendly. They’ll lose valuable current and potential customers. In the hospitality industry, Becca knows reputation loss is a serious problem. |

Create a Risk Profile

Based on her evaluation of the different risk areas, Becca creates a risk profile for her project. 1 represents a very low risk, 5 represents a very high risk.

Risk Type |

Level of Negative Effects |

Likelihood of Negative Effects |

Disruptions or Costs |

|---|---|---|---|

Data Leaks |

4: If data leaks, guest safety and privacy is harmed. |

3: Coral Cloud’s data is stored securely, but other controls should be put in place to further address this risk. |

4: Loss of customer trust |

Regulatory Requirements |

5: Coral Cloud faces fines, other legal consequences, and loss of customer trust. |

5: If Becca’s project doesn’t meet regulations, it’s very likely Coral Cloud would face negative consequences. |

5: Legal consequences, fines, and loss of customer trust |

Reputation Harm |

5: If Coral Cloud’s reputation is harmed, they lose potential guests and profit. |

4: Becca needs to refine her prompt and implement other ways to protect against reputation harm. |

4: Loss of customer trust |

Based on the risk profile, Becca decides to prioritize regulatory requirements first, then reputation harm, then data leaks. She begins planning how to manage each risk.

Types of Guardrails

Now that you know your risks, implement AI guardrails. AI guardrails are mechanisms to ensure your AI project is operating legally and ethically. You need guardrails to prevent AI from causing harm with biased decisions, toxic language, and exposed data. Guardrails are necessary to protect your project from technical attacks.

There are three types of AI guardrails—security guardrails, technical guardrails, and ethical guardrails.

Security Guardrails

These guardrails ensure that the project complies with laws and regulations, and that private data and human rights are protected. Common tools here include secure data retrieval, data masking, and zero-data retention.

Secure data retrieval means that your project only accesses data the executing user is authorized to access. For example, if a person with no access to financial records triggers a response from the AI model, the model shouldn’t retrieve data related to financial records. Data masking means replacing sensitive data with placeholder data before exposing it to external models. This makes sure that sensitive data isn’t in danger of being leaked. Model providers enforce zero-data retention policies, which means that data isn’t stored beyond the immediate needs of the task. So after a response is generated, the data used disappears.

Technical Guardrails

These guardrails protect the project from technical attacks by hackers, such as prompt injection and jailbreaking, or other methods to force the model to expose sensitive information. Cyberattacks can cause your project to generate untrue or harmful responses.

Ethical Guardrails

These guardrails keep your project aligned with human values. This includes screening for toxicity and bias.

Toxicity is when an AI model generates hateful, abusive, and profane (HAP) or obscene content. Bias is when AI reflects harmful stereotypes, such as racial or gender stereotypes. As you can imagine, that’s a disaster! Since AI learns its responses, toxicity and bias could be a sign that your data is introducing unwanted language and ideas to your model. Toxicity detection identifies responses that might have toxic language, so you can review it manually and make adjustments to reduce toxicity.

Now you know what guardrails are and how they protect your project from risk. Next, look at how Becca implements guardrails in her trust strategy.

Coral Cloud’s Trust Strategy

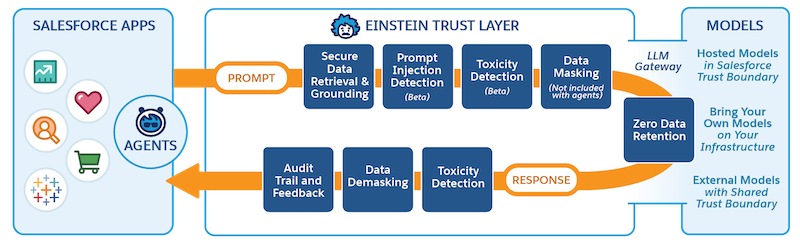

Implementing your trust strategy looks different depending on the system you’re using. Since Becca’s using Salesforce, her trust strategy is built on the Einstein Trust Layer. The trust layer is a collection of features, processes, and policies designed to safeguard data privacy, enhance AI accuracy, and promote responsible use of AI across the Salesforce ecosystem. When you create a project with Einstein Generative AI features, such as Prompt Builder in Becca’s project, the project automatically passes through the trust layer.

Becca defines a trust strategy that addresses each risk area, using features in the trust layer. She also visualizes the desired outcome. Here’s an excerpt from her trust strategy.

Risk Area |

Becca’s Trust Strategy |

Features in the Trust Layer |

Outcome |

|---|---|---|---|

Data Leaks |

|

|

|

Reputation Harm |

|

|

|

Before Becca finalizes her trust strategy, she meets with her legal and security team to make sure there are no gaps.

Next Steps

With a trust strategy under her belt, Becca’s done planning her project! She’s ready to begin the Build stage, where she’ll implement her project in Salesforce. Keep following Becca’s project in Connect Data 360 to Agentforce and Prompt Builder.

As you build your project, keep in mind that mitigating risk isn’t a one-and-done task. After you implement your project, monitor and audit your risk on an ongoing basis. Monitoring helps you identify problematic trends early and make adjustments. Review toxicity scores and feedback to identify where your solution went wrong, and make adjustments to eliminate risk factors.

Summary

This module explains the vital steps when preparing for your AI project. Now you know how to identify project stakeholders, set goals, and choose a technical solution. You understand how to prepare high-quality data and meet your project’s requirements. And you know how a trust strategy mitigates risks for your project and why it’s important to monitor risk.

With your new knowledge, you’re well on your way to launching a successful AI project! Before you start your rollout, be sure to complete Change Management for AI Implementation.

Resources

- PDF: NIST Artificial Intelligence Risk Management Framework

- Excel Spreadsheet: NIST AI Risk Assessment Template

- PDF: Building Trust in AI with a Human at the Helm

- Trailhead: Change Management for AI Implementation

- Trailhead: The Einstein Trust Layer

- Article: AI-Powered Cyberattacks

- Article: Understanding Why AI Guardrails Are Necessary: Ensuring Ethical and Responsible AI Use

- PDF: Mitigating LLM Risks Across Salesforce’s Gen AI Frontiers